Stateflow Overview (Previous Version: R2013a)

Design and simulate state charts using Stateflow. For an updated version of this video, visit: https://youtu.be/TuL8cFqDu6A

From playlist Event-Based Modeling

Model-Based Design for Predictive Maintenance, Part 5: Development of a Predictive Model

See the full playlist: https://www.youtube.com/playlist?list=PLn8PRpmsu08qe_LVgUHtDrSXiNz6XFcS0 After performing real-time tests and validating your algorithm, you can use it to detect whether there are any mechanical or electrical issues in your system. However, you can also use condition

From playlist Model-Based Design for Predictive Maintenance

Introduction to Random Signal Representation

http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. Introduction to the concept of a random signal, then review of probability density functions, mean, and variance for scalar quantities.

From playlist Random Signal Characterization

From playlist COMP0168 (2020/21)

Predictive Maintenance with MATLAB and Simulink

Companies that make industrial equipment are storing large amounts of machine data, with the notion that they will be able to extract value from it in the future. However, using this data to build accurate and robust models for prediction requires a rare combination of equipment, expertise

From playlist Predictive maintenance

Random Processes and Stationarity

http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. Introduction to describing random processes using first and second moments (mean and autocorrelation/autocovariance). Definition of a stationa

From playlist Random Signal Characterization

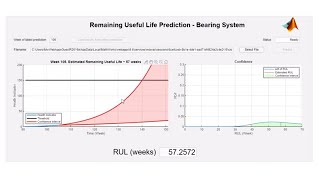

Model-Based Design for Predictive Maintenance, Part 6: Deployment of a Predictive Model

See the full playlist: https://www.youtube.com/playlist?list=PLn8PRpmsu08qe_LVgUHtDrSXiNz6XFcS0 This video shows how prognostics models work, how they perform, and how you can deploy them. You’ll learn how to deploy a remaining useful life estimation model either as a standalone applicatio

From playlist Model-Based Design for Predictive Maintenance

DeepMind x UCL RL Lecture Series - Deep Reinforcement Learning #2 [13/13]

Research Engineer Matteo Hessel covers general value functions, GVFs as auxiliary tasks, and explains how to deal with scaling issues in algorithms. Slides: https://dpmd.ai/deeprl2 Full video lecture series: https://dpmd.ai/DeepMindxUCL21

From playlist Learning resources

Stanford CS330: Multi-Task and Meta-Learning, 2019 | Lecture 8 - Model-Based Reinforcement Learning

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Assistant Professor Chelsea Finn, Stanford University http://cs330.stanford.edu/

From playlist Stanford CS330: Deep Multi-Task and Meta Learning

JEPA - A Path Towards Autonomous Machine Intelligence (Paper Explained)

#jepa #ai #machinelearning Yann LeCun's position paper on a path towards machine intelligence combines Self-Supervised Learning, Energy-Based Models, and hierarchical predictive embedding models to arrive at a system that can teach itself to learn useful abstractions at multiple levels a

From playlist Papers Explained

Using Deep Reinforcement Learning to Uncover the Decision-Making Mechanisms - L. Cross - 10/25/2019

"Using Deep Reinforcement Learning to Uncover the Decision-Making Mechanisms of the Brain." AI-4-Science Workshop, October 25, 2019 at Bechtel Residence Dining Hall, Caltech. Learn more about: - AI-4-science: https://www.ist.caltech.edu/ai4science/ - Events: https://www.ist.caltech.edu/

From playlist AI-4-Science Workshop

End-to-End Differentiable Proving: Tim Rocktäschel, University of Oxford

We introduce neural networks for end-to-end differentiable proving of queries to knowledge bases by operating on dense vector representations of symbols. These neural networks are constructed recursively by taking inspiration from the backward chaining algorithm as used in Prolog. Specific

From playlist Logic and learning workshop

A Deep Dive into NLP with PyTorch

In this tutorial, we will give you some deeper insights into recent developments in the field of Deep Learning NLP. The first part of the workshop will be an introduction to the dynamic deep learning library PyTorch. We will explain the key steps for building a basic model. In the second

From playlist Machine Learning

The video explains MuZero! MuZero makes AlphaZero more general by constructing representation and dynamics models such that it can play games without a perfect model of the environment. This dynamics function is unique because of the way its hidden state is tied into the policy and value

From playlist Game Playing AI: From AlphaGo to MuZero

Speakers | Stanford CS224U Natural Language Understanding | Spring 2021

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai To learn more about this course visit: https://online.stanford.edu/courses/cs224u-natural-language-understanding To follow along with the course schedule and s

From playlist Stanford CS224U: Natural Language Understanding | Spring 2021

Stanford CS224N: NLP with Deep Learning | Winter 2019 | Lecture 13 – Contextual Word Embeddings

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/30j472S Professor Christopher Manning, Stanford University http://onlinehub.stanford.edu/ Professor Christopher Manning Thomas M. Siebel Professor in Machine Lear

From playlist Stanford CS224N: Natural Language Processing with Deep Learning Course | Winter 2019

Lecture 14 – Contextual Vectors | Stanford CS224U: Natural Language Understanding | Spring 2019

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Professor Christopher Potts & Consulting Assistant Professor Bill MacCartney, Stanford University http://onlinehub.stanford.edu/ Professor Christopher Potts Pr

From playlist Stanford CS224U: Natural Language Understanding | Spring 2019

Model-Based Design for Predictive Maintenance, Part 2: Feature Extraction

See the full playlist: https://www.youtube.com/playlist?list=PLn8PRpmsu08qe_LVgUHtDrSXiNz6XFcS0 Learn how to extract useful condition indicators of your system. Condition indicators are important, as they can help you build both a classification model and a prognostic model. This video wal

From playlist Model-Based Design for Predictive Maintenance