This video introduces the topic of derangements of elements. mathispower4u.com

From playlist Counting (Discrete Math)

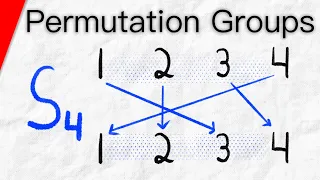

301.5C Definition and "Stack Notation" for Permutations

What are permutations? They're *bijective functions* from a finite set to itself. They form a group under function composition, and we use "stack notation" to denote them in this video.

From playlist Modern Algebra - Chapter 16 (permutations)
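
As a minimal sketch of the idea in the description above, a permutation can be modelled as a Python dict that maps each element of a finite set to its image. The helper names (`compose`, `inverse`, `stack_notation`) and the example permutations `sigma` and `tau` are illustrative assumptions, not taken from the video.

```python
def compose(f, g):
    """Return the composition f∘g (apply g first, then f)."""
    return {x: f[g[x]] for x in g}

def inverse(f):
    """Return the inverse permutation; it exists because f is a bijection."""
    return {image: x for x, image in f.items()}

def stack_notation(f):
    """Render f in two rows: the elements on top, their images below."""
    top = sorted(f)
    return " ".join(str(x) for x in top) + "\n" + " ".join(str(f[x]) for x in top)

# Hypothetical example permutations of the set {1, 2, 3}.
sigma = {1: 2, 2: 3, 3: 1}
tau = {1: 2, 2: 1, 3: 3}
identity = {1: 1, 2: 2, 3: 3}

print(stack_notation(compose(sigma, tau)))
print(compose(sigma, inverse(sigma)) == identity)  # group axiom: inverses
print(compose(sigma, tau) == compose(tau, sigma))  # composition need not commute
```

Composing in the two possible orders gives different results here, which illustrates that the group of permutations is generally not commutative.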

Introduction to this lecture series on perioperative management.

From playlist Perioperative Patient Care _ Demo

This project was created with Explain Everything™ Interactive Whiteboard for iPad.

From playlist Modern Algebra - Chapter 16 (permutations)

Permutation Groups and Symmetric Groups | Abstract Algebra

We introduce permutation groups and symmetric groups. We cover some permutation notation, composition of permutations, composition of functions in general, and prove that the permutations of a set make a group (with certain details omitted). #abstractalgebra #grouptheory We will see the

From playlist Abstract Algebra

From playlist Week 2: Language Modeling

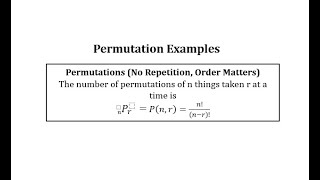

Ex: Evaluate a Combination and a Permutation - (n,r)

This video explains how to evaluate a combination and a permutation with the same value of n and r. Site: http://mathispower4u.com

From playlist Permutations and Combinations
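
A short sketch of evaluating a combination and a permutation for the same n and r, using the standard-library functions `math.comb` and `math.perm` (Python 3.8+). The values n = 5 and r = 2 are assumptions chosen for illustration, not the ones used in the video.

```python
import math

n, r = 5, 2  # hypothetical values for illustration

combinations = math.comb(n, r)  # n! / (r! * (n - r)!), order does not matter
permutations = math.perm(n, r)  # n! / (n - r)!, order matters

print(combinations, permutations)

# Each unordered selection of r items can be arranged in r! orders.
assert permutations == combinations * math.factorial(r)
```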

Natural Language Generation Metrics | Stanford CS224U Natural Language Understanding | Spring 2021

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai To learn more about this course visit: https://online.stanford.edu/courses/cs224u-natural-language-understanding To follow along with the course schedule and s

From playlist Stanford CS224U: Natural Language Understanding | Spring 2021

How you can use Perplexity to see how good a Language Model is [Lecture]

This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course: https://boydgraber.org/teaching/CMSC_723/ (Including homeworks and reading.) Music: https://soundcloud.com/alvin-grissom-ii/review

From playlist Computational Linguistics I

Language models are often evaluated with a metric called Perplexity. Feeling perplexed about it? Watch this video to get it all explained. This video is part of the Hugging Face course: http://huggingface.co/course Open in colab to run the code samples: https://colab.research.google.com/

From playlist Hugging Face Course Chapter 7
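
For readers who want the metric in concrete terms, here is a minimal sketch of perplexity as the exponential of the average negative log-probability that a model assigns to each token. The function name `perplexity` and the probability values are assumptions for illustration; this is not code from the Hugging Face course.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the mean negative log-probability per token."""
    mean_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log_prob)

# Hypothetical model that assigns probability 0.25 to every observed token:
# it is as uncertain as a uniform choice among 4 options.
uniform_probs = [0.25, 0.25, 0.25, 0.25]
print(perplexity(uniform_probs))  # approximately 4

# A more confident (hypothetical) model gets a lower perplexity.
confident_probs = [0.9, 0.8, 0.95, 0.85]
print(perplexity(confident_probs))
```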

ChatGPT Alternative: Perplexity by Perplexity.AI

Perplexity.AI is a new conversational search engine. By combining GPT-3.x with Bing, it gives an idea of what ChatGPT with Bing will be like later on with Microsoft. Try it out today at Perplexity.AI; it's free! perplexity.ai ask anything: https://www.perplexity.ai/ Another referenc

From playlist Large Language Models - ChatGPT, GPT-4, BioGPT and BLOOM LLM explained and working code examples

"Watermarking Language Models" paper and GPTZero EXPLAINED | How to detect text by ChatGPT?

Did ChatGPT write this text? We explain two ways of knowing whether AI has written a text: GPTZero and Watermarking language models. ► Sponsor: Cohere 👉 https://t1p.de/22srn Check out our daily #MachineLearning Quiz Questions: https://www.youtube.com/c/AICoffeeBreak/community 📜 Watermar

From playlist Explained AI/ML in your Coffee Break

Lecture 03 Perioperative management of the diabetic patient part 1

We move on to the perioperative care of the diabetic patient (part 1).

From playlist Perioperative Patient Care _ Demo

Professor Povey's perplexing puzzle - Can you solve it? | Alex Bellos puzzles

Take one coin and one infinite chessboard and what do you get? A perplexing mathematical puzzle, that’s what! Subscribe to Guardian Science and Tech ► http://bit.ly/substech Click here for a written version of the puzzle ► http://www.theguardian.com/science/2015/aug/31/can-you-solve-it-pr

From playlist Alex Bellos's Monday Puzzle

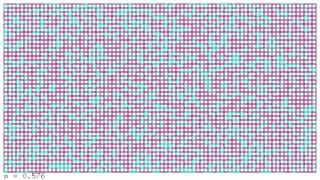

Bond percolation on a square lattice. Each edge of the lattice is open with probability p, independently of all others. p is varied from 0 to 1. For more details on the simulations, see http://www.univ-orleans.fr/mapmo/membres/berglund/ressim.html

From playlist Percolation
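
The model described above can be sketched in a few lines: each edge between neighbouring sites of an n × n square lattice is opened independently with probability p, and sites joined by open edges are merged into clusters. This is an assumed minimal implementation using union-find, not the code behind the linked simulations; the function name `largest_cluster` and its parameters are hypothetical.

```python
import random

def largest_cluster(n, p, seed=0):
    """Size of the largest cluster for bond percolation on an n x n lattice."""
    rng = random.Random(seed)
    parent = list(range(n * n))  # union-find forest over lattice sites

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def union(i, j):
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_i] = root_j

    for row in range(n):
        for col in range(n):
            site = row * n + col
            # Edge to the right neighbour, open with probability p.
            if col + 1 < n and rng.random() < p:
                union(site, site + 1)
            # Edge to the neighbour below, open with probability p.
            if row + 1 < n and rng.random() < p:
                union(site, site + n)

    sizes = {}
    for site in range(n * n):
        root = find(site)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values())

# Vary p from 0 to 1, as in the description.
for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(p, largest_cluster(50, p))
```

At p = 0 every site is its own cluster, and at p = 1 the whole lattice is a single cluster; the interesting behaviour lies in between.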

ALiBi - Train Short, Test Long: Attention with linear biases enables input length extrapolation

#alibi #transformers #attention Transformers are essentially set models that need additional inputs to make sense of sequence data. The most widespread additional inputs are position encodings or position embeddings, which add sequence index information in various forms. However, this has

From playlist Papers Explained