logarithm with negative base and negative input

What if a logarithm has both a negative base and a negative input? People often say we cannot have a negative number inside a logarithm. Most of the time log(negative) gives us a non-real complex number. But since (-2)^3 = -8, what do you think log base -2 of -8 is? Check

From playlist math for fun, complex world

Can you evaluate a log for a negative number

👉 Learn all about the properties of logarithms. The logarithm of a number a to a base b is the number n such that b raised to the power n gives a (i.e. log [base b] (a) = n means that b^n = a). The logarithm of a negative number is not defined. (i.e. it is no

From playlist Rules of Logarithms

#38. Based on the scatterplot, what is the linear correlation coefficient?

Please Subscribe here, thank you!!! https://goo.gl/JQ8Nys #38. Based on the scatterplot, what is the linear correlation coefficient?

From playlist Statistics Final Exam

Ex 2: Find a Z-score Given the Probability of Z Being Greater Than a Given Value

This video explains how to use a TI84 to determine a z-score given the probability of the z-score being greater than an unknown z-score. http://mathispower4u.com

From playlist The Normal Distribution

Prob & Stats - Bayes Theorem (15 of 24) What is Negative Predictive Value (NPV)?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what the negative predictive value (NPV) is. NPV is the probability that a patient with a negative test result is actually free from the disease (or the tested condition). NPV equals the propo

From playlist PROB & STATS 4 BAYES THEOREM

Evaluating logarithms without a calculator

👉 Learn all about the properties of logarithms. The logarithm of a number a to a base b is the number n such that b raised to the power n gives a (i.e. log [base b] (a) = n means that b^n = a). The logarithm of a negative number is not defined. (i.e. it is no

From playlist Rules of Logarithms

Ex 3: Find the Probability of a Z-score Being Between Two Z-scores on a Newer TI84

This video explains how to use a newer TI84 graphing calculator to determine the probability that a z-score is between two given z-scores for a standard normal distribution. http://mathispower4u.com

From playlist The Normal Distribution

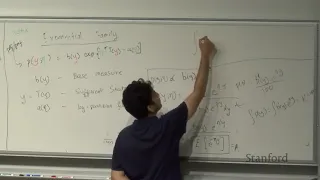

Stanford CS229: Machine Learning | Summer 2019 | Lecture 6 - Exponential Family & GLM

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3Eb7mIi Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

From playlist COMP0168 (2020/21)

Normal Distribution: Find Probability Using With Z-scores Using Tables

This lesson explains how to use tables to determine the probability that a data value will have a z-score greater than or less than a given z-score. It also shows how to determine the probability between two z-scores. Site: http://mathispower4u.com

From playlist The Normal Distribution

Lecture 9.5 — The Bayesian interpretation of weight decay [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Lecture 9E : The Bayesian interpretation of weight decay

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 9E : The Bayesian interpretation of weight decay

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Stanford CS229: Machine Learning | Summer 2019 | Lecture 3 - Probability and Statistics

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3potDOW Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

Singular Learning Theory - Seminar 7 - Asymptotics of the free energy

This seminar series is an introduction to Watanabe's Singular Learning Theory, a theory about algebraic geometry and statistical learning theory. In this seminar Edmund Lau starts the presentation of how to prove the asymptotic formula for the free energy in terms of the "loss" and "entrop

From playlist Singular Learning Theory

When you cannot evaluate a log

👉 Learn all about the properties of logarithms. The logarithm of a number a to a base b is the number n such that b raised to the power n gives a (i.e. log [base b] (a) = n means that b^n = a). The logarithm of a negative number is not defined. (i.e. it is no

From playlist Rules of Logarithms

Jonas Wallin: Scaling of scoring rules

CIRM VIRTUAL EVENT. Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 02, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

Locally Weighted & Logistic Regression | Stanford CS229: Machine Learning - Lecture 3 (Autumn 2018)

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/2ZdTL4x Andrew Ng Adjunct Professor of Computer Science https://www.andrewng.org/ To follow along with the course schedule and syllabus, visit: http://cs229.sta

From playlist Stanford CS229: Machine Learning Full Course taught by Andrew Ng | Autumn 2018

Overview of log properties - Inverse properties

👉 Learn all about the properties of logarithms. The logarithm of a number a to a base b is the number n such that b raised to the power n gives a (i.e. log [base b] (a) = n means that b^n = a). The logarithm of a negative number is not defined. (i.e. it is no

From playlist Rules of Logarithms

From playlist Plenary talks One World Symposium 2020