Understanding and calculating probabilities involving the difference of two sample proportions, using the sampling distribution of the difference of sample proportions

From playlist Unit 7 Probability C: Sampling Distributions & Simulation
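A minimal sketch of the calculation, assuming two independent random samples that are large enough for the normal approximation (a common rule of thumb is at least 10 expected successes and 10 expected failures in each sample). The sampling distribution of p̂1 − p̂2 then has mean p1 − p2 and standard deviation sqrt(p1(1 − p1)/n1 + p2(1 − p2)/n2). The function names and the example proportions are hypothetical, chosen only to illustrate the arithmetic.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, written with math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def diff_sampling_dist(p1, n1, p2, n2):
    """Mean and standard deviation of p1_hat - p2_hat for two independent samples."""
    mean = p1 - p2
    sd = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return mean, sd

def prob_diff_greater_than(d, p1, n1, p2, n2):
    """Approximate P(p1_hat - p2_hat > d) using the normal approximation."""
    mean, sd = diff_sampling_dist(p1, n1, p2, n2)
    return 1.0 - normal_cdf((d - mean) / sd)

# Hypothetical populations: p1 = 0.60 with n1 = 200, p2 = 0.50 with n2 = 150.
mean, sd = diff_sampling_dist(0.60, 200, 0.50, 150)
p = prob_diff_greater_than(0.0, 0.60, 200, 0.50, 150)
print(f"mean={mean:.3f}, sd={sd:.4f}, P(p1_hat - p2_hat > 0) ~ {p:.3f}")
```

The same z-score approach gives "less than" or "between" probabilities by taking the complement or a difference of two CDF values.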

How to find the probability of consecutive events

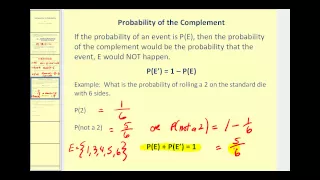

👉 Learn how to find the conditional probability of an event. Probability is the chance of an event occurring or not occurring. When all outcomes are equally likely, the probability of an event is the number of favorable outcomes divided by the total number of possible outcomes. Conditional probability is the chance of an event occurring

From playlist Probability
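For consecutive events, the multiplication rule P(A and B) = P(A) · P(B | A) chains conditional probabilities together: each factor is the probability of the next event given that the earlier ones already happened. The sketch below, which assumes draws from a finite pool such as a deck of cards, shows how drawing without replacement changes each later factor. The helper name is hypothetical.

```python
from fractions import Fraction

def prob_consecutive(favorable, total, n_draws, replace=False):
    """P(all n_draws draws are favorable), built with the multiplication rule."""
    p = Fraction(1)
    for _ in range(n_draws):
        # Probability of a favorable draw, given every earlier draw was favorable.
        p *= Fraction(favorable, total)
        if not replace:
            # Without replacement, one favorable item leaves the pool each time.
            favorable -= 1
            total -= 1
    return p

print(prob_consecutive(4, 52, 2))                # two aces in a row, no replacement: 1/221
print(prob_consecutive(4, 52, 2, replace=True))  # two aces in a row, with replacement: 1/169
```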

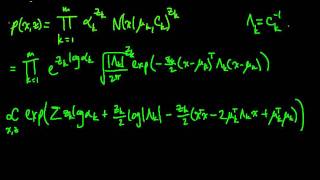

(ML 16.7) EM for the Gaussian mixture model (part 1)

Applying EM (Expectation-Maximization) to estimate the parameters of a Gaussian mixture model. Here we use the alternate formulation presented for (unconstrained) exponential families.

From playlist Machine Learning
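As a rough illustration of EM for a Gaussian mixture, here is a sketch for one-dimensional data. It uses the standard textbook E-step (responsibilities) and M-step (weighted maximum-likelihood updates) rather than the exponential-family formulation mentioned in the video, and the quantile-based initialisation, iteration count, and variance floor are assumptions made for the example.

```python
import math
import random

def log_normal_pdf(x, mu, var):
    """Log density of N(mu, var) at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)

def em_gmm_1d(data, k, n_iter=200, min_var=1e-6):
    """Fit a k-component 1-D Gaussian mixture with EM; returns (weights, means, variances)."""
    n = len(data)
    xs = sorted(data)
    # Assumed initialisation: means at evenly spaced quantiles, equal weights,
    # every component starting with the overall variance of the data.
    overall_mean = sum(xs) / n
    overall_var = sum((x - overall_mean) ** 2 for x in xs) / n
    means = [xs[int((j + 0.5) * n / k)] for j in range(k)]
    variances = [overall_var] * k
    weights = [1.0 / k] * k

    for _ in range(n_iter):
        # E-step: responsibilities r[i][j] = P(component j | x_i), computed with log-sum-exp.
        resp = []
        for x in data:
            logp = [math.log(w) + log_normal_pdf(x, m, v)
                    for w, m, v in zip(weights, means, variances)]
            top = max(logp)
            p = [math.exp(lp - top) for lp in logp]
            total = sum(p)
            resp.append([pj / total for pj in p])

        # M-step: re-estimate each component from its responsibility-weighted points.
        for j in range(k):
            nj = max(sum(r[j] for r in resp), 1e-12)  # guard against an empty component
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var_j, min_var)  # floor keeps a component from collapsing
    return weights, means, variances

# Synthetic data from two well-separated Gaussians (illustrative only).
rng = random.Random(1)
data = [rng.gauss(0, 1) for _ in range(300)] + [rng.gauss(6, 1) for _ in range(300)]
weights, means, variances = em_gmm_1d(data, 2)
print(weights, means, variances)
```

A fixed iteration count keeps the sketch short; a fuller version would stop once the log-likelihood stops increasing.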

Learn to find the "or" probability from a tree diagram

👉 Learn how to find the conditional probability of an event. Probability is the chance of an event occurring or not occurring. When all outcomes are equally likely, the probability of an event is the number of favorable outcomes divided by the total number of possible outcomes. Conditional probability is the chance of an event occurring

From playlist Probability
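One way to read an "or" probability off a tree diagram is to multiply along each branch to get the leaf probabilities, then add the leaves where at least one of the events happens. The sketch assumes a hypothetical bag of 3 red and 2 blue marbles with two draws without replacement; the result agrees with the addition rule P(A or B) = P(A) + P(B) − P(A and B).

```python
from fractions import Fraction
from itertools import product

def tree_paths(counts):
    """Leaf probabilities of a two-draw tree (no replacement) for the given color counts."""
    total = sum(counts.values())
    paths = {}
    for first, second in product(counts, repeat=2):
        p_first = Fraction(counts[first], total)
        remaining = counts[second] - (1 if second == first else 0)
        p_second = Fraction(remaining, total - 1)  # conditional on the first draw
        paths[(first, second)] = p_first * p_second  # multiply along the branch
    return paths

paths = tree_paths({"red": 3, "blue": 2})
# "Or" event: first draw red OR second draw red -> add every matching leaf.
p_or = sum(p for (a, b), p in paths.items() if a == "red" or b == "red")
print(p_or)  # 9/10
```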

(PP 5.4) Independence, Covariance, and Correlation

(0:00) Definition of independent random variables. (5:10) Characterizations of independence. (10:54) Definition of covariance. (13:10) Definition of correlation. A playlist of the Probability Primer series is available here: http://www.youtube.com/view_play_list?p=17567A1A3F5DB5E4

From playlist Probability Theory
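The definitions of independence, covariance, and correlation can be checked directly on a small joint probability mass function. This sketch (helper names are hypothetical) uses the classic example X uniform on {−1, 0, 1} with Y = X², which has zero covariance even though Y is completely determined by X: independence implies zero covariance, but not the other way around.

```python
import math
from fractions import Fraction

def marginals(joint):
    """Marginal pmfs p_X, p_Y from a joint pmf given as {(x, y): p}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0) + p
        py[y] = py.get(y, 0) + p
    return px, py

def is_independent(joint):
    """X and Y are independent iff p(x, y) = p_X(x) * p_Y(y) for every pair."""
    px, py = marginals(joint)
    return all(joint.get((x, y), 0) == px[x] * py[y] for x in px for y in py)

def covariance(joint):
    """Cov(X, Y) = E[XY] - E[X]E[Y]."""
    ex = sum(x * p for (x, _), p in joint.items())
    ey = sum(y * p for (_, y), p in joint.items())
    exy = sum(x * y * p for (x, y), p in joint.items())
    return exy - ex * ey

def correlation(joint):
    """Corr(X, Y) = Cov(X, Y) / (sd(X) * sd(Y)); assumes both variances are nonzero."""
    px, py = marginals(joint)
    var_x = sum(x * x * p for x, p in px.items()) - sum(x * p for x, p in px.items()) ** 2
    var_y = sum(y * y * p for y, p in py.items()) - sum(y * p for y, p in py.items()) ** 2
    return float(covariance(joint)) / math.sqrt(float(var_x * var_y))

third = Fraction(1, 3)
dependent = {(-1, 1): third, (0, 0): third, (1, 1): third}    # Y = X**2
identical = {(-1, -1): third, (0, 0): third, (1, 1): third}   # Y = X

print(is_independent(dependent), covariance(dependent))  # False 0
print(correlation(identical))
```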

This video introduces probability and determines the probability of basic events. http://mathispower4u.yolasite.com/

From playlist Counting and Probability
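For basic events with equally likely outcomes, probability is the count of favorable outcomes over the size of the sample space. A small sketch, assuming fair dice; the helper name is hypothetical.

```python
from fractions import Fraction
from itertools import product

def classical_probability(sample_space, event):
    """P(E) = |E| / |S|; valid only when every outcome in S is equally likely."""
    outcomes = list(sample_space)
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))

two_dice = list(product(range(1, 7), repeat=2))  # 36 ordered outcomes
print(classical_probability(two_dice, lambda o: sum(o) == 7))    # 1/6
print(classical_probability(range(1, 7), lambda o: o % 2 == 0))  # 1/2
```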

Covariance (1 of 17) What is Covariance? in Relation to Variance and Correlation

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate, https://www.patreon.com/user?u=3236071. We will learn the difference between the variance and the covariance. A variance (s^2) is a measure of how spread out the numbers of

From playlist COVARIANCE AND VARIANCE
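The relationship between the sample variance, the sample covariance, and the correlation can be made concrete in a few lines: the variance s² is simply the covariance of a variable with itself, and the correlation rescales the covariance by both standard deviations. This is a sketch using the n − 1 (sample) denominator; the data are made up for illustration.

```python
import math

def sample_mean(xs):
    return sum(xs) / len(xs)

def sample_covariance(xs, ys):
    """s_xy = sum((x - x_bar) * (y - y_bar)) / (n - 1)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    mx, my = sample_mean(xs), sample_mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)

def sample_variance(xs):
    """s^2 is the covariance of a variable with itself."""
    return sample_covariance(xs, xs)

def sample_correlation(xs, ys):
    """r = s_xy / (s_x * s_y), a unit-free version of the covariance."""
    return sample_covariance(xs, ys) / math.sqrt(sample_variance(xs) * sample_variance(ys))

hours = [1, 2, 3, 4, 5]        # hypothetical data
score = [52, 55, 61, 64, 68]
print(sample_variance(hours))            # 2.5
print(sample_covariance(hours, score))   # 10.25
print(sample_correlation(hours, score))
```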

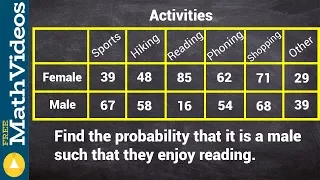

Finding the conditional probability from a two way frequency table

👉 Learn how to find the conditional probability of an event. Probability is the chance of an event occurring or not occurring. When all outcomes are equally likely, the probability of an event is the number of favorable outcomes divided by the total number of possible outcomes. Conditional probability is the chance of an event occurring

From playlist Probability
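With a two-way frequency table, P(A | B) is the count in the cell where A and B overlap divided by the total for the "given" row or column. The table below is hypothetical; the example also shows that P(A | B) and P(B | A) generally differ because they divide by different totals.

```python
from fractions import Fraction

# Hypothetical two-way frequency table: (grade, sport) -> count.
table = {
    ("9th", "soccer"): 30, ("9th", "basketball"): 20,
    ("10th", "soccer"): 25, ("10th", "basketball"): 25,
}

def conditional_probability(table, event, given):
    """P(event | given) = count(event and given) / count(given)."""
    given_total = sum(n for cell, n in table.items() if given(cell))
    joint = sum(n for cell, n in table.items() if given(cell) and event(cell))
    return Fraction(joint, given_total)

# P(soccer | 9th grade) = 30 / 50
p = conditional_probability(table, event=lambda c: c[1] == "soccer", given=lambda c: c[0] == "9th")
# P(9th grade | soccer) = 30 / 55
q = conditional_probability(table, event=lambda c: c[0] == "9th", given=lambda c: c[1] == "soccer")
print(p, q)  # 3/5 6/11
```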

(ML 16.6) Gaussian mixture model (Mixture of Gaussians)

Introduction to the mixture of Gaussians, a.k.a. Gaussian mixture model (GMM). This is often used for density estimation and clustering.

From playlist Machine Learning
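A Gaussian mixture model has density p(x) = Σ_k π_k N(x | μ_k, σ_k²), and it can also be read generatively: pick component k with probability π_k, then draw from that Gaussian. The sketch below shows both views for a one-dimensional mixture; the parameters are arbitrary and the function names are hypothetical.

```python
import math
import random

def gmm_pdf(x, weights, means, stds):
    """Mixture density: sum over k of pi_k * N(x | mu_k, sigma_k^2)."""
    return sum(
        w * math.exp(-0.5 * ((x - m) / s) ** 2) / (s * math.sqrt(2 * math.pi))
        for w, m, s in zip(weights, means, stds)
    )

def gmm_sample(n, weights, means, stds, seed=0):
    """Generative view: choose a component with probability pi_k, then sample from it."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        k = rng.choices(range(len(weights)), weights=weights)[0]
        draws.append(rng.gauss(means[k], stds[k]))
    return draws

# Illustrative two-component mixture.
weights, means, stds = [0.3, 0.7], [-2.0, 3.0], [1.0, 0.5]
print(gmm_pdf(0.0, weights, means, stds))
samples = gmm_sample(20000, weights, means, stds)
print(sum(samples) / len(samples))  # should be near 0.3 * (-2) + 0.7 * 3 = 1.5
```

For density estimation the fitted p(x) is used directly; for clustering, each point is assigned to the component most likely to have generated it.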

Clustering (4): Gaussian Mixture Models and EM

Gaussian mixture models for clustering, including the Expectation Maximization (EM) algorithm for learning their parameters.

From playlist cs273a

Mixture Models 5: how many Gaussians?

Full lecture: http://bit.ly/EM-alg How many components should we use in our mixture model? We can cross-validate to optimise the likelihood (or some other objective function). We can also use Occam's razor, formalised as the Bayes Information Criterion (BIC) or Akaike Information Criterio

From playlist Mixture Models
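The BIC and AIC trade fit against complexity: BIC = p ln n − 2 ln L̂ and AIC = 2p − 2 ln L̂, where L̂ is the maximised likelihood, p the number of free parameters, and n the number of data points; lower is better. For a one-dimensional mixture of K Gaussians, p = 3K − 1 (K means, K variances, K − 1 free mixing weights). The log-likelihood values in the usage example are made-up placeholders, not results from any real fit; they only show how the two criteria can disagree.

```python
import math

def gmm_1d_num_params(k):
    """Free parameters of a 1-D GMM: k means, k variances, k - 1 mixing weights."""
    return 3 * k - 1

def bic(log_likelihood, num_params, n):
    return num_params * math.log(n) - 2.0 * log_likelihood

def aic(log_likelihood, num_params):
    return 2.0 * num_params - 2.0 * log_likelihood

def choose_k(log_likelihoods, n, criterion="bic"):
    """Pick the k with the lowest criterion; log_likelihoods maps k -> max log-likelihood."""
    def score(k):
        p = gmm_1d_num_params(k)
        ll = log_likelihoods[k]
        return bic(ll, p, n) if criterion == "bic" else aic(ll, p)
    return min(log_likelihoods, key=score)

# Placeholder log-likelihoods for k = 1, 2, 3 components on n = 500 points.
placeholder_ll = {1: -1200.0, 2: -1050.0, 3: -1046.0}
print(choose_k(placeholder_ll, 500))                   # 2
print(choose_k(placeholder_ll, 500, criterion="aic"))  # 3
```

Because ln n exceeds 2 once n ≥ 8, BIC penalises each extra parameter more heavily than AIC, so it tends to favor fewer components.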

Clustering and Classification: Advanced Methods, Part 2

Data Science for Biologists. Clustering and Classification: Advanced Methods, Part 2. Course website: data4bio.com. Instructors: Nathan Kutz (faculty.washington.edu/kutz), Bing Brunton (faculty.washington.edu/bbrunton), Steve Brunton (faculty.washington.edu/sbrunton).

From playlist Data Science for Biologists

Learning probability distributions; What can, What can't be done - Shai Ben-David

Seminar on Theoretical Machine Learning. Topic: Learning probability distributions; What can, What can't be done. Speaker: Shai Ben-David. Affiliation: University of Waterloo. Date: May 7, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Sylvia Frühwirth-Schnatter: Bayesian econometrics in the Big Data Era

Abstract: Data mining methods based on finite mixture models are quite common in many areas of applied science, such as marketing, to segment data and to identify subgroups with specific features. Recent work shows that these methods are also useful in micro econometrics to analyze the beh

From playlist Probability and Statistics

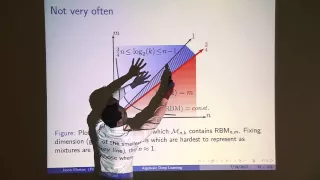

Jason Morton: "An Algebraic Perspective on Deep Learning, Pt. 3"

Graduate Summer School 2012: Deep Learning, Feature Learning. "An Algebraic Perspective on Deep Learning, Pt. 3". Jason Morton, Pennsylvania State University. Institute for Pure and Applied Mathematics, UCLA. July 20, 2012. For more information: https://www.ipam.ucla.edu/programs/summer-scho

From playlist GSS2012: Deep Learning, Feature Learning

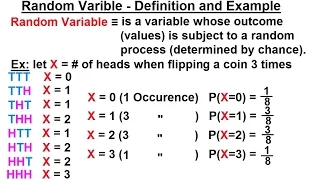

Prob & Stats - Random Variable & Prob Distribution (1 of 53) Random Variable

Visit http://ilectureonline.com for more math and science lectures! In this video I will define a random variable and give an example of one. Next video in series: http://youtu.be/aEB07VIIfKs

From playlist iLecturesOnline: Probability & Stats 2: Random Variable & Probability Distribution
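A random variable is a function that assigns a number to each outcome of an experiment, and its probability distribution follows from counting which outcomes map to each value. A small sketch, assuming three tosses of a fair coin with X = number of heads; the helper names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

sample_space = list(product("HT", repeat=3))  # 8 equally likely outcomes

def num_heads(outcome):
    """The random variable X: maps an outcome such as ('H', 'T', 'H') to a number."""
    return outcome.count("H")

def distribution(rv, space):
    """Probability mass function of rv, assuming equally likely outcomes."""
    counts = Counter(rv(o) for o in space)
    return {x: Fraction(c, len(space)) for x, c in sorted(counts.items())}

pmf = distribution(num_heads, sample_space)
print(pmf)  # {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}
```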

Learning from Multiple Biased Sources - Clayton Scott

Seminar on Theoretical Machine Learning Topic: Learning from Multiple Biased Sources Speaker: Clayton Scott Affiliation: University of Michigan Date: February 25, 2020 For more video please visit http://video.ias.edu

From playlist Mathematics