SYN_018 - Linguistic Micro-Lectures: Recursion

In this short micro-lecture, Victoria Galarneau, one of Prof. Handke's students, discusses the term 'recursion', a central notion in syntax.

From playlist Micro-Lectures - Syntax

From playlist Week 4 2015 Shorts

A Beginner's Guide to Recursion

Recursion has an intimidating reputation for being the advanced skill of coding sorcerers. But in this tutorial we look behind the curtain of this formidable technique to discover the simple ideas under it. Through live coding demos in the interactive shell, we'll answer the following que

From playlist Software Development
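As a rough illustration of the kind of simple idea such a tutorial covers, here is a minimal sketch of a recursive function in Python. The function name `factorial` and the choice of example are assumptions for illustration and are not taken from the video. A recursive function needs a base case that stops the recursion and a recursive case that calls itself on a smaller input.

```python
def factorial(n: int) -> int:
    """Return n! for a non-negative integer n (hypothetical example)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        # Base case: 0! and 1! are both 1, so the recursion stops here.
        return 1
    # Recursive case: reduce the problem to a smaller instance of itself.
    return n * factorial(n - 1)


print(factorial(5))  # 5 * 4 * 3 * 2 * 1
```

Without the base case the function would keep calling itself until Python raises a `RecursionError`.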

Recursive Functions (Discrete Math)

This video introduces recursive formulas.

From playlist Functions (Discrete Math)

Sequences: Introduction to Solving Recurrence Relations

This video introduces solving recurrence relations by inspection, telescoping, and the characteristic root technique. mathispower4u.com

From playlist Sequences (Discrete Math)
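To make the characteristic root technique concrete, here is a small sketch in Python. The recurrence a_n = 5a_(n-1) - 6a_(n-2) with a_0 = 0 and a_1 = 1 is an assumed example, not one taken from the video. Its characteristic equation r^2 - 5r + 6 = 0 has roots 2 and 3, and fitting the initial values gives the closed form a_n = 3^n - 2^n. The code checks that the closed form agrees with the recurrence.

```python
def by_recurrence(n: int) -> int:
    """Compute a_n by iterating a_n = 5*a_(n-1) - 6*a_(n-2), a_0 = 0, a_1 = 1."""
    prev, curr = 0, 1  # a_0 and a_1
    for _ in range(n):
        prev, curr = curr, 5 * curr - 6 * prev
    return prev


def by_closed_form(n: int) -> int:
    """Closed form obtained from the characteristic roots 2 and 3."""
    return 3 ** n - 2 ** n


# The two definitions should agree term by term.
for n in range(10):
    assert by_recurrence(n) == by_closed_form(n)

print([by_closed_form(n) for n in range(6)])
```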

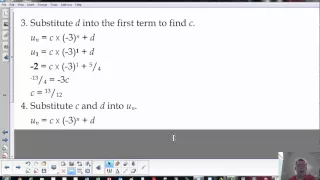

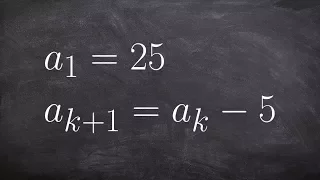

Applying the recursive formula to a sequence to determine the first five terms

👉 Learn all about recursive sequences. Recursive form is a way of expressing sequences, distinct from the explicit form. In the recursive form, each term of a sequence is expressed in terms of the preceding term, unlike in the explicit form, where each term is expressed in

From playlist Sequences
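The idea of generating terms from a recursive formula can be sketched in Python as follows. The helper `first_terms` and both sample sequences are assumptions chosen for illustration and do not come from the video. Each new term is computed only from the term before it, which is what distinguishes the recursive form from an explicit formula in n.

```python
from typing import Callable, List


def first_terms(first: float, rule: Callable[[float], float], count: int = 5) -> List[float]:
    """Return the first `count` terms, applying `rule` to the preceding term."""
    if count <= 0:
        return []
    terms = [first]
    while len(terms) < count:
        # Each term depends only on the one immediately before it.
        terms.append(rule(terms[-1]))
    return terms


# Arithmetic example: a_1 = 3, a_n = a_(n-1) + 4
print(first_terms(3, lambda prev: prev + 4))

# Geometric example: a_1 = 2, a_n = 3 * a_(n-1)
print(first_terms(2, lambda prev: 3 * prev))
```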

Building Your Own Dynamic Language

February 14, 2007 lecture by Ian Piumarta for the Stanford University Computer Systems Colloquium (EE 380). Ian describes several significant aspects of the design and implementation of a programming environment that, along with a programming language, exhibits the properties desired of th

From playlist Course | Computer Systems Laboratory Colloquium (2006-2007)

Lecture 14: Tree Recursive Neural Networks and Constituency Parsing

Lecture 14 looks at compositionality and recursion, followed by structure prediction with a simple Tree RNN: Parsing. The research highlight "Deep Reinforcement Learning for Dialogue Generation" is covered, as is backpropagation through structure. Key phrases: RNN, Recursive Neural Networks, MV-RN

From playlist Lecture Collection | Natural Language Processing with Deep Learning (Winter 2017)

Parser and Lexer — How to Create a Compiler part 1/5 — Converting text into an Abstract Syntax Tree

In this tool-assisted education video I create a parser in C++ for a B-like programming language using GNU Bison. For the lexical analysis, a lexer is generated using re2c. This is part of a multi-episode series. In the next video, we will focus on optimization. Become a member:

From playlist Creating a Compiler

Python - Analyzing Sentence Structure

Lecturer: Dr. Erin M. Buchanan. Summer 2019. https://www.patreon.com/statisticsofdoom This video covers how to analyze sentence structure using Python and nltk. This video scales up the parsing discussion from the previous chapter, looking more at parse trees and how to parse effectively. Y

From playlist Natural Language Processing

R & Python - Parsing Part 1 (2022)

Lecturer: Dr. Erin M. Buchanan. Spring 2022. https://www.patreon.com/statisticsofdoom This video is part of my Natural Language Processing course. This video covers parsing, which is creating sentence structure for understanding meaning. You will learn both traditional constituency parsing

From playlist Natural Language Processing

Stanford CS224N: NLP with Deep Learning | Winter 2019 | Lecture 18 – Constituency Parsing, TreeRNNs

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3wL2FCD Professor Christopher Manning, Stanford University http://onlinehub.stanford.edu/ Professor Christopher Manning Thomas M. Siebel Professor in Machine Lear

From playlist Stanford CS224N: Natural Language Processing with Deep Learning Course | Winter 2019

How to use the recursive formula to evaluate the first five terms

👉 Learn all about recursive sequences. Recursive form is a way of expressing sequences, distinct from the explicit form. In the recursive form, each term of a sequence is expressed in terms of the preceding term, unlike in the explicit form, where each term is expressed in

From playlist Sequences

Semantics of Higher Inductive Types - Michael Shulman

Michael Shulman, University of California, San Diego; Member, School of Mathematics. February 27, 2013.

From playlist Mathematics

Deep Learning 6: Deep Learning for NLP

From playlist Learning resources

MountainWest RubyConf 2015 - Standing on the Shoulders of Giants

By Ryan Davis. Ruby is a fantastic language, but it could be better. While it has done a terrific job of taking ideas from languages like Smalltalk, Lisp, and (to an extent) Perl, it hasn’t done nearly enough of it. Big thinkers like Alan Kay & Dan Ingalls (Smalltalk), David Ungar (Self), G

From playlist MWRC 2015

Applying the recursive formula to a geometric sequence

👉 Learn all about recursive sequences. Recursive form is a way of expressing sequences, distinct from the explicit form. In the recursive form, each term of a sequence is expressed in terms of the preceding term, unlike in the explicit form, where each term is expressed in

From playlist Sequences

DeepMind x UCL | Deep Learning Lectures | 9/12 | Generative Adversarial Networks

Generative adversarial networks (GANs), first proposed by Ian Goodfellow et al. in 2014, have emerged as one of the most promising approaches to generative modeling, particularly for image synthesis. In their most basic form, they consist of two "competing" networks: a generator which trie

From playlist Learning resources