(ML 7.7.A1) Dirichlet distribution

Definition of the Dirichlet distribution, what it looks like, intuition for what the parameters control, and some statistics: mean, mode, and variance.

From playlist Machine Learning
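The mean, mode, and variance listed above all follow from the total concentration α0 = Σα_k. Below is a minimal stdlib sketch. The function names `dirichlet_stats` and `sample_dirichlet` are hypothetical and chosen for illustration. The interior mode formula only applies when every α_k > 1, and sampling uses the standard construction of normalizing independent Gamma(α_k, 1) draws.

```python
import random


def dirichlet_stats(alpha):
    """Per-component mean, mode, and variance of Dir(alpha)."""
    if any(a <= 0 for a in alpha):
        raise ValueError("all concentration parameters must be positive")
    k = len(alpha)
    a0 = sum(alpha)  # total concentration alpha_0
    mean = [a / a0 for a in alpha]
    # The mode (alpha_k - 1) / (alpha_0 - K) lies inside the simplex only if every alpha_k > 1.
    mode = [(a - 1) / (a0 - k) for a in alpha] if all(a > 1 for a in alpha) else None
    var = [a * (a0 - a) / (a0 ** 2 * (a0 + 1)) for a in alpha]
    return mean, mode, var


def sample_dirichlet(alpha, rng=random):
    """Draw one point on the simplex by normalizing independent Gamma(alpha_k, 1) draws."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [x / total for x in g]


mean, mode, var = dirichlet_stats([2.0, 3.0, 5.0])
print(mean)  # [0.2, 0.3, 0.5]
```

Larger α0 with the same proportions keeps the mean fixed but shrinks the variance, which is the "concentration" intuition for the parameters.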

(ML 7.8) Dirichlet-Categorical model (part 2)

The Dirichlet distribution is a conjugate prior for the Categorical distribution (i.e., a PMF on a finite set). We derive the posterior distribution and the (posterior) predictive distribution under this model.

From playlist Machine Learning
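Conjugacy makes the update described above a matter of counting. With a Dir(α) prior and observed category counts n_k, the posterior is Dir(α_k + n_k), and the predictive probability of category k is (α_k + n_k) / (α0 + n). Below is a minimal stdlib sketch, assuming categories are encoded as integers 0..K-1. The function name `dirichlet_categorical_update` is hypothetical.

```python
from collections import Counter


def dirichlet_categorical_update(alpha, data):
    """Return posterior Dirichlet parameters and the posterior predictive PMF.

    alpha: prior concentration parameters, one per category 0..K-1.
    data:  observed category indices (ints in range(K)).
    """
    k = len(alpha)
    counts = Counter(data)
    if any(not 0 <= x < k for x in counts):
        raise ValueError("observation outside the category range")
    # Conjugacy: the posterior is Dir(alpha_k + n_k).
    posterior = [alpha[j] + counts[j] for j in range(k)]
    # Predictive: P(x_new = j | data) = (alpha_j + n_j) / (alpha_0 + n).
    total = sum(posterior)
    predictive = [p / total for p in posterior]
    return posterior, predictive


post, pred = dirichlet_categorical_update([1, 1, 1], [0, 0, 1])
print(post)  # [3, 2, 1]
print(pred)
```

The prior parameters act as pseudo-counts. With α = (1, 1, 1), the unseen category 2 still gets predictive probability 1/6 rather than 0.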

Value distribution of long Dirichlet polynomials and applications to the Riemann...-Maksym Radziwill

Maksym Radziwill: Value distribution of long Dirichlet polynomials and applications to the Riemann zeta-function. Stanford University; Member, School of Mathematics. October 1, 2013. For more videos, visit http://video.ias.edu

From playlist Mathematics

(ML 7.7) Dirichlet-Categorical model (part 1)

The Dirichlet distribution is a conjugate prior for the Categorical distribution (i.e., a PMF on a finite set). We derive the posterior distribution and the (posterior) predictive distribution under this model.

From playlist Machine Learning
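The posterior and predictive derivations mentioned above can be written compactly. This sketch uses the notation θ ~ Dir(α), x_1, …, x_n i.i.d. Categorical(θ), and n_k = #{i : x_i = k}, which is introduced here for illustration. Multiplying the prior by the likelihood collects the exponents of each θ_k:

```latex
p(\theta \mid x_{1:n}) \propto p(\theta) \prod_{i=1}^{n} \theta_{x_i}
  \propto \prod_{k=1}^{K} \theta_k^{\alpha_k - 1} \prod_{k=1}^{K} \theta_k^{n_k}
  = \prod_{k=1}^{K} \theta_k^{\alpha_k + n_k - 1}
\quad\Longrightarrow\quad
\theta \mid x_{1:n} \sim \mathrm{Dir}(\alpha_1 + n_1, \dots, \alpha_K + n_K)

p(x_{n+1} = k \mid x_{1:n}) = \int \theta_k \, p(\theta \mid x_{1:n}) \, d\theta
  = \mathbb{E}[\theta_k \mid x_{1:n}]
  = \frac{\alpha_k + n_k}{\alpha_0 + n},
\qquad \alpha_0 = \sum_{j=1}^{K} \alpha_j
```

The last step uses the Dirichlet mean α_k / α0 applied to the updated parameters.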

Multivariate Gaussian distributions

Properties of the multivariate Gaussian probability distribution

From playlist cs273a
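One standard property of the multivariate Gaussian is that its conditionals are again Gaussian. In two dimensions, p(x1 | x2) has mean μ1 + (Σ12 / Σ22)(x2 − μ2) and variance Σ11 − Σ12² / Σ22. Below is a minimal stdlib sketch of that property together with the 2-D density. The function names are hypothetical, and the code is restricted to the bivariate case so that the 2×2 inverse can be written in closed form.

```python
import math


def bivariate_conditional(mu, cov, x2):
    """Mean and variance of p(x1 | x2) for (x1, x2) ~ N(mu, cov), cov = [[s11, s12], [s12, s22]]."""
    (s11, s12), (s21, s22) = cov
    if s12 != s21:
        raise ValueError("covariance matrix must be symmetric")
    if s22 <= 0 or s11 * s22 - s12 * s12 <= 0:
        raise ValueError("covariance matrix must be positive definite")
    cond_mean = mu[0] + s12 / s22 * (x2 - mu[1])
    cond_var = s11 - s12 * s12 / s22
    return cond_mean, cond_var


def bivariate_pdf(x, mu, cov):
    """Density of the 2-D Gaussian N(mu, cov) at the point x."""
    (s11, s12), (_, s22) = cov
    det = s11 * s22 - s12 * s12
    d0, d1 = x[0] - mu[0], x[1] - mu[1]
    # Quadratic form d^T cov^{-1} d using the closed-form 2x2 inverse.
    q = (s22 * d0 * d0 - 2 * s12 * d0 * d1 + s11 * d1 * d1) / det
    return math.exp(-0.5 * q) / (2 * math.pi * math.sqrt(det))


print(bivariate_conditional((0.0, 0.0), [[1.0, 0.5], [0.5, 1.0]], 1.0))  # (0.5, 0.75)
```

Observing x2 shifts the mean of x1 in proportion to the covariance and always reduces its variance, which does not depend on the observed value.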

Topic Models: Variational Inference for Latent Dirichlet Allocation (with Xanda Schofield)

This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course: https://sites.google.com/umd.edu/2021cl1webpage/ (includes homework and readings). Xanda's webpage: https://www.cs.hmc.edu/~xanda

From playlist Computational Linguistics I

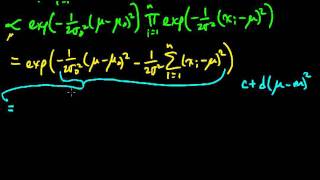

(ML 7.10) Posterior distribution for univariate Gaussian (part 2)

Computing the posterior distribution for the mean of the univariate Gaussian, with a Gaussian prior (assuming a known prior mean and known variances). The posterior is Gaussian, showing that the Gaussian is a conjugate prior for the mean of a Gaussian.

From playlist Machine Learning
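The conjugate update described above reduces to adding precisions (inverse variances) and taking a precision-weighted average of the prior mean and the data. Below is a minimal stdlib sketch. It assumes a prior μ ~ N(mu0, var0) and observations x_i ~ N(μ, var), with var0 and var known. The function and parameter names are hypothetical.

```python
def gaussian_mean_posterior(mu0, var0, var, data):
    """Posterior (mean, variance) for the mean of N(mu, var) under the prior mu ~ N(mu0, var0).

    Both the prior variance var0 and the likelihood variance var are assumed known.
    """
    n = len(data)
    # Precisions add: prior precision plus n times the per-observation precision.
    precision_n = 1.0 / var0 + n / var
    var_n = 1.0 / precision_n
    # The posterior mean is a precision-weighted combination of mu0 and the data sum.
    mu_n = var_n * (mu0 / var0 + sum(data) / var)
    return mu_n, var_n


print(gaussian_mean_posterior(0.0, 1.0, 1.0, [2.0]))  # (1.0, 0.5)
```

As n grows, the data term dominates. The posterior mean approaches the sample mean, and the posterior variance shrinks roughly like var / n.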

Index theorems for nodal count and a lateral variation principle - Gregory Berkolaiko

Analysis Seminar. Topic: Index theorems for nodal count and a lateral variation principle. Speaker: Gregory Berkolaiko. Affiliation: Texas A&M University. Date: February 01, 2021. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Jeremy Quastel (Toronto) -- Convergence of finite range exclusions to the KPZ fixed point

We will describe a method of comparison with TASEP which proves that both the KPZ equation and finite range exclusion models converge to the KPZ fixed point. For the KPZ equation and the nearest neighbour exclusion, the initial data is allowed to be a continuous function plus a finite num

From playlist Columbia Probability Seminar

Continuous Distributions: Beta and Dirichlet Distributions

Video Lecture from the course INST 414: Advanced Data Science at UMD's iSchool. Full course information here: http://www.umiacs.umd.edu/~jbg/teaching/INST_414/

From playlist Advanced Data Science

(Original Paper) Latent Dirichlet Allocation (discussions) | AISC Foundational

Toronto Deep Learning Series, 15 November 2018. Paper review: http://www.jmlr.org/papers/volume3/blei03a/blei03a.pdf Speaker: Renyu Li (Wysdom.ai). Host: Munich Reinsurance Co-Canada. Date: Nov 15th, 2018. Latent Dirichlet Allocation: We describe latent Dirichlet allocation (LDA), a genera

From playlist Natural Language Processing
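LDA is a generative model. Each document draws its own topic proportions from a Dirichlet, then each word draws a topic and a word from that topic's distribution. Below is a minimal stdlib sketch of this generative process, with two labeled simplifications. First, every document has a fixed length (the original paper draws the length from a Poisson). Second, topic-word distributions are drawn from a symmetric Dirichlet(eta), as in the smoothed variant. All function and parameter names are hypothetical.

```python
import random


def sample_dirichlet(alpha, rng):
    """Dirichlet draw via normalized Gamma(alpha_k, 1) variables."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    s = sum(g)
    return [x / s for x in g]


def generate_corpus(num_docs, doc_len, num_topics, vocab_size, alpha, eta, seed=0):
    """Sample documents from an LDA-style generative process with symmetric priors.

    Returns a list of documents, each a list of word ids in range(vocab_size).
    """
    rng = random.Random(seed)
    # One word distribution per topic: beta_k ~ Dir(eta) (smoothed-LDA assumption).
    topics = [sample_dirichlet([eta] * vocab_size, rng) for _ in range(num_topics)]
    corpus = []
    for _ in range(num_docs):
        # Per-document topic proportions: theta_d ~ Dir(alpha).
        theta = sample_dirichlet([alpha] * num_topics, rng)
        doc = []
        for _ in range(doc_len):  # fixed length instead of a Poisson draw
            z = rng.choices(range(num_topics), weights=theta)[0]  # topic assignment
            w = rng.choices(range(vocab_size), weights=topics[z])[0]  # word from topic z
            doc.append(w)
        corpus.append(doc)
    return corpus


corpus = generate_corpus(num_docs=3, doc_len=10, num_topics=2, vocab_size=20, alpha=0.5, eta=0.1)
print(corpus[0])
```

Inference algorithms for LDA, such as the variational method in the paper, invert this process by recovering θ and the topics from observed words alone.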

Radek Adamczak: Functional inequalities and concentration of measure III

Concentration inequalities are one of the basic tools of probability and asymptotic geometric analysis, underlying the proofs of limit theorems and existential results in high dimensions. Original arguments leading to concentration estimates were based on isoperimetric inequalities, whic

From playlist Winter School on the Interplay between High-Dimensional Geometry and Probability

(Original Paper) Latent Dirichlet Allocation (algorithm) | AISC Foundational

Toronto Deep Learning Series, 15 November 2018. Paper review: http://www.jmlr.org/papers/volume3/blei03a/blei03a.pdf Speaker: Renyu Li (Wysdom.ai). Host: Munich Reinsurance Co-Canada. Date: Nov 15th, 2018. Latent Dirichlet Allocation: We describe latent Dirichlet allocation (LDA), a genera

From playlist Natural Language Processing

Variational formulae for the capacity induced elliptic differential operators – C. Landim – ICM2018

Mathematical Physics | Probability and Statistics. Invited Lecture 11.9 | 12.12. Variational formulae for the capacity induced by second-order elliptic differential operators. Claudio Landim. Abstract: We review recent progress in potential theory of second-order elliptic operators and on th

From playlist Mathematical Physics

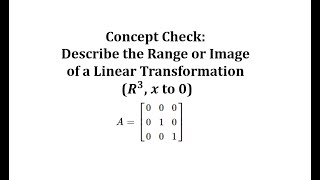

Concept Check: Describe the Range or Image of a Linear Transformation (R3, x to 0)

This video explains how to describe the image or range of a linear transformation.

From playlist Matrix (Linear) Transformations
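For a matrix transformation T(x) = Ax, the image (range) is the column space of A, and its dimension is rank(A). If the map in the title is the zero transformation T: ℝ³ → ℝ³, T(x) = 0 (the codomain ℝ³ is an assumption), then A is the zero matrix, its rank is 0, and the image is just {0}. Below is a minimal stdlib sketch that computes the rank by exact Gaussian elimination. The function name `rank` is hypothetical.

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix (list of rows) by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    if not rows:
        return 0
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0  # index of the next pivot row
    for c in range(n_cols):
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate column c from every row below the pivot row.
        for i in range(r + 1, n_rows):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r


zero_map = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
print(rank(zero_map))  # 0
```

Exact fractions avoid the floating-point tolerance question of deciding when a pivot is "zero".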

Henrik Hult: Power-laws and weak convergence of the Kingman coalescent

The Kingman coalescent is a fundamental process in population genetics modelling the ancestry of a sample of individuals backwards in time. In this paper, weak convergence is proved for a sequence of Markov chains consisting of two components related to the Kingman coalescent, under a pare

From playlist Probability and Statistics
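As the description above says, the Kingman coalescent traces the ancestry of a sample backwards in time. While k lineages remain, each of the k(k−1)/2 pairs merges at rate 1, so the waiting time to the next merger is exponential with that rate. Summing these times gives the time to the most recent common ancestor, whose expectation is 2(1 − 1/n) in coalescent time units. Below is a minimal stdlib Monte Carlo sketch that tracks only merger times, not the tree topology. The function name `kingman_tmrca` is hypothetical, and the simulation is unrelated to the paper's weak-convergence argument.

```python
import random


def kingman_tmrca(n, rng):
    """Time to the most recent common ancestor of n lineages under Kingman's coalescent.

    While k lineages remain, each of the k*(k-1)/2 pairs merges at rate 1,
    so the waiting time to the next merger is Exponential(k*(k-1)/2).
    """
    t = 0.0
    for k in range(n, 1, -1):
        t += rng.expovariate(k * (k - 1) / 2)
    return t


rng = random.Random(42)
n, reps = 10, 20000
estimate = sum(kingman_tmrca(n, rng) for _ in range(reps)) / reps
print(estimate, 2 * (1 - 1 / n))  # Monte Carlo estimate vs. exact expectation 1.8
```

The final merger (k = 2) has mean waiting time 1, which accounts for more than half of the expected total.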

Model-based clustering of high-dimensional data: Pitfalls & solutions - David Dunson

Virtual Workshop on Missing Data Challenges in Computation, Statistics and Applications. Topic: Model-based clustering of high-dimensional data: Pitfalls & solutions. Speaker: David Dunson. Date: September 9, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Statistics: Ch 7 Sample Variability (3 of 14) The Inference of the Sample Distribution

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 We will learn that if the sample size is greater than or equal to 25, then: 1) the distribution of the sample means is a normal distr

From playlist STATISTICS CH 7 SAMPLE VARIABILILTY
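The claim above about the distribution of sample means can be checked by simulation. Below is a minimal stdlib sketch, assuming a Uniform(0, 1) population (σ = 1/√12) and samples of size 25, which matches the lecture's rule-of-thumb threshold. It compares the center and spread of the simulated sample means with μ and σ/√n. It also checks that about 68% of them fall within one standard error, as a normal shape would predict. The helper name `sample_means` is hypothetical.

```python
import random
import statistics


def sample_means(draw, n, reps, rng):
    """Means of `reps` independent samples of size n, each value drawn by draw(rng)."""
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(reps)]


rng = random.Random(7)
n, reps = 25, 20000
means = sample_means(lambda r: r.random(), n, reps, rng)  # population: Uniform(0, 1)
sigma = (1 / 12) ** 0.5  # population standard deviation of Uniform(0, 1)
se = sigma / n ** 0.5  # standard error of the mean
print(statistics.fmean(means), 0.5)  # center of the sampling distribution vs. population mean
print(statistics.stdev(means), se)  # spread vs. sigma / sqrt(n)
within = sum(abs(m - 0.5) < se for m in means) / reps
print(within)  # close to 0.683 if the sampling distribution is approximately normal
```

Even though the uniform population is far from bell-shaped, its sample means already behave very much like a normal distribution at n = 25.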

(ML 7.9) Posterior distribution for univariate Gaussian (part 1)

Computing the posterior distribution for the mean of the univariate Gaussian, with a Gaussian prior (assuming a known prior mean and known variances). The posterior is Gaussian, showing that the Gaussian is a conjugate prior for the mean of a Gaussian.

From playlist Machine Learning
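The conjugacy result described above comes from completing the square in μ. This sketch uses notation introduced here for illustration: prior μ ~ N(μ0, σ0²) and observations x_1, …, x_n i.i.d. N(μ, σ²), with σ0² and σ² known. Dropping terms that do not involve μ:

```latex
p(\mu \mid x_{1:n})
  \propto \exp\!\Big(-\frac{(\mu - \mu_0)^2}{2\sigma_0^2}\Big)
          \prod_{i=1}^{n} \exp\!\Big(-\frac{(x_i - \mu)^2}{2\sigma^2}\Big)
  \propto \exp\!\Big(-\frac{1}{2}\Big[\Big(\frac{1}{\sigma_0^2} + \frac{n}{\sigma^2}\Big)\mu^2
          - 2\Big(\frac{\mu_0}{\sigma_0^2} + \frac{\sum_{i=1}^{n} x_i}{\sigma^2}\Big)\mu\Big]\Big)

\text{Completing the square gives } \mu \mid x_{1:n} \sim \mathcal{N}(\mu_n, \sigma_n^2), \quad
\frac{1}{\sigma_n^2} = \frac{1}{\sigma_0^2} + \frac{n}{\sigma^2}, \qquad
\mu_n = \sigma_n^2 \Big(\frac{\mu_0}{\sigma_0^2} + \frac{\sum_{i=1}^{n} x_i}{\sigma^2}\Big)
```

The exponent is a quadratic in μ with a negative leading coefficient, which is exactly the form of a Gaussian log-density. That is why the posterior stays Gaussian.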

Francesca Da Lio: Analysis of nonlocal conformal invariant variational problems, Lecture II

There has been a lot of interest in recent years in the analysis of free-boundary minimal surfaces. In the first part of the course we will recall some facts about conformally invariant problems in 2D and some aspects of the integrability by compensation theory. In the second part we will sho

From playlist Hausdorff School: Trending Tools