(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning
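As a concrete instance of the definition, here is a minimal Python sketch of the first example in the ML 14.2 description, the simple random walk on the integers. The function name `random_walk` and the step probability `p` are illustrative assumptions, not taken from the lecture. Each step moves up with probability `p` and down otherwise, using only the current position.

```python
import random


def random_walk(n_steps, p=0.5, start=0, seed=None):
    """Simple random walk on the integers: from state x, move to x + 1 with
    probability p and to x - 1 with probability 1 - p."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        # The next state depends only on the current state (the Markov property).
        step = 1 if rng.random() < p else -1
        path.append(path[-1] + step)
    return path


print(random_walk(10, seed=0))
```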

Brain Teasers: 10. Winning in a Markov chain

In this exercise we use the absorbing equations for Markov chains to solve a simple game between two players. The Zoom connection was unstable, so there are a few audio problems. Sorry.

From playlist Brain Teasers and Quant Interviews
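The game itself is not described in the text above, so this Python sketch substitutes a hypothetical two-player game (player A wins each round with probability 2/3, and the first player to lead by two points wins) to show how absorbing equations can be solved. For each transient state i, the probability h_i of ending in A's winning state satisfies h_i = Σ_j P_ij h_j, with h = 1 at that state and h = 0 at the other absorbing state. The helper name `absorption_probabilities` is illustrative, not from the video.

```python
from fractions import Fraction


def absorption_probabilities(P, transient, target):
    """Solve h[i] = sum_j P[i][j] * h[j] for every transient state i, where
    h = 1 at the absorbing state `target` and h = 0 at any other absorbing state.
    P maps each transient state to {next_state: probability}."""
    idx = {s: k for k, s in enumerate(transient)}
    n = len(transient)
    # Rearranged as the linear system (I - Q) h = b, with b[i] = P[i][target].
    A = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
    b = [Fraction(0)] * n
    for s in transient:
        for t, prob in P[s].items():
            if t in idx:
                A[idx[s]][idx[t]] -= prob
            elif t == target:
                b[idx[s]] += prob
    # Gauss-Jordan elimination in exact arithmetic (I - Q is invertible
    # whenever absorption is certain from every transient state).
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col] / A[col][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
                b[r] -= f * b[col]
    return {s: b[idx[s]] / A[idx[s]][idx[s]] for s in transient}


# Hypothetical game: the state is A's lead in points; the game ends when the
# lead reaches +2 (A wins) or -2 (B wins). A wins each round with probability 2/3.
p = Fraction(2, 3)
P = {lead: {lead + 1: p, lead - 1: 1 - p} for lead in (-1, 0, 1)}
h = absorption_probabilities(P, transient=[-1, 0, 1], target=2)
print(h[0])  # 4/5
```

Exact fractions keep the answer in closed form, which is convenient when checking a hand calculation of the absorbing equations.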

Prob & Stats - Markov Chains (8 of 38) What is a Stochastic Matrix?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a stochastic matrix is. Next video in the Markov Chains series: http://youtu.be/YMUwWV1IGdk

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes
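A stochastic matrix, in the row convention assumed here, is a square matrix with nonnegative entries whose rows each sum to 1 (some texts use the column convention instead). A minimal Python check, with an arbitrarily chosen floating-point tolerance `tol`:

```python
def is_stochastic(P, tol=1e-12):
    """Return True if P is a row-stochastic matrix: square, every entry
    nonnegative, and every row summing to 1 (within tol)."""
    n = len(P)
    if any(len(row) != n for row in P):
        return False  # not square
    for row in P:
        if any(x < 0 for x in row):
            return False  # probabilities cannot be negative
        if abs(sum(row) - 1) > tol:
            return False  # each row is a probability distribution
    return True


print(is_stochastic([[0.9, 0.1], [0.5, 0.5]]))  # True
```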

Intro to Markov Chains & Transition Diagrams

Markov Chains or Markov Processes are an extremely powerful tool from probability and statistics. They represent a statistical process that happens over and over again, where we try to predict the future state of a system. A Markov process is one where the probability of the future ONLY de

From playlist Discrete Math (Full Course: Sets, Logic, Proofs, Probability, Graph Theory, etc)
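A transition diagram and a transition matrix carry the same information. As a sketch, assuming the diagram is stored as a dictionary of outgoing arrows (a hypothetical representation chosen for illustration), it can be converted into a matrix like this:

```python
def diagram_to_matrix(diagram):
    """Convert a transition diagram {state: {next_state: probability}} into
    (states, P), where P[i][j] is the probability of moving from states[i] to
    states[j]. Arrows that are not drawn get probability 0, so a state with
    no outgoing arrows ends up with an all-zero row."""
    # Collect every state that appears as a source or as a target of an arrow.
    states = sorted(set(diagram) | {t for arrows in diagram.values() for t in arrows})
    index = {s: i for i, s in enumerate(states)}
    P = [[0.0] * len(states) for _ in states]
    for s, arrows in diagram.items():
        for t, prob in arrows.items():
            P[index[s]][index[t]] = prob
    return states, P


# Hypothetical three-state diagram; C is absorbing (a loop back to itself).
diagram = {
    "A": {"A": 0.5, "B": 0.5},
    "B": {"A": 0.2, "C": 0.8},
    "C": {"C": 1.0},
}
states, P = diagram_to_matrix(diagram)
print(states)  # ['A', 'B', 'C']
print(P)       # [[0.5, 0.5, 0.0], [0.2, 0.0, 0.8], [0.0, 0.0, 1.0]]
```

Sorting the state names fixes the row and column order, so the same diagram always yields the same matrix.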

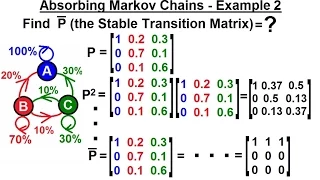

Prob & Stats - Markov Chains (22 of 38) Absorbing Markov Chains - Example 2

Visit http://ilectureonline.com for more math and science lectures! In this video I will find the stable transition matrix in an absorbing Markov chain. Next video in the Markov Chains series: http://youtu.be/hMceS_HIcKY

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes
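One common way to obtain the limiting ("stable") matrix of an absorbing chain, which may or may not match the steps used in the video, is through the fundamental matrix. With the chain in standard form, Q holding the transient-to-transient probabilities and R the transient-to-absorbing ones, F = (I - Q)^-1, and F R gives the long-run absorption probabilities that fill the transient rows of the limiting matrix. A Python sketch with exact fractions, tried on a hypothetical symmetric random walk on {0, 1, 2, 3, 4} absorbed at 0 and 4:

```python
from fractions import Fraction


def inverse(M):
    """Invert a square matrix of Fractions by Gauss-Jordan elimination."""
    n = len(M)
    # Augment M with the identity matrix: [M | I].
    A = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        piv = A[col][col]
        A[col] = [x / piv for x in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
    return [row[n:] for row in A]


def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def limiting_absorption(Q, R):
    """Return F = (I - Q)^-1 and F R. Entry (i, j) of F R is the probability of
    ending in absorbing state j when starting from transient state i; row i of F
    sums to the expected number of steps before absorption from state i."""
    n = len(Q)
    I_minus_Q = [[Fraction(int(i == j)) - Q[i][j] for j in range(n)] for i in range(n)]
    F = inverse(I_minus_Q)
    return F, matmul(F, R)


h = Fraction(1, 2)
Q = [[0, h, 0], [h, 0, h], [0, h, 0]]   # transient states 1, 2, 3
R = [[h, 0], [0, 0], [0, h]]            # absorbing states 0 and 4
F, FR = limiting_absorption(Q, R)
print([[str(v) for v in row] for row in FR])  # [['3/4', '1/4'], ['1/2', '1/2'], ['1/4', '3/4']]
```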

(ML 14.1) Markov models - motivating examples

Introduction to Markov models, using intuitive examples of applications, and motivating the concept of the Markov chain.

From playlist Machine Learning

Prob & Stats - Markov Chains (2 of 38) Markov Chains: An Introduction (Another Method)

Visit http://ilectureonline.com for more math and science lectures! In this video I will introduce an alternative method of solving the Markov chain. Next video in the Markov Chains series: http://youtu.be/ECrsoUtsKq0

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

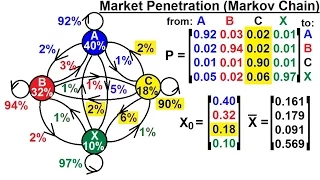

Prob & Stats - Markov Chains (6 of 38) Markov Chain Applied to Market Penetration

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain how a Markov chain can be used to model the market penetration of a new product. Next video in the Markov Chains series: http://youtu.be/KBCZ7o8XLKU

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes
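The market-penetration idea amounts to updating a row vector of market shares with x_{n+1} = x_n P each period. The two-state setup and all the numbers below are hypothetical, not taken from the video:

```python
from fractions import Fraction


def next_shares(x, P):
    """One period of the chain: x_next[j] = sum_i x[i] * P[i][j]."""
    return [sum(x[i] * P[i][j] for i in range(len(x))) for j in range(len(P[0]))]


def share_history(x0, P, periods):
    """Market shares for periods 0, 1, ..., periods."""
    history = [list(x0)]
    for _ in range(periods):
        history.append(next_shares(history[-1], P))
    return history


# Hypothetical numbers: each period, 80% of the new product's customers stay and
# 20% switch to the competitor; 30% of the competitor's customers switch over.
P = [[Fraction(4, 5), Fraction(1, 5)],
     [Fraction(3, 10), Fraction(7, 10)]]
x0 = [Fraction(1, 10), Fraction(9, 10)]  # the new product starts with 10% of the market
for n, x in enumerate(share_history(x0, P, 3)):
    print(n, [float(v) for v in x])
```

With these numbers the shares move toward the stationary split [3/5, 2/5], which the update leaves unchanged.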

(ML 14.3) Markov chains (discrete-time) (part 2)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning
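For the weather example, a short simulation shows the Markov property in action: each day's weather is drawn using only the previous day's. The two states and their transition probabilities in `WEATHER` are assumptions for illustration, not the lecture's numbers.

```python
import random

# Hypothetical transition probabilities for a two-state weather model.
WEATHER = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def simulate_weather(days, start="sunny", seed=None):
    """Return a list of `days` weather states (days >= 1), starting from `start`."""
    rng = random.Random(seed)
    seq = [start]
    for _ in range(days - 1):
        arrows = WEATHER[seq[-1]]
        # Tomorrow's weather is drawn using only today's weather.
        seq.append(rng.choices(list(arrows), weights=list(arrows.values()))[0])
    return seq


days = simulate_weather(100_000, seed=42)
print(days.count("sunny") / len(days))
```

With these probabilities the long-run fraction of sunny days is 5/6, the solution of π_sunny · 0.1 = π_rainy · 0.5 with π_sunny + π_rainy = 1, and the simulated frequency should land close to it.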

Johannes Rauh: Geometry of policy improvement

Recorded during the thematic meeting "Geometrical and Topological Structures of Information" on September 1, 2017, at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent

From playlist Geometry

Fast learning of small strategies: Jan Křetínský, Technical University of Munich

In verification, precise analysis is required, but the algorithms usually suffer from scalability issues. In machine learning, scalability is achieved, but with only very weak guarantees. We show how to merge the two philosophies and profit from both. In this talk, we focus on analysing Ma

From playlist Logic and learning workshop

Marcelo Pereyra: Bayesian inference and mathematical imaging - Lecture 2: Markov chain Monte Carlo

Bayesian inference and mathematical imaging - Part 2: Markov chain Monte Carlo Abstract: This course presents an overview of modern Bayesian strategies for solving imaging inverse problems. We will start by introducing the Bayesian statistical decision theory framework underpinning Bayesi

From playlist Probability and Statistics

Formal verification and learning of complex systems - Professor Alessandro Abate

For slides, future Logic events and more, please visit: https://logic-data-science.github.io/?page=logic_learning Two known shortcomings of standard techniques in formal verification are the limited capability to provide system-level assertions, and the scalability to large-scale, complex

From playlist Logic and learning workshop

Research talks by Nisheeth Vishnoi

Second Bangalore School on Population Genetics and Evolution URL: http://www.icts.res.in/program/popgen2016 DESCRIPTION: Just as evolution is central to our understanding of biology, population genetics theory provides the basic framework to comprehend evolutionary processes. Population

From playlist Second Bangalore School on Population Genetics and Evolution

Statistical Rethinking Winter 2019 Lecture 10

Lecture 10 of the Dec 2018 through March 2019 edition of Statistical Rethinking: A Bayesian Course with R and Stan. This lecture covers Chapter 9, Markov Chain Monte Carlo.

From playlist Statistical Rethinking Winter 2019
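Chapter 9 of Statistical Rethinking covers several MCMC algorithms; the simplest, random-walk Metropolis, fits in a few lines. This Python sketch is generic (it is not the book's R or Stan code), and the Gaussian proposal with scale `step` is an assumed choice:

```python
import math
import random


def metropolis(log_target, x0, n_samples, step=1.0, seed=None):
    """Random-walk Metropolis: propose x' = x + Normal(0, step) and accept it
    with probability min(1, target(x') / target(x))."""
    rng = random.Random(seed)
    x, lp = x0, log_target(x0)
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        lp_prop = log_target(proposal)
        # Compare on the log scale; 1 - random() lies in (0, 1], so log is safe.
        if math.log(1.0 - rng.random()) < lp_prop - lp:
            x, lp = proposal, lp_prop
        samples.append(x)  # a rejected proposal repeats the current state
    return samples


# Target: standard normal, log density known only up to an additive constant.
samples = metropolis(lambda x: -0.5 * x * x, x0=0.0, n_samples=10_000, seed=0)
print(sum(samples) / len(samples))  # should be near 0
```

Because only differences of log densities are used, `log_target` may omit normalizing constants, which is what makes the method practical for Bayesian posteriors.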

Dana Randall: Sampling algorithms and phase transitions

Markov chain Monte Carlo methods have become ubiquitous across science and engineering to model dynamics and explore large combinatorial sets. Over the last 20 years there have been tremendous advances in the design and analysis of efficient sampling algorithms for this purpose. One of the

From playlist Probability and Statistics
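The abstract is cut off before naming a specific chain, but one classic example from this area is Glauber (heat-bath) dynamics for the hard-core model, which samples independent sets I of a graph with probability proportional to λ^|I|. A Python sketch; the adjacency-dict input and the name `glauber_independent_sets` are illustrative assumptions:

```python
import random


def glauber_independent_sets(adj, lam, steps, seed=None):
    """Heat-bath Glauber dynamics for the hard-core model.
    adj maps each vertex to the set of its neighbours. Each step picks a
    uniformly random vertex v; with probability lam / (1 + lam) it tries to put
    v in the set (allowed only if no neighbour is occupied), otherwise it
    removes v. The stationary distribution weights independent set I by
    lam ** len(I)."""
    rng = random.Random(seed)
    vertices = list(adj)
    occupied = set()  # start from the empty independent set
    for _ in range(steps):
        v = rng.choice(vertices)
        if rng.random() < lam / (1 + lam):
            if not (adj[v] & occupied):
                occupied.add(v)
        else:
            occupied.discard(v)
    return occupied


cycle = {i: {(i - 1) % 8, (i + 1) % 8} for i in range(8)}  # 8-cycle
print(sorted(glauber_independent_sets(cycle, lam=2.0, steps=1000, seed=0)))
```

Every state the chain visits is an independent set; how the mixing time depends on λ is the kind of question where phase transitions enter.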

Some Equations and Games in Evolutionary Biology - Christine Taylor

Some Equations and Games in Evolutionary Biology. Christine Taylor, Harvard University; Member, School of Mathematics. February 14, 2011. The basic ingredients of Darwinian evolution, selection and mutation, are very well described by simple mathematical models. In 1973, John Maynard Smith lin

From playlist Mathematics

From playlist Contributed talks One World Symposium 2020

Markov Chains & Transition Matrices

Part 1 on Markov Chains can be found here: https://www.youtube.com/watch?v=rHdX3ANxofs&ab_channel=Dr.TreforBazett In part 2 we study transition matrices. Using a transition matrix lets us compute with Markov chains far more efficiently because determining a future state from some ini

From playlist Discrete Math (Full Course: Sets, Logic, Proofs, Probability, Graph Theory, etc)
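Computing many steps at once is where the transition matrix pays off: entry (i, j) of P^n is the probability of moving from state i to state j in exactly n steps, so a future distribution is x_n = x_0 P^n. A Python sketch using repeated squaring and exact fractions (the two-state P is a made-up example):

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matpow(P, n):
    """Compute P**n by repeated squaring; entry (i, j) of P**n is the
    probability of going from state i to state j in exactly n steps."""
    size = len(P)
    result = [[Fraction(int(i == j)) for j in range(size)] for i in range(size)]
    base = P
    while n > 0:
        if n & 1:
            result = matmul(result, base)
        base = matmul(base, base)
        n >>= 1
    return result


P = [[Fraction(1, 2), Fraction(1, 2)],
     [Fraction(1, 4), Fraction(3, 4)]]
print([[str(v) for v in row] for row in matpow(P, 2)])  # [['3/8', '5/8'], ['5/16', '11/16']]
```

Repeated squaring needs only about log2(n) matrix products instead of n. As n grows, both rows of P^n approach the stationary distribution [1/3, 2/3] for this P.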

Stefan Thonhauser: PDMPs in risk theory and QMC integration III

Virtual lecture, recorded during the meeting "Quasi-Monte Carlo Methods and Applications" on November 5, 2020, by the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference