(ML 14.3) Markov chains (discrete-time) (part 2)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

Prob & Stats - Markov Chains (8 of 38) What is a Stochastic Matrix?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a stochastic matrix is. Next video in the Markov Chains series: http://youtu.be/YMUwWV1IGdk

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

(ML 18.4) Examples of Markov chains with various properties (part 1)

A very simple example of a Markov chain with two states, to illustrate the concepts of irreducibility, aperiodicity, and stationary distributions.

From playlist Machine Learning
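
As a rough companion to the two-state example described above, here is a minimal sketch of a stationary distribution. The transition probabilities `a` and `b` are hypothetical values chosen for illustration, not taken from the video. With both strictly between 0 and 1 the chain is irreducible and aperiodic, so the rows of the matrix powers should approach the stationary distribution.

```python
import numpy as np

# Hypothetical transition probabilities (assumptions, not from the video):
# a = P(state 0 -> state 1), b = P(state 1 -> state 0).
a, b = 0.3, 0.6

# Row-stochastic transition matrix: each row sums to 1.
P = np.array([[1 - a, a],
              [b, 1 - b]])

def stationary_two_state(a, b):
    """Closed-form stationary distribution of a two-state chain with a + b > 0."""
    return np.array([b, a]) / (a + b)

pi = stationary_two_state(a, b)

# Compare with the first row of a high power of P, i.e. the distribution
# after many steps when starting in state 0.
pi_from_powers = np.linalg.matrix_power(P, 50)[0]
```

Here `pi` satisfies `pi @ P == pi` up to rounding, which is the defining property of a stationary distribution.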

Prob & Stats - Markov Chains (10 of 38) Regular Markov Chain

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a regular Markov chain is. Next video in the Markov Chains series: http://youtu.be/DeG8MlORxRA

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

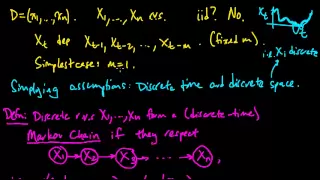

(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

11e Machine Learning: Markov Chain Monte Carlo

A lecture on the basics of Markov Chain Monte Carlo for sampling posterior distributions. For many Bayesian methods we must sample to explore the posterior. Here are some basics.

From playlist Machine Learning

Markov Chains : Data Science Basics

The basics of Markov Chains, one of my ALL TIME FAVORITE objects in data science.

From playlist Data Science Basics

Matrix Limits and Markov Chains

In this video I present a cool application of linear algebra in which I use diagonalization to calculate the eventual outcome of a mixing problem. This process is a simple example of what's called a Markov chain. Note: I just got a new tripod and am still experimenting with it; sorry if t

From playlist Eigenvalues
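
The diagonalization idea mentioned above can be sketched as follows. The 2x2 mixing matrix `A` is a hypothetical example, not the one used in the video, and the sketch assumes `A` is diagonalizable with eigenvalue 1 and all other eigenvalues of modulus below 1, so that their powers vanish in the limit.

```python
import numpy as np

# Hypothetical column-stochastic mixing matrix (an assumption for illustration):
# each column sums to 1.
A = np.array([[0.9, 0.2],
              [0.1, 0.8]])

# Diagonalize: A = V D V^{-1}, with the eigenvalues on the diagonal of D.
eigvals, V = np.linalg.eig(A)

# As k grows, D^k keeps the eigenvalue 1 and sends every eigenvalue
# of modulus below 1 to 0.
limit_eigs = np.where(np.isclose(eigvals, 1.0), 1.0, 0.0)

# Limit of A^k, assembled from the limiting diagonal matrix.
A_limit = (V @ np.diag(limit_eigs) @ np.linalg.inv(V)).real
```

Every column of `A_limit` is the same vector, the eventual split of the mixture regardless of the starting state.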

Cécile Mailler: Measure-valued Pólya processes

Abstract: A Pólya urn is a stochastic process describing the composition of an urn containing balls of different colours. The set of colours is usually a finite set {1, ..., d}. At each time n, a ball is drawn uniformly at random from the urn (noton

From playlist Probability and Statistics

18. Countable-state Markov Chains and Processes

MIT 6.262 Discrete Stochastic Processes, Spring 2011. View the complete course: http://ocw.mit.edu/6-262S11. Instructor: Robert Gallager. License: Creative Commons BY-NC-SA. More information at http://ocw.mit.edu/terms. More courses at http://ocw.mit.edu

From playlist MIT 6.262 Discrete Stochastic Processes, Spring 2011

19. Countable-state Markov Processes

MIT 6.262 Discrete Stochastic Processes, Spring 2011. View the complete course: http://ocw.mit.edu/6-262S11. Instructor: Robert Gallager. License: Creative Commons BY-NC-SA. More information at http://ocw.mit.edu/terms. More courses at http://ocw.mit.edu

From playlist MIT 6.262 Discrete Stochastic Processes, Spring 2011

Zeros of polynomials, decay of correlations, and algorithms by Piyush Srivastava

Discussion Meeting: Statistical Physics of Machine Learning. Organizers: Chandan Dasgupta, Abhishek Dhar and Satya Majumdar. Date: 06 January 2020 to 10 January 2020. Venue: Madhava Lecture Hall, ICTS Bangalore. Machine learning techniques, especially “deep learning” using multilayer n

From playlist Statistical Physics of Machine Learning 2020

Tom Hutchcroft: Interlacements and the uniform spanning forest

Abstract: The Aldous-Broder algorithm allows one to sample the uniform spanning tree of a finite graph as the set of first-entry edges of a simple random walk. In this talk, I will discuss how this can be extended to infinite transient graphs by replacing the random walk with the random in

From playlist Probability and Statistics
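
For the finite-graph case mentioned in the abstract above, the Aldous-Broder algorithm can be sketched as below. This is a minimal illustration assuming a connected, undirected graph given as an adjacency dictionary. The function name `aldous_broder` and the 4-cycle example are hypothetical, and the sketch does not cover the extension to infinite graphs discussed in the talk.

```python
import random

def aldous_broder(adj, seed=None):
    """Sample a spanning tree of a connected undirected graph.

    adj maps each vertex to a list of its neighbours. The tree is the set
    of first-entry edges of a simple random walk: the edge used the first
    time each vertex is visited.
    """
    rng = random.Random(seed)
    current = rng.choice(sorted(adj))  # arbitrary starting vertex
    visited = {current}
    tree = []
    while len(visited) < len(adj):
        nxt = rng.choice(adj[current])  # one step of the simple random walk
        if nxt not in visited:
            # First entry into nxt: record the edge that got us there.
            visited.add(nxt)
            tree.append((current, nxt))
        current = nxt
    return tree

# Hypothetical example graph: a cycle on four vertices.
cycle4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
tree = aldous_broder(cycle4, seed=0)
```

The returned list always has one edge fewer than the number of vertices and touches every vertex, as a spanning tree must.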

High dimensional expanders - Part 2 - Irit Dinur

Computer Science/Discrete Mathematics Seminar II Topic: High dimensional expanders - Part 2 Speaker: Irit Dinur Affiliation: Weizmann Institute of Science; Visiting Professor, School of Mathematics Date: March 24, 2020 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Johan Segers: Modelling multivariate extreme value distributions via Markov trees

CONFERENCE Recorded during the thematic meeting "Adaptive and High-Dimensional Spatio-Temporal Methods for Forecasting" on September 26, 2022 at the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks

From playlist Probability and Statistics

Prob & Stats - Markov Chains (9 of 38) What is a Regular Matrix?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a regular matrix is. Next video in the Markov Chains series: http://youtu.be/loBUEME5chQ

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes

Linear cover time is exponentially unlikely - Quentin Dubroff

Computer Science/Discrete Mathematics Seminar I Topic: Linear cover time is exponentially unlikely Speaker: Quentin Dubroff Affiliation: Rutgers University Date: March 28, 2022 Proving a 2009 conjecture of Itai Benjamini, we show: For any C, there is a constant greater than 0 such that for any s

From playlist Mathematics

Markoff surfaces and strong approximation - Alexander Gamburd

Special Seminar Topic: Markoff surfaces and strong approximation Speaker: Alexander Gamburd Affiliation: The Graduate Center, The City University of New York Date: December 8, 2017 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Yuval Peres - Breaking barriers in probability

http://www.lesprobabilitesdedemain.fr/index.html Organizers: Céline Abraham, Linxiao Chen, Pascal Maillard, Bastien Mallein and the Fondation Sciences Mathématiques de Paris

From playlist Les probabilités de demain 2016

Markov Chains Clearly Explained! Part - 1

Let's understand Markov chains and their properties with an easy example. I've also discussed the equilibrium state in great detail. #markovchain #datascience #statistics For more videos please subscribe - http://bit.ly/normalizedNERD Markov Chain series - https://www.youtube.com/playl

From playlist Markov Chains Clearly Explained!