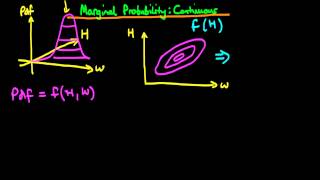

1 - Marginal probability for continuous variables

This explains what is meant by a marginal probability for continuous random variables, how to calculate marginal probabilities and the graphical intuition behind the method. If you are interested in seeing more of the material, arranged into a playlist, please visit: https://www.youtube.c

From playlist Bayesian statistics: a comprehensive course

In this video we cover the idea of marginal cost. This is simply the derivative of the cost function. We can roughly define marginal cost as the cost of producing one additional item. For more videos please visit http://www.mysecretmathtutor.com

From playlist Calculus
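
As a rough illustration of the description above, the sketch below compares the derivative of a cost function with the cost of producing one additional item. The quadratic cost function and its coefficients are hypothetical, chosen only for this example; they do not come from the video.

```python
# Hypothetical cost function (an assumption for illustration only):
# C(x) = 500 + 4x + 0.01x^2, where x is the number of items produced.
def cost(x: float) -> float:
    return 500.0 + 4.0 * x + 0.01 * x ** 2


# Marginal cost is the derivative of the cost function: C'(x) = 4 + 0.02x.
def marginal_cost(x: float) -> float:
    return 4.0 + 0.02 * x


# Exact cost of producing one additional item after x items.
def extra_item_cost(x: float) -> float:
    return cost(x + 1) - cost(x)


if __name__ == "__main__":
    x = 100
    print("marginal cost C'(x):", marginal_cost(x))
    print("cost of item x + 1: ", extra_item_cost(x))
```

For this cost function the two values are close but not identical, which is why the derivative is described as only roughly the cost of one more item.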

What is a marginal probability?

An introduction to the concept of marginal probabilities, via a simple two-dimensional discrete example. If you are interested in seeing more of the material, arranged into a playlist, please visit: https://www.youtube.com/playlist?list=PLFDbGp5YzjqXQ4oE4w9GVWdiokWB9gEpm For mo

From playlist Bayesian statistics: a comprehensive course

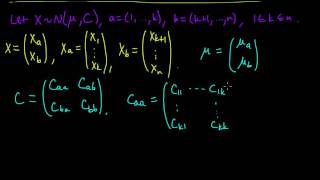

(PP 6.8) Marginal distributions of a Gaussian

For any subset of the coordinates of a multivariate Gaussian, the marginal distribution is multivariate Gaussian.

From playlist Probability Theory
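
The statement above can be written out for a two-block partition. The notation here (blocks $x_a$, $x_b$ and the partitioned mean and covariance) is an assumption of this sketch, not taken from the video:

```latex
x = \begin{pmatrix} x_a \\ x_b \end{pmatrix}
\sim \mathcal{N}\!\left(
\begin{pmatrix} \mu_a \\ \mu_b \end{pmatrix},
\begin{pmatrix} \Sigma_{aa} & \Sigma_{ab} \\ \Sigma_{ba} & \Sigma_{bb} \end{pmatrix}
\right)
\quad\Longrightarrow\quad
x_a \sim \mathcal{N}(\mu_a, \Sigma_{aa})
```

In other words, marginalizing out $x_b$ amounts to keeping the rows and columns of the mean and covariance that correspond to $x_a$.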

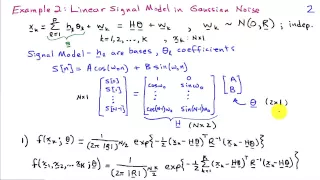

Maximum Likelihood Estimation Examples

http://AllSignalProcessing.com for more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Three examples of applying the maximum likelihood criterion to find an estimator: 1) Mean and variance of an iid Gaussian, 2) Linear signal model in

From playlist Estimation and Detection Theory

From playlist COMP0168 (2020/21)

http://mathispower4u.wordpress.com/

From playlist Business Applications of Differentiation and Relative Extrema

DeepMind x UCL | Deep Learning Lectures | 11/12 | Modern Latent Variable Models

This lecture, by DeepMind Research Scientist Andriy Mnih, explores latent variable models, a powerful and flexible framework for generative modelling. After introducing this framework along with the concept of inference, which is central to it, Andriy focuses on two types of modern latent

From playlist Learning resources

Sylvia Biscoveanu - Power Spectral Density Uncertainty and Gravitational-Wave Parameter Estimation

Recorded 19 November 2021. Sylvia Biscoveanu of the Massachusetts Institute of Technology presents "The Effect of Power Spectral Density Uncertainty on Gravitational-Wave Parameter Estimation" at IPAM's Workshop III: Source inference and parameter estimation in Gravitational Wave Astronomy

From playlist Workshop: Source inference and parameter estimation in Gravitational Wave Astronomy

From playlist COMP0168 (2020/21)

Lecture 6 | Machine Learning (Stanford)

Lecture by Professor Andrew Ng for Machine Learning (CS 229) in the Stanford Computer Science department. Professor Ng discusses the applications of naive Bayes, neural networks, and support vector machines. This course provides a broad introduction to machine learning and statistical

From playlist Lecture Collection | Machine Learning

Finding the Marginal Cost Function given the Cost Function


From playlist Calculus

Stanford CS229: Machine Learning | Summer 2019 | Lecture 3 - Probability and Statistics

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3potDOW. Lecturer: Anand Avati, Computer Science, PhD. To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

ML Tutorial: Bayesian Machine Learning (Zoubin Ghahramani)

Machine Learning Tutorial at Imperial College London: Bayesian Machine Learning, by Zoubin Ghahramani (University of Cambridge), January 29, 2014.

From playlist Machine Learning Tutorials

A Primer on Gaussian Processes for Regression Analysis || Chris Fonnesbeck

Gaussian processes are flexible probabilistic models that can be used to perform Bayesian regression analysis without having to provide pre-specified functional relationships between the variables. This tutorial will introduce new users to specifying, fitting and validating Gaussian proces

From playlist Machine Learning

Maximum Likelihood For the Normal Distribution, step-by-step!!!

Calculating the maximum likelihood estimates for the normal distribution shows you why we use the mean and standard deviation to define the shape of the curve. NOTE: This is another follow up to the StatQuests on Probability vs Likelihood https://youtu.be/pYxNSUDSFH4 and Maximum Likelihood: h

From playlist StatQuest

Fellow Short Talks: Professor Zoubin Ghahramani, University of Cambridge

Bio: Zoubin Ghahramani FRS is Professor of Information Engineering at the University of Cambridge, where he leads the Machine Learning Group, and The Alan Turing Institute’s University Liaison Director for Cambridge. He is also the Deputy Academic Director of the Leverhulme Centre for the

From playlist Short Talks

35 - Normal prior and likelihood - posterior predictive distribution

This video provides a derivation of the normal posterior predictive distribution for the case of a normal prior distribution and likelihood. If you are interested in seeing more of the material, arranged into a playlist, please visit: https://www.youtube.com/playlist?list=PLFDbGp5YzjqXQ4o

From playlist Bayesian statistics: a comprehensive course

Pavel Krupskiy - Conditional Normal Extreme-Value Copulas

Dr Pavel Krupskiy (University of Melbourne) presents “Conditional Normal Extreme-Value Copulas”, 14 August 2020. Seminar organised by UNSW Sydney.

From playlist Statistics Across Campuses