More Info: https://www.caltech.edu/about/news/where-are-my-keys-and-other-memory-based-choices-probed-brain The brain’s memory-retrieval network is composed of many interacting regions. In a new study, Caltech researchers looked at the interaction between two nodes in this network: the me

From playlist Our Research

Neural Networks and Deep Learning

This lecture explores the recent explosion of interest in neural networks and deep learning in the context of 1) vast and increasing data sets, and 2) rapidly improving computational hardware, which have enabled the training of deep neural networks. Book website: http://databookuw.com/

From playlist Intro to Data Science

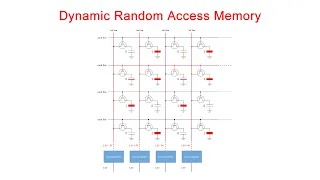

Dynamic Random Access Memory (DRAM). Part 1: Memory Cell Arrays

This is the first in a series of computer science videos about the fundamental principles of Dynamic Random Access Memory (DRAM) and the essential concepts of DRAM operation. This particular video covers the structure and workings of the DRAM memory cell. That is, the basic unit of st

From playlist Random Access Memory

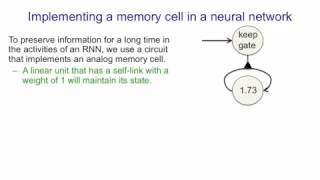

Lecture 7E : Long term short term memory

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 7E : Long term short term memory

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Stanford Seminar - Rethinking Memory System Design for Data-Intensive Computing

"Rethinking Memory System Design for Data-Intensive Computing" - Onur Mutlu of Carnegie Mellon University. About the talk: The memory system is a fundamental performance and energy bottleneck in almost all computing systems. Recent system design, application, and technology trends that requ

From playlist Engineering

A Trip Down Memory Lane - Michelle Effros - 6/7/2019

Changing Directions & Changing the World: Celebrating the Carver Mead New Adventures Fund. June 7, 2019 in Beckman Institute Auditorium at Caltech. The symposium features technical talks from Carver Mead New Adventures Fund recipients, alumni, and Carver Mead himself! Since 2014, this Fun

From playlist Carver Mead New Adventures Fund Symposium

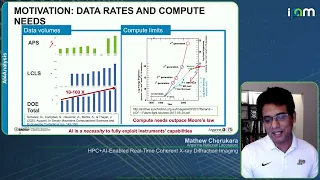

Mathew Cherukara - HPC+AI-Enabled Real-Time Coherent X-ray Diffraction Imaging - IPAM at UCLA

Recorded 14 October 2022. Mathew Cherukara of Argonne National Laboratory presents "HPC+AI-Enabled Real-Time Coherent X-ray Diffraction Imaging" at IPAM's Diffractive Imaging with Phase Retrieval Workshop. Abstract: The capabilities provided by next generation light sources such as the Adva

From playlist 2022 Diffractive Imaging with Phase Retrieval - - Computational Microscopy

Lecture 15/16 : Modeling hierarchical structure with neural nets

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] 15A From Principal Components Analysis to Autoencoders 15B Deep Autoencoders 15C Deep autoencoders for document retrieval and visualization 15D Semantic hashing 15E Learning binary codes for image retrieval 15F Shallo

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Lecture 11.2 — Dealing with spurious minima [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Complete Roadmap to become a Data Scientist | Data Scientist Career | Learn Data Science | Edureka

🔥Edureka Data Science with Python Certification Course: https://www.edureka.co/data-science-python-certification-course (Use code YOUTUBE20 for a flat 20% off on all trainings) This video on 'Data Scientist Roadmap' will help you understand who is a Data Scientist, Data Scientist Roles and

From playlist Data Science Training Videos

Hopfield Networks is All You Need (Paper Explained)

#ai #transformer #attention Hopfield Networks are one of the classic models of biological memory networks. This paper generalizes modern Hopfield Networks to continuous states and shows that the corresponding update rule is equal to the attention mechanism used in modern Transformers. It

From playlist Papers Explained

Recurrent Neural Networks (RNN) and Long Short Term Memory Networks (LSTM)

#RNN #LSTM #DeepLearning #MachineLearning #DataScience #RecurrentNerualNetworks Recurrent Neural Networks or RNN have been very popular and effective with time series data. In this tutorial, we learn about RNNs, the Vanishing Gradient problem and the solution to the problem which is Long

From playlist Deep Learning with Keras - Python

We implement a multilayer perceptron (MLP) character-level language model. In this video we also introduce many basics of machine learning (e.g. model training, learning rate tuning, hyperparameters, evaluation, train/dev/test splits, under/overfitting, etc.). Links: - makemore on github:

From playlist Neural Networks: Zero to Hero

Lecture 11B : Dealing with spurious minima in Hopfield Nets

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 11B : Dealing with spurious minima in Hopfield Nets

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Stanford CS230: Deep Learning | Autumn 2018 | Lecture 10 - Chatbots / Closing Remarks

Andrew Ng, Adjunct Professor & Kian Katanforoosh, Lecturer - Stanford University http://onlinehub.stanford.edu/ Andrew Ng Adjunct Professor, Computer Science Kian Katanforoosh Lecturer, Computer Science To follow along with the course schedule and syllabus, visit: http://cs230.stanfo

From playlist Stanford CS230: Deep Learning | Autumn 2018

This lecture gives an overview of neural networks, which play an important role in machine learning today. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science

Introduction to Neural Re-Ranking

In this lecture we look at the workflow (including training and evaluation) of neural re-ranking models and some basic neural re-ranking architectures. Slides & transcripts are available at: https://github.com/sebastian-hofstaetter/teaching 📖 Check out Youtube's CC - we added our high qua

From playlist Advanced Information Retrieval 2021 - TU Wien

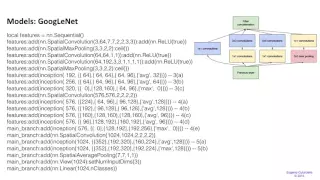

6.2 deep neural network models

From playlist Deep-Learning-Course