Russian Multiplication Algorithm - Intro to Algorithms

This video is part of an online course, Intro to Algorithms. Check out the course here: https://www.udacity.com/course/cs215.

From playlist Introduction to Algorithms

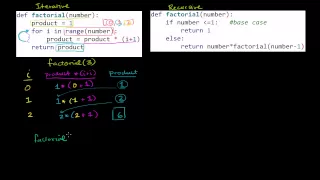

Comparing Iterative and Recursive Factorial Functions

From playlist Computer Science
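The factorial comparison above can be made concrete with a minimal sketch. It assumes non-negative integer input, and the function names are hypothetical rather than taken from the video. Both versions compute the same value. The loop uses constant extra stack space, while the recursive version adds one stack frame per level of n.

```python
import math


def factorial_iterative(n: int) -> int:
    """Compute n! with a simple loop (constant extra stack space)."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    result = 1
    for k in range(2, n + 1):
        result *= k  # accumulate the running product 2 * 3 * ... * n
    return result


def factorial_recursive(n: int) -> int:
    """Compute n! from n! = n * (n - 1)! and 0! = 1 (one stack frame per level)."""
    if n < 0:
        raise ValueError("factorial is undefined for negative integers")
    if n == 0:
        return 1  # base case stops the recursion
    return n * factorial_recursive(n - 1)


# Both agree with the standard library for small inputs.
for n in range(6):
    assert factorial_iterative(n) == factorial_recursive(n) == math.factorial(n)

print(factorial_iterative(5))  # 120
```

In CPython, the recursive version raises `RecursionError` once n approaches the default recursion limit of about 1000 frames. The iterative version has no such limit.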

Russian Multiplication Algorithm Solution - Intro to Algorithms

This video is part of an online course, Intro to Algorithms. Check out the course here: https://www.udacity.com/course/cs215.

From playlist Introduction to Algorithms

Russian Peasants Algorithm - Intro to Algorithms

This video is part of an online course, Intro to Algorithms. Check out the course here: https://www.udacity.com/course/cs215.

From playlist Introduction to Algorithms
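The Russian multiplication (Russian peasant) method from the videos above can be illustrated with a short sketch. It assumes the common textbook form of the method: halve one number and double the other, then add the doubled values from every row where the halved number is odd. Inputs are assumed to be non-negative integers, and `russian_multiply` is a hypothetical name rather than the course's code.

```python
def russian_multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers by repeated halving and doubling."""
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")
    total = 0
    while a > 0:
        if a % 2 == 1:  # odd row: keep the current doubled value
            total += b
        a //= 2  # halve the left column, dropping any remainder
        b *= 2   # double the right column
    return total


print(russian_multiply(14, 23))  # 322
```

The method works because each odd row matches a 1 bit in the binary expansion of `a`. The sum therefore picks out exactly the multiples of `b` that add up to `a * b`, and the loop runs about log2(a) times.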

Laura Grigori: Challenges in achieving scalable and robust linear solvers

This talk focuses on challenges that we address when designing linear solvers that aim to achieve scalability on large-scale computers while also preserving numerical robustness. We will consider preconditioned Krylov subspace solvers. Getting scalability relies on reducing global synch

From playlist Numerical Analysis and Scientific Computing

Today, we diagonalized some matrices, if you know what I mean... It was meant literally: that is what we did. -- Watch live at https://www.twitch.tv/simuleios

From playlist DMRG

PIC Math - Creating More Realistic Animation for Movies - Segment II

Prof. Joseph Teran of the Department of Mathematics at UCLA gives an overview of the numerical linear algebra and iterative method techniques that are used to simulate physical phenomena such as water, fire, smoke, and elastic deformations in the movie and gaming industries.

From playlist PIC Math 2015 - Industrial Math Case Studies

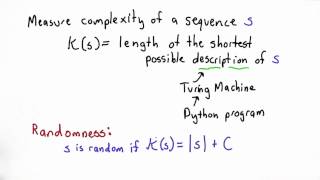

Kolmogorov Complexity - Applied Cryptography

This video is part of an online course, Applied Cryptography. Check out the course here: https://www.udacity.com/course/cs387.

From playlist Applied Cryptography

Functions of Matrices, Lecture 5

Functions of Matrices with Nick Higham from the University of Manchester. Lecture 5

From playlist Gene Golub SIAM Summer School Videos

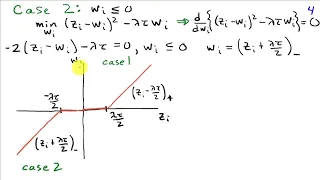

Lecture 12 | Convex Optimization II (Stanford)

Lecture by Professor Stephen Boyd for Convex Optimization II (EE 364B) in the Stanford Electrical Engineering department. Professor Boyd finishes his talk on Sequential Convex Programming and begins a lecture on Conjugate Gradient Methods. This course introduces topics such as subgradie

From playlist Lecture Collection | Convex Optimization

Lec 18 | MIT 18.086 Mathematical Methods for Engineers II

Krylov Methods / Multigrid Continued. View the complete course at: http://ocw.mit.edu/18-086S06. License: Creative Commons BY-NC-SA. More information at http://ocw.mit.edu/terms. More courses at http://ocw.mit.edu.

From playlist MIT 18.086 Mathematical Methods for Engineers II, Spring '06

The Loss Landscape of Deep Neural Networks by Shankar Krishnan

Discussion Meeting: The Theoretical Basis of Machine Learning (ML). Organizers: Chiranjib Bhattacharya, Sunita Sarawagi, Ravi Sundaram and SVN Vishwanathan. Date: 27 December 2018 to 29 December 2018. Venue: Ramanujan Lecture Hall, ICTS, Bangalore. ML (Machine Learning) has enjoyed tr

From playlist The Theoretical Basis of Machine Learning 2018 (ML)

Lecture 13 | Convex Optimization II (Stanford)

Lecture by Professor Stephen Boyd for Convex Optimization II (EE 364B) in the Stanford Electrical Engineering department. Professor Boyd continues his lecture on Conjugate Gradient Methods and then starts lecturing on the Truncated Newton Method. This course introduces topics such as su

From playlist Lecture Collection | Convex Optimization

Lec 19 | MIT 18.086 Mathematical Methods for Engineers II

Conjugate Gradient Method. View the complete course at: http://ocw.mit.edu/18-086S06. License: Creative Commons BY-NC-SA. More information at http://ocw.mit.edu/terms. More courses at http://ocw.mit.edu.

From playlist MIT 18.086 Mathematical Methods for Engineers II, Spring '06
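Several lectures listed above cover the conjugate gradient method: Boyd's Lectures 12 and 13, and Lec 19 of 18.086. The sketch below shows the basic unpreconditioned iteration. It assumes a small, dense, symmetric positive-definite matrix stored as nested Python lists, and the helper names `dot`, `matvec` and `conjugate_gradient` are hypothetical. None of it is code from the lectures.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    """Dense matrix-vector product A @ v for a list-of-lists matrix."""
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for symmetric positive-definite A, starting from x = 0."""
    n = len(b)
    if max_iter is None:
        # Exact arithmetic needs at most n steps; allow extra for rounding error.
        max_iter = 10 * n
    x = [0.0] * n
    r = [float(bi) for bi in b]  # residual b - A x, which equals b at x = 0
    p = list(r)                  # first search direction is the residual
    rs_old = dot(r, r)
    if rs_old ** 0.5 < tol:
        return x
    for _ in range(max_iter):
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)  # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        if rs_new ** 0.5 < tol:
            break
        beta = rs_new / rs_old  # keeps the new direction A-conjugate to the old ones
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


x = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
print(x)  # approximately [1/11, 7/11]
```

For large sparse systems, a library routine such as SciPy's `scipy.sparse.linalg.cg` is the practical choice, usually paired with a preconditioner. This list-based loop is only meant to show the structure of the iteration.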

Peter Benner: Matrix Equations and Model Reduction, Lecture 5

Peter Benner from the Max Planck Institute presents: Matrix Equations and Model Reduction; Lecture 5

From playlist Gene Golub SIAM Summer School Videos

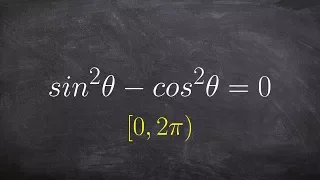

Solving a trigonometric equation by applying the Pythagorean identity

👉 Learn how to solve trigonometric equations. There are various methods that can be used to solve trigonometric equations; they include factoring out the GCF and simplifying the factored equation. Another method is to use a trigonometric identity to reduce and then simplify the given eq

From playlist Solve Trigonometric Equations by Factoring

Martin J. Gander: Multigrid and Domain Decomposition: Similarities and Differences

Both multigrid and domain decomposition methods are so-called optimal solvers for Laplace-type problems, but how do they compare? I will start by showing in what sense these methods are optimal for the Laplace equation, which will reveal that while both multigrid and domain decomposition a

From playlist Numerical Analysis and Scientific Computing