In this video, I present some applications of artificial neural networks and describe how such networks are typically structured. My hope is to create another video (soon) in which I describe how neural networks are actually trained from data.

From playlist Machine Learning

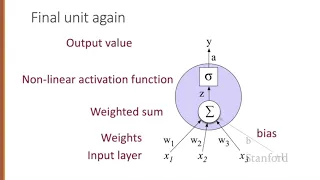

Neural Networks 1 Neural Units

From playlist Week 5: Neural Networks

What is Deep Learning? | Introduction to Deep Learning | Deep Learning Tutorial | Simplilearn

From playlist Deep Learning Tutorial Videos 🔥[2022 Updated] | Simplilearn

What is Deep Learning | Deep Learning Explained | Deep Learning Tutorial For Beginners | Simplilearn

From playlist Deep Learning Tutorial Videos 🔥[2022 Updated] | Simplilearn

Serhiy Yanchuk - Adaptive dynamical networks: from multiclusters to recurrent synchronization

Recorded 02 September 2022. Serhiy Yanchuk of Humboldt-Universität presents "Adaptive dynamical networks: from multiclusters to recurrent synchronization" at IPAM's Reconstructing Network Dynamics from Data: Applications to Neuroscience and Beyond. Abstract: Adaptive dynamical networks is

From playlist 2022 Reconstructing Network Dynamics from Data: Applications to Neuroscience and Beyond

FEM@LLNL | Modeling and Controlling Turbulent Flows through Deep Learning

Sponsored by the MFEM project, the FEM@LLNL Seminar Series focuses on finite element research and applications talks of interest to the MFEM community. On August 23, 2022, Ricardo Vinuesa of KTH Royal Institute of Technology presented "Modeling and Controlling Turbulent Flows through Deep

From playlist FEM@LLNL Seminar Series

Practical 4.0 – RNN, vectors and sequences

Recurrent Neural Networks – Vectors and sequences. Full project: https://github.com/Atcold/torch-Video-Tutorials Links to the papers: Vinyals et al. (2016) https://arxiv.org/abs/1609.06647 Zaremba & Sutskever (2015) https://arxiv.org/abs/1410.4615 Cho et al. (2014) https://arxiv.org/abs/1406

From playlist Deep-Learning-Course

Neural Network Training (Part 3): Gradient Calculation

In this video we will see how to calculate the gradients of a neural network. Each gradient is the partial derivative of the network's error with respect to one weight, so it indicates how much that weight contributes to the error. In the next video we will see how these gradients can be used to modify the weights of the neural network.

From playlist Neural Networks by Jeff Heaton

DDPS | Modeling and controlling turbulent flows through deep learning

Description: The advent of new powerful deep neural networks (DNNs) has fostered their application in a wide range of research areas, including more recently in fluid mechanics. In this presentation, we will cover some of the fundamentals of deep learning applied to computational fluid dyn

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

From playlist Machine Learning Course

Rafael Gómez-Bombarelli: "Coarse graining autoencoders and evolutionary learning of atomistic..."

Machine Learning for Physics and the Physics of Learning 2019 Workshop I: From Passive to Active: Generative and Reinforcement Learning with Physics "Coarse graining autoencoders and evolutionary learning of atomistic potentials" Rafael Gomez-Bombarelli, Massachusetts Institute of Technol

From playlist Machine Learning for Physics and the Physics of Learning 2019

Lecture 7B : Training RNNs with backpropagation

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 7B : Training RNNs with backpropagation

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

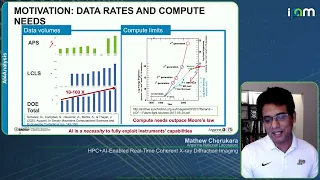

Mathew Cherukara - HPC+AI-Enabled Real-Time Coherent X-ray Diffraction Imaging - IPAM at UCLA

Recorded 14 October 2022. Mathew Cherukara of Argonne National Laboratory presents "HPC+AI-Enabled Real-Time Coherent X-ray Diffraction Imaging" at IPAM's Diffractive Imaging with Phase Retrieval Workshop. Abstract: The capabilities provided by next generation light sources such as the Adva

From playlist 2022 Diffractive Imaging with Phase Retrieval - - Computational Microscopy

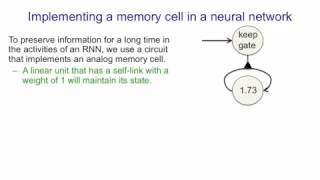

Lecture 7E : Long term short term memory

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 7E : Long term short term memory

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Frank Noé: "Intro to Machine Learning (Part 1/2)"

Watch part 2/2 here: https://youtu.be/7TZnGQrNF6g Machine Learning for Physics and the Physics of Learning Tutorials 2019 "Intro to Machine Learning (Part 1/2)" Frank Noé, Freie Universität Berlin Institute for Pure and Applied Mathematics, UCLA September 5, 2019 For more information:

From playlist Machine Learning for Physics and the Physics of Learning 2019

Deploying Deep Learning Models | Deep Learning for Engineers, Part 5

This video covers the additional work and considerations you need to think about once you have a deep neural network that can classify your data. We need to consider that the trained network is usually part of a larger system and it needs to be incorporated into that design. We also want

From playlist Deep Learning for Engineers

Neural Networks in System Models

In this presentation, you will see how data provides new avenues and connections to physical modeling with the new support for neural networks in system modeling. Applications and examples will be explored.

From playlist Wolfram Technology Conference 2022