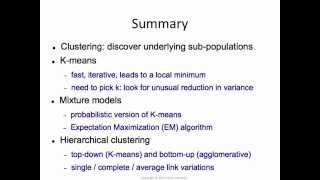

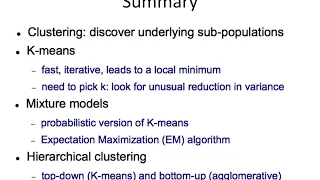

Hierarchical Clustering 5: summary

[http://bit.ly/s-link] Summary of the lecture.

From playlist Hierarchical Clustering

Hierarchical Clustering 3: single-link vs. complete-link

[http://bit.ly/s-link] Agglomerative clustering needs a mechanism for measuring the distance between two clusters, and there are many different ways of measuring such a distance. We explain the similarities and differences between single-link, complete-link, average-link, centroid method and…

From playlist Hierarchical Clustering
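
The cluster-distance definitions named in the description above (single-link, complete-link, average-link and the centroid method) can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's own code: it assumes Euclidean distance between points, clusters given as lists of coordinate tuples, and hypothetical function names.

```python
import math
from itertools import product


def single_link(a, b):
    # distance between the closest pair of points, one from each cluster
    return min(math.dist(p, q) for p, q in product(a, b))


def complete_link(a, b):
    # distance between the farthest pair of points, one from each cluster
    return max(math.dist(p, q) for p, q in product(a, b))


def average_link(a, b):
    # mean of all len(a) * len(b) cross-cluster pairwise distances
    return sum(math.dist(p, q) for p, q in product(a, b)) / (len(a) * len(b))


def centroid(cluster):
    # coordinate-wise mean of the points in the cluster
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def centroid_link(a, b):
    # distance between the two cluster centroids
    return math.dist(centroid(a), centroid(b))


A = [(0.0, 0.0), (1.0, 0.0)]
B = [(4.0, 0.0), (6.0, 0.0)]
print(single_link(A, B), complete_link(A, B), average_link(A, B), centroid_link(A, B))
# 3.0 6.0 4.5 4.5
```

Average link and centroid link coincide in this example only because every point of A lies to the left of every point of B on the same line; in general they differ.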

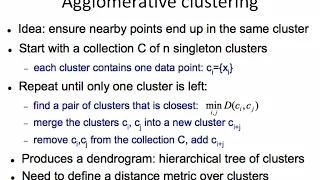

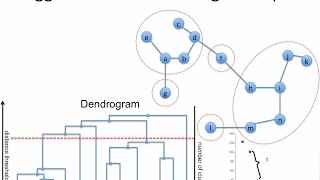

Clustering (2): Hierarchical Agglomerative Clustering

Hierarchical agglomerative clustering, or linkage clustering. Procedure, complexity analysis, and cluster dissimilarity measures including single linkage, complete linkage, and others.

From playlist cs273a
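
The basic agglomerative procedure described above can be sketched as a deliberately naive loop. It starts with every point as its own cluster and repeatedly merges the closest pair until one cluster remains. The sketch assumes Euclidean points and single linkage as the default; `agglomerate` is a hypothetical name. Because it recomputes every pair's linkage on each pass, it takes O(n^3) time. Practical implementations keep and update a matrix of inter-cluster distances instead.

```python
import math
from itertools import combinations


def single_link(a, b):
    # smallest Euclidean distance between a point of a and a point of b
    return min(math.dist(p, q) for p in a for q in b)


def agglomerate(points, linkage=single_link):
    """Naive agglomerative clustering.

    Returns the merges in order as (cluster_a, cluster_b, distance) triples.
    """
    clusters = [[p] for p in points]  # every point starts as its own cluster
    merges = []
    while len(clusters) > 1:
        # scan every pair of current clusters for the smallest linkage distance
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        merges.append((clusters[i], clusters[j], linkage(clusters[i], clusters[j])))
        # replace cluster i by the union and drop cluster j (i < j, so index i is unaffected)
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return merges


merges = agglomerate([(0.0,), (1.0,), (5.0,), (7.0,)])
print([d for _, _, d in merges])  # [1.0, 2.0, 4.0]
```

Each recorded merge is one internal node of the dendrogram. For single, complete and average linkage, the merge heights never decrease. The centroid method can produce inversions.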

Hierarchical Clustering 4: the Lance-Williams algorithm

[http://bit.ly/s-link] The Lance-Williams algorithm offers a single, efficient way to implement agglomerative clustering for different linkage types. We go over the algorithm and provide the update equations for single-link, complete-link and average-link definitions of inter-clust…

From playlist Hierarchical Clustering
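
The core idea of the Lance-Williams approach is that, after clusters i and j merge, the distance from any other cluster k to the merged cluster can be computed from the old distances alone:

d(k, i∪j) = α_i d(k, i) + α_j d(k, j) + β d(i, j) + γ |d(k, i) − d(k, j)|

The sketch below uses the standard coefficients for single link (α_i = α_j = 1/2, β = 0, γ = −1/2), complete link (the same but γ = +1/2) and group average (α_i = n_i/(n_i + n_j), α_j = n_j/(n_i + n_j), β = γ = 0). It is a minimal illustration, not the lecture's code. It assumes a symmetric distance matrix given as a list of lists and uses hypothetical function names. It still scans all pairs for the minimum on every pass, so it is O(n^3) rather than one of the faster variants.

```python
def lance_williams(d_ki, d_kj, d_ij, n_i, n_j, method):
    """Distance from cluster k to the union of clusters i and j."""
    if method == "single":
        a_i, a_j, beta, gamma = 0.5, 0.5, 0.0, -0.5
    elif method == "complete":
        a_i, a_j, beta, gamma = 0.5, 0.5, 0.0, 0.5
    elif method == "average":
        a_i, a_j, beta, gamma = n_i / (n_i + n_j), n_j / (n_i + n_j), 0.0, 0.0
    else:
        raise ValueError(f"unsupported method: {method}")
    return a_i * d_ki + a_j * d_kj + beta * d_ij + gamma * abs(d_ki - d_kj)


def hac_lance_williams(dist, method="single"):
    """Agglomerative clustering on an n x n distance matrix.

    Returns a list of (i, j, distance) merges; the cluster created by the
    m-th merge (counting from 0) gets id n + m.
    """
    n = len(dist)

    def key(a, b):
        # store each unordered pair once, smaller id first
        return (a, b) if a < b else (b, a)

    d = {(i, j): dist[i][j] for i in range(n) for j in range(i + 1, n)}
    size = {i: 1 for i in range(n)}
    active = set(range(n))
    merges = []
    next_id = n
    while len(active) > 1:
        # closest pair among the currently active clusters
        (i, j), d_ij = min(d.items(), key=lambda item: item[1])
        new = next_id
        next_id += 1
        # Lance-Williams update: distances to the new cluster come from old ones
        for k in active - {i, j}:
            d[(k, new)] = lance_williams(
                d[key(k, i)], d[key(k, j)], d_ij, size[i], size[j], method
            )
        # forget every distance that involves the two merged clusters
        d = {p: v for p, v in d.items() if i not in p and j not in p}
        active -= {i, j}
        active.add(new)
        size[new] = size[i] + size[j]
        merges.append((i, j, d_ij))
    return merges


points = [0.0, 1.0, 5.0, 7.0]
D = [[abs(a - b) for b in points] for a in points]
for method in ("single", "complete", "average"):
    print(method, hac_lance_williams(D, method))
```

For these four points, the final merge of {0, 1} with {5, 7} comes out at 4.0, 7.0 and 5.5. These are the minimum, maximum and mean of the four cross-cluster distances, computed without ever revisiting the original points.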

How to Cluster Data in MATLAB | K Means Clustering | Hierarchical Clustering in MATLAB | Simplilearn

🔥 Become a Data Analytics expert (Coupon Code: YTBE15): https://www.simplilearn.com/data-analyst-masters-certification-training-course?utm_campaign=5April2023HowtoClusterDatainMATLAB&utm_medium=DescriptionFirstFold&utm_source=youtube 🔥 Professional Certificate Program In Data Analytics

From playlist Matlab

NVAE: A Deep Hierarchical Variational Autoencoder (Paper Explained)

VAEs have traditionally been hard to train at high resolutions and unstable when going deep with many layers. In addition, VAE samples are often blurrier and less crisp than those from GANs. This paper details all the engineering choices necessary to successfully train a deep hierarchic…

From playlist Papers Explained

Martina Scolamiero 9/15/21: Extracting persistence features with hierarchical stabilisation

Title: Extracting persistence features with hierarchical stabilisation. Abstract: It is often complicated to understand complex correlation patterns between multiple measurements on a dataset. In multi-parameter persistence we represent them through algebraic objects called persistence mo…

From playlist AATRN 2021

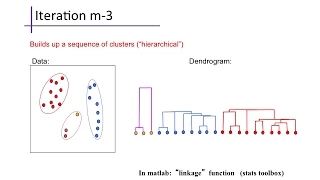

Hierarchical Clustering | Hierarchical Clustering in R | Agglomerative Clustering | Simplilearn

This video on hierarchical clustering will help you understand what clustering is, what hierarchical clustering is, how hierarchical clustering works, and what agglomerative and divisive clustering are. You will also see a demo on how to group states based on their sales…

From playlist Data Science For Beginners | Data Science Tutorial🔥[2022 Updated]

V-2: Hierarchical clustering with Python: sklearn, scipy | data analysis | Unsupervised | Discovery

In this super chapter, we'll cover discovering clusters (groups) with the agglomerative hierarchical clustering technique on the WHOLE CUSTOMER DATA (fresh, milk, grocery, ...) using Python in a Jupyter notebook: the pandas library for data manipulation, matplotlib for creation of graph…

From playlist Python

Matthew Schofield - Genetic maps from genotype-by-sequencing data

Matthew Schofield (University of Otago) presents "Genetic maps from genotype-by-sequencing data", 5 June 2020.

From playlist Statistics Across Campuses

Data Science - Part VII - Cluster Analysis

For downloadable versions of these lectures, please go to the following links: http://www.slideshare.net/DerekKane/presentations and https://github.com/DerekKane/YouTube-Tutorials. This lecture provides an overview of clustering techniques, including K-Means, Hierarchical Clustering, and Gauss…

From playlist Data Science
