(ML 14.4) Hidden Markov models (HMMs) (part 1)

Definition of a hidden Markov model (HMM). Description of the parameters of an HMM (transition matrix, emission probability distributions, and initial distribution). Illustration of a simple example of an HMM.

From playlist Machine Learning

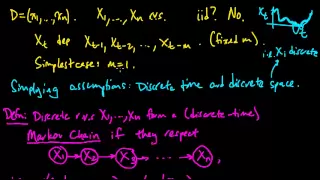

(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

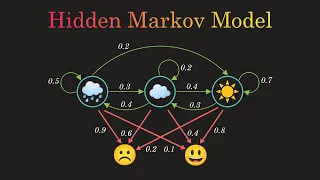

Hidden Markov Model Clearly Explained! Part - 5

So far we have discussed Markov Chains. Let's move one step further. Here, I'll explain the Hidden Markov Model with an easy example. I'll also show you the underlying mathematics.

From playlist Markov Chains Clearly Explained!

(ML 14.3) Markov chains (discrete-time) (part 2)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

How to Estimate the Parameters of a Hidden Markov Model from Data [Lecture]

This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course: https://boydgraber.org/teaching/CMSC_723/ (Including homeworks and reading.) Intro to HMMs: https://youtu.be/0gu1BDj5_Kg Music: h

From playlist Computational Linguistics I

Data Science - Part XIII - Hidden Markov Models

For downloadable versions of these lectures, please go to the following links: http://www.slideshare.net/DerekKane/presentations https://github.com/DerekKane/YouTube-Tutorials This lecture provides an overview of Markov processes and Hidden Markov Models. We will start off by going throug

From playlist Data Science

(ML 14.5) Hidden Markov models (HMMs) (part 2)

Definition of a hidden Markov model (HMM). Description of the parameters of an HMM (transition matrix, emission probability distributions, and initial distribution). Illustration of a simple example of an HMM.

From playlist Machine Learning

Hidden Markov Model : Data Science Concepts

All about the Hidden Markov Model in data science / machine learning

From playlist Data Science Concepts

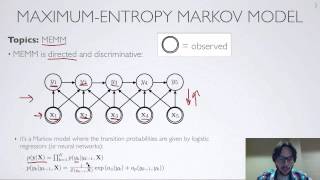

Conditional Random Fields : Data Science Concepts

My Patreon : https://www.patreon.com/user?u=49277905 Hidden Markov Model : https://www.youtube.com/watch?v=fX5bYmnHqqE Part of Speech Tagging : https://www.youtube.com/watch?v=fv6Z3ZrAWuU Viterbi Algorithm : https://www.youtube.com/watch?v=IqXdjdOgXPM 0:00 Recap HMM 4:07 Limitations of

From playlist Data Science Concepts

Lecture 12/16 : Restricted Boltzmann machines (RBMs)

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] 12A The Boltzmann Machine learning algorithm 12B More efficient ways to get the statistics 12C Restricted Boltzmann Machines 12D An example of Contrastive Divergence Learning 12E RBMs for collaborative filtering

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Time Series class: Part 2 - Professor Chris Williams, University of Edinburgh

Part 1: https://youtu.be/vDl5NVStQwU Introduction: Moving average, Autoregressive and ARMA models. Parameter estimation, likelihood based inference and forecasting with time series. Advanced: State-space models (hidden Markov models, Kalman filter) and applications. Recurrent neural netw

From playlist Data science classes

Geoffrey Hinton: "A Computational Principle that Explains Sex, the Brain, and Sparse Coding"

Graduate Summer School 2012: Deep Learning, Feature Learning "A Computational Principle that Explains Sex, the Brain, and Sparse Coding" Geoffrey Hinton, University of Toronto Institute for Pure and Applied Mathematics, UCLA July 11, 2012 For more information: https://www.ipam.ucla.edu/

From playlist GSS2012: Deep Learning, Feature Learning

Lecture 12B : More efficient ways to get the statistics

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 12B : More efficient ways to get the statistics

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

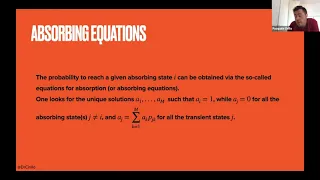

Brain Teasers: 10. Winning in a Markov chain

In this exercise we use the absorbing equations for Markov chains to solve a simple game between two players. The Zoom connection was not very stable, so there are a few audio problems. Sorry.

From playlist Brain Teasers and Quant Interviews

Lecture 12.2 — More efficient ways to get the statistics [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton