Linear Algebra for Computer Scientists. 12. Introducing the Matrix

This computer science video is one of a series of lessons about linear algebra for computer scientists. This video introduces the concept of a matrix. A matrix is a rectangular or square, two-dimensional array of numbers, symbols, or expressions. A matrix is also classed a second order

From playlist Linear Algebra for Computer Scientists

What is a matrix? Free ebook http://tinyurl.com/EngMathYT

From playlist Intro to Matrices

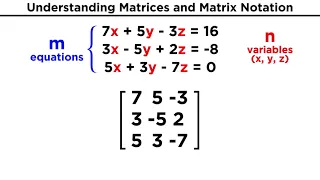

Understanding Matrices and Matrix Notation

In order to do linear algebra, we will have to know how to use matrices. So what's a matrix? It's just an array of numbers listed in a grid of particular dimensions that can represent the coefficients and constants from a system of linear equations. They're fun, I promise! Let's just start

From playlist Mathematics (All Of It)

Example of Gram-Schmidt Orthogonalization

Linear Algebra: Construct an orthonormal basis of R^3 by applying the Gram-Schmidt orthogonalization process to (1, 1, 1), (1, 0, 1), and (1, 1, 0). In addition, we show how the Gram-Schmidt equations allow one to factor an invertible matrix into an orthogonal matrix times an upper tria

From playlist MathDoctorBob: Linear Algebra I: From Linear Equations to Eigenspaces | CosmoLearning.org Mathematics
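As a rough illustration of the process named in this description, the sketch below applies Gram-Schmidt to the three vectors it lists. The function names `dot` and `gram_schmidt` are hypothetical, and the loop subtracts projections one at a time from the running remainder (the "modified" ordering), which gives the same result as the textbook formula in exact arithmetic. It is not taken from the video.

```python
import math

def dot(u, v):
    """Standard dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Return an orthonormal list spanning the same space as `vectors`."""
    basis = []
    for v in vectors:
        w = list(v)
        # Remove the component of w along each unit vector found so far.
        for q in basis:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm < 1e-12:
            raise ValueError("vectors are linearly dependent")
        basis.append([wi / norm for wi in w])
    return basis

# The three vectors from the description above.
q1, q2, q3 = gram_schmidt([(1, 1, 1), (1, 0, 1), (1, 1, 0)])
```

Placing `q1`, `q2`, `q3` as the columns of a matrix Q gives an orthogonal matrix; the coefficients `c` computed along the way, together with the norms, are the entries of the upper triangular factor.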

How do we add matrices? A matrix is an abstract object that exists in its own right, and in this sense, it is similar to a natural number, or a complex number, or even a polynomial. Each element in a matrix has an address by way of the row in which it is and the column in which it is. Y

From playlist Introducing linear algebra

We have already looked at the column view of a matrix. In this video lecture I want to expand on this topic to show you that each matrix has a column space. If a matrix is part of a linear system then a linear combination of the columns creates a column space. The vector created by the

From playlist Introducing linear algebra
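One way to picture the column view mentioned here: the product Ax is a linear combination of the columns of A, weighted by the entries of x, so every such product lies in the column space. The sketch below checks this on a small made-up matrix; the names `mat_vec` and `column_combination` and the sample values are illustrative assumptions, not from the video.

```python
def mat_vec(A, x):
    """Row view: each entry of Ax is a row of A dotted with x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def column_combination(A, x):
    """Column view: Ax is x[0]*col0 + x[1]*col1 + ..."""
    rows, cols = len(A), len(A[0])
    result = [0] * rows
    for j in range(cols):
        for i in range(rows):
            result[i] += x[j] * A[i][j]
    return result

# Hypothetical example data.
A = [[1, 2],
     [3, 4],
     [5, 6]]
x = [2, -1]

b = column_combination(A, x)   # 2*(1,3,5) - 1*(2,4,6)
```

Both functions return the same vector; the second simply makes the "combination of columns" reading explicit.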

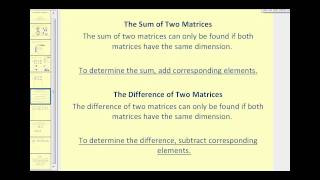

Matrix Addition, Subtraction, and Scalar Multiplication

This video shows how to add, subtract and perform scalar multiplication with matrices. http://mathispower4u.yolasite.com/ http://mathispower4u.wordpress.com/

From playlist Introduction to Matrices and Matrix Operations
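The three operations named in this entry are all element-wise. A minimal sketch, assuming matrices are stored as lists of rows and using hypothetical helper names (`add`, `scale`, `subtract`):

```python
def add(A, B):
    """Element-wise sum; A and B must have the same shape."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(c, A):
    """Multiply every entry of A by the scalar c."""
    return [[c * a for a in row] for row in A]

def subtract(A, B):
    """A - B, written as A + (-1)B."""
    return add(A, scale(-1, B))

# Hypothetical example data.
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

total = add(A, B)
difference = subtract(A, B)
tripled = scale(3, A)
```

Addition and subtraction are only defined when both matrices have the same number of rows and columns, which is why `add` checks the shapes first.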

2 Construction of a Matrix-YouTube sharing.mov

This video shows you how a matrix is constructed from a set of linear equations. It helps you understand where the various elements in a matrix come from.

From playlist Linear Algebra
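To make the construction concrete, here is a small sketch that builds the coefficient matrix, the constants vector, and the augmented matrix from a system of equations. The system (2x + 3y = 8, x - y = -1), the dictionary representation, and the name `build_matrices` are assumptions chosen for illustration; the video may use a different example.

```python
def build_matrices(equations, variables):
    """Turn (coefficients, constant) pairs into A, b and the augmented matrix."""
    # Each row of A lists one equation's coefficients in a fixed variable order;
    # a variable missing from an equation contributes a 0.
    A = [[coeffs.get(v, 0) for v in variables] for coeffs, _ in equations]
    b = [rhs for _, rhs in equations]
    augmented = [row + [rhs] for row, rhs in zip(A, b)]
    return A, b, augmented

# Hypothetical system:  2x + 3y = 8
#                        x -  y = -1
equations = [
    ({"x": 2, "y": 3}, 8),
    ({"x": 1, "y": -1}, -1),
]

A, b, augmented = build_matrices(equations, ["x", "y"])
```

Each row of the matrix corresponds to one equation and each column to one variable, so the entry in row i, column j is the coefficient of variable j in equation i.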

N-grams | Introduction to Text Analytics with R Part 6

N-grams includes specific coverage of: • Validate the effectiveness of TF-IDF in improving model accuracy. • Introduce the concept of N-grams as an extension to the bag-of-words model to allow for word ordering. • Discuss the trade-offs involved of N-grams and how Text Analytics suffers f

From playlist Introduction to Text Analytics with R

Computational Linear Algebra 10: QR Algorithm to find Eigenvalues, Implementing QR Decomposition

Course materials available here: https://github.com/fastai/numerical-linear-algebra We discuss the QR algorithm to find eigenvalues, and a few ways to implement the QR factorization. - QR algorithm - Linear algebra projections - Gram-Schmidt - Householder - Stability Examples Course overv

From playlist Computational Linear Algebra
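For readers who want a feel for the QR algorithm before watching, the following is a bare-bones sketch, not the course's implementation. It factors A = QR with classical Gram-Schmidt (one of the approaches the description lists), forms RQ, and repeats; for a symmetric matrix whose eigenvalues have distinct magnitudes the diagonal approaches the eigenvalues. It uses no shifts, and the helper names and the 2x2 test matrix are assumptions for illustration.

```python
import math

def transpose(M):
    return [list(row) for row in zip(*M)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def qr_gram_schmidt(A):
    """Factor A = QR using classical Gram-Schmidt on the columns of A."""
    q_cols = []
    for col in transpose(A):
        w = list(col)
        for q in q_cols:
            # Classical variant: project the original column onto q.
            c = sum(a * b for a, b in zip(col, q))
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(sum(x * x for x in w))
        q_cols.append([x / norm for x in w])
    Q = transpose(q_cols)
    R = matmul(q_cols, A)  # q_cols as rows is Q^T, so this is Q^T A
    return Q, R

def qr_algorithm(A, iterations=50):
    """Unshifted QR iteration; returns the diagonal of the final iterate."""
    for _ in range(iterations):
        Q, R = qr_gram_schmidt(A)
        A = matmul(R, Q)  # similar to the previous A, so eigenvalues are kept
    return [A[i][i] for i in range(len(A))]

# Hypothetical test matrix with eigenvalues 3 and 1.
eigs = qr_algorithm([[2.0, 1.0], [1.0, 2.0]])
```

Classical Gram-Schmidt is the least numerically stable of the options the lecture mentions; it is used here only because it is the shortest to write down.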

17. Orthogonal Matrices and Gram-Schmidt

MIT 18.06 Linear Algebra, Spring 2005 Instructor: Gilbert Strang View the complete course: http://ocw.mit.edu/18-06S05 YouTube Playlist: https://www.youtube.com/playlist?list=PLE7DDD91010BC51F8 17. Orthogonal Matrices and Gram-Schmidt License: Creative Commons BY-NC-SA More information a

From playlist MIT 18.06 Linear Algebra, Spring 2005

Lecture 11: Minimizing ‖x‖ Subject to Ax = b

MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 Instructor: Gilbert Strang View the complete course: https://ocw.mit.edu/18-065S18 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP63oMNUHXqIUcrkS2PivhN3k In this lecture, P

From playlist MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018

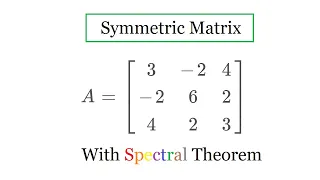

Diagonalizing a symmetric matrix. Orthogonal diagonalization. Finding D and P such that A = PDP^T. Finding the spectral decomposition of a matrix. Featuring the Spectral Theorem. Check out my Symmetric Matrices playlist: https://www.youtube.com/watch?v=MyziVYheXf8&list=PLJb1qAQIrmmD8boOz9a8

From playlist Symmetric Matrices
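As a small worked case of A = PDP^T, the sketch below diagonalizes a 2x2 symmetric matrix with a single rotation. This rotation-angle shortcut only works for the 2x2 case and is an assumption made to keep the example short; it is not necessarily the method shown in the video. The function name and test matrix are hypothetical.

```python
import math

def transpose(M):
    return [list(row) for row in zip(*M)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def diagonalize_symmetric_2x2(A):
    """Return (P, D) with P orthogonal, D diagonal, and A = P D P^T."""
    (a, b), (b2, d) = A
    if b != b2:
        raise ValueError("matrix must be symmetric")
    # Rotation angle that zeroes the off-diagonal entry of P^T A P.
    theta = 0.5 * math.atan2(2 * b, a - d)
    c, s = math.cos(theta), math.sin(theta)
    P = [[c, -s],
         [s, c]]
    # Diagonal entries of P^T A P, i.e. the eigenvalues.
    D = [[a * c * c + 2 * b * c * s + d * s * s, 0.0],
         [0.0, a * s * s - 2 * b * c * s + d * c * c]]
    return P, D

# Hypothetical test matrix with eigenvalues 3 and 1.
A = [[2.0, 1.0], [1.0, 2.0]]
P, D = diagonalize_symmetric_2x2(A)
reconstructed = matmul(matmul(P, D), transpose(P))
```

The columns of P are unit eigenvectors of A, and because P is orthogonal its inverse is simply its transpose, which is what makes the factorization A = PDP^T possible for symmetric matrices.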

Stanford ENGR108: Introduction to Applied Linear Algebra | 2020 | Lecture 29-VMLS mtrx pwrs & QR fac

Professor Stephen Boyd Samsung Professor in the School of Engineering Director of the Information Systems Laboratory To follow along with the course schedule and syllabus, visit: https://web.stanford.edu/class/engr108/ To view all online courses and programs offered by Stanford, visit:

From playlist Stanford ENGR108: Introduction to Applied Linear Algebra —Vectors, Matrices, and Least Squares

VSM, LSA, & SVD | Introduction to Text Analytics with R Part 7

Part 7 of this video series includes specific coverage of: – The trade-offs of expanding the text analytics feature space with n-grams. – How bag-of-words representations map to the vector space model (VSM). – Usage of the dot product between document vectors as a proxy for correlation. –

From playlist Introduction to Text Analytics with R

Gram-Schmidt Process: Find an Orthogonal Basis (3 Vectors in R3)

This video explains how to determine an orthogonal basis given a basis for a subspace.

From playlist Orthogonal and Orthonormal Sets of Vectors

This video defines elementary matrices and then provides several examples of determining if a given matrix is an elementary matrix. Site: http://mathispower4u.com Blog: http://mathispower4u.wordpress.com

From playlist Augmented Matrices
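A possible way to automate the check described in this entry: an elementary matrix is the identity after exactly one row operation (swap two rows, scale a row by a nonzero constant, or add a multiple of one row to another). The sketch below tests for each case. The function name `is_elementary` is hypothetical, and treating the identity itself as not elementary is a convention assumed here; some texts count it as a scaling by 1.

```python
def is_elementary(M):
    """True if M is the identity changed by exactly one row operation."""
    n = len(M)
    if n == 0 or any(len(row) != n for row in M):
        return False
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    # Positions where M differs from the identity.
    diff = [(i, j) for i in range(n) for j in range(n)
            if M[i][j] != identity[i][j]]
    if len(diff) == 1:
        i, j = diff[0]
        if i == j:
            # Row scaling: the new diagonal entry must be nonzero.
            return M[i][j] != 0
        # Row replacement: M[i][j] times row j was added to row i.
        return True
    if len(diff) == 4:
        rows = sorted({i for i, _ in diff})
        if len(rows) == 2:
            # Row swap: compare against the identity with those rows exchanged.
            i, j = rows
            swapped = [row[:] for row in identity]
            swapped[i], swapped[j] = swapped[j], swapped[i]
            return M == swapped
    return False

# Hypothetical examples.
scaling = is_elementary([[1, 0], [0, 3]])
swap = is_elementary([[0, 1, 0], [1, 0, 0], [0, 0, 1]])
replacement = is_elementary([[1, 0], [2, 1]])
two_operations = is_elementary([[2, 0], [0, 3]])
```

The last example fails the check because it needs two separate row scalings to reach it from the identity.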

Lec 14 | MIT 18.085 Computational Science and Engineering I, Fall 2008

Lecture 14: Exam Review License: Creative Commons BY-NC-SA More information at http://ocw.mit.edu/terms More courses at http://ocw.mit.edu

From playlist MIT 18.085 Computational Science & Engineering I, Fall 2008