The Generalized Likelihood Ratio Test

http://AllSignalProcessing.com for more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. There is no universally optimal test strategy for composite hypotheses (unknown parameters in the pdfs). The generalized likelihood ratio test (GLRT

From playlist Estimation and Detection Theory

Uncertainty Estimation via (Multi) Calibration

A Google TechTalk, presented by Aaron Roth, 2020/10/02 Paper Title: "Moment Multi-calibration and Uncertainty Estimation" ABSTRACT: We show how to achieve multi-calibrated estimators not just for means, but also for variances and other higher moments. Informally, this means that we can fi

From playlist Differential Privacy for ML

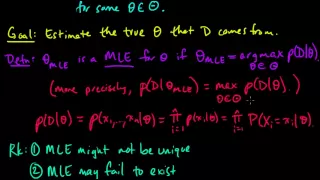

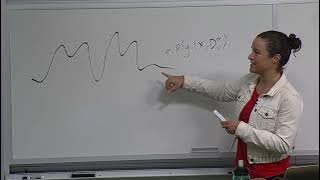

(ML 4.1) Maximum Likelihood Estimation (MLE) (part 1)

Definition of maximum likelihood estimates (MLEs), and a discussion of pros/cons. A playlist of these Machine Learning videos is available here: http://www.youtube.com/my_playlists?p=D0F06AA0D2E8FFBA

From playlist Machine Learning

Percent Uncertainty In Measurement

This video tutorial provides a basic introduction to percent uncertainty. It also discusses topics such as estimated uncertainty, absolute uncertainty, and relative uncertainty. This video provides an example explaining how to calculate the percent uncertainty in the volume of the sphe

From playlist New Physics Video Playlist

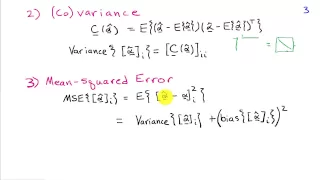

Introduction to Estimation Theory

http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. General notion of estimating a parameter and measures of estimation quality including bias, variance, and mean-squared error.

From playlist Estimation and Detection Theory

(ML 4.2) Maximum Likelihood Estimation (MLE) (part 2)

Definition of maximum likelihood estimates (MLEs), and a discussion of pros/cons. A playlist of these Machine Learning videos is available here: http://www.youtube.com/my_playlists?p=D0F06AA0D2E8FFBA

From playlist Machine Learning

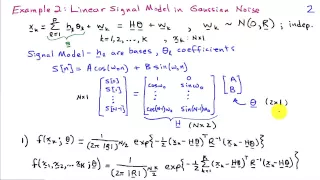

Maximum Likelihood Estimation Examples

http://AllSignalProcessing.com for more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Three examples of applying the maximum likelihood criterion to find an estimator: 1) Mean and variance of an iid Gaussian, 2) Linear signal model in

From playlist Estimation and Detection Theory

Marcelo Pereyra: Bayesian inference and mathematical imaging - Lecture 3

Bayesian inference and mathematical imaging - Part 3: probability and convex optimisation Abstract: This course presents an overview of modern Bayesian strategies for solving imaging inverse problems. We will start by introducing the Bayesian statistical decision theory framework underpin

From playlist Probability and Statistics

Sylvia Biscoveanu - Power Spectral Density Uncertainty and Gravitational-Wave Parameter Estimation

Recorded 19 November 2021. Sylvia Biscoveanu of the Massachusetts Institute of Technology presents "The Effect of Power Spectral Density Uncertainty on Gravitational-Wave Parameter Estimation" at IPAM's Workshop III: Source inference and parameter estimation in Gravitational Wave Astronomy

From playlist Workshop: Source inference and parameter estimation in Gravitational Wave Astronomy

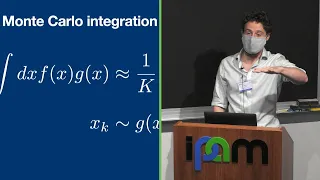

Colm Talbot - Adventures in practical population inference - IPAM at UCLA

Recorded 16 November 2021. Colm Talbot of the Massachusetts Institute of Technology presents "Adventures in practical population inference" at IPAM's Workshop III: Source inference and parameter estimation in Gravitational Wave Astronomy. Abstract: Population inference provides our most

From playlist Workshop: Source inference and parameter estimation in Gravitational Wave Astronomy

Stanford CS330: Deep Multi-task and Meta Learning | 2020 | Lecture 8 - Bayesian Meta-Learning

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai To follow along with the course, visit: https://cs330.stanford.edu/ To view all online courses and programs offered by Stanford, visit: http://online.stanford.

From playlist Stanford CS330: Deep Multi-task and Meta Learning | Autumn 2020

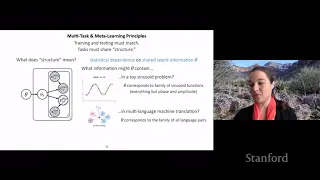

Stanford CS330: Multi-Task and Meta-Learning, 2019 | Lecture 5 - Bayesian Meta-Learning

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Assistant Professor Chelsea Finn, Stanford University http://cs330.stanford.edu/

From playlist Stanford CS330: Deep Multi-Task and Meta Learning

Stanford CS330 Deep Multi-Task & Meta Learning - Bayesian Meta-Learning | 2022 | Lecture 12

For more information about Stanford's Artificial Intelligence programs visit: https://stanford.io/ai To follow along with the course, visit: https://cs330.stanford.edu/ To view all online courses and programs offered by Stanford, visit: http://online.stanford.edu Chelsea Finn Computer

From playlist Stanford CS330: Deep Multi-Task and Meta Learning | Autumn 2022

On the Use of (Linear) Surrogate Models for Bayesian Inverse Problems

42nd Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk Date: Wednesday, April 13, 10:00am Eastern Speaker: Ru Nicholson, University of Auckland Abstract: In this talk we consider the use of surrogate (forward) models to efficiently solve Bayesian inverse pr

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

Maximum likelihood estimation of GARCH parameters (FRM T2-26)

[My xls is here https://trtl.bz/2NlLn7d] GARCH(1,1) is a popular approach to estimating volatility, but its disadvantage (compared to STDDEV or EWMA) is that you need to fit three parameters. Maximum likelihood estimation, MLE, is an immensely useful statistical approach that can be used

From playlist Quantitative Analysis (FRM Topic 2)

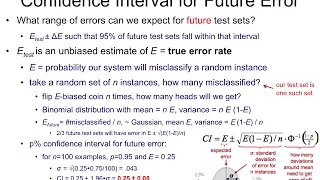

From playlist COMP0168 (2020/21)

Nando de Freitas: "An Informal Mathematical Tour of Feature Learning, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning "An Informal Mathematical Tour of Feature Learning, Pt. 2" Nando de Freitas, University of British Columbia Institute for Pure and Applied Mathematics, UCLA July 26, 2012 For more information: https://www.ipam.ucla.edu/program

From playlist GSS2012: Deep Learning, Feature Learning