Solving an equation with multiple decimals

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist How to Solve One Step Equations with Decimals

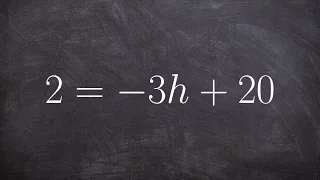

Solve for the zeros of a linear equation

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations

Solving an equation with variable on the same side

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with Two Variables

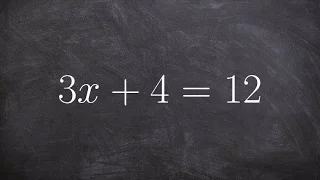

Learning to solve a two step equation with inverse operations 2y+5=19

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations
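
As a worked illustration of the inverse-operations approach described above, the equation named in the title, 2y + 5 = 19, can be solved in two steps. The description is cut off before the second step, so the division step shown here is an assumption based on the usual method.

```latex
\begin{align*}
2y + 5 &= 19 \\
2y + 5 - 5 &= 19 - 5 && \text{(subtract 5 from both sides)} \\
2y &= 14 \\
\frac{2y}{2} &= \frac{14}{2} && \text{(divide both sides by 2)} \\
y &= 7
\end{align*}
```

Substituting back gives 2(7) + 5 = 19, which confirms the solution.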

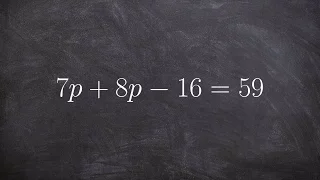

Solving an equation by combining like terms

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with Two Variables

Nonlinear algebra, Lecture 11: "Semidefinite Programming", by Bernd Sturmfels

This is the eleventh lecture in the IMPRS Ringvorlesung, the advanced graduate course at the Max Planck Institute for Mathematics in the Sciences.

From playlist IMPRS Ringvorlesung - Introduction to Nonlinear Algebra

Gerard Cornuejols: Dyadic linear programming

A finite vector is dyadic if each of its entries is a dyadic rational number, i.e. if it has an exact floating point representation. We study the problem of finding a dyadic optimal solution to a linear program, if one exists. This is joint work with Ahmad Abdi, Bertrand Guenin and Levent

From playlist Workshop: Continuous approaches to discrete optimization
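
The definition of a dyadic vector in the abstract above can be illustrated with a small check. This is a minimal sketch, not taken from the talk: the helper names `is_dyadic` and `is_dyadic_vector` are hypothetical, and the code tests only the mathematical property (denominator is a power of two), ignoring the finite precision and exponent range of real floating-point formats.

```python
from fractions import Fraction


def is_dyadic(q: Fraction) -> bool:
    """Return True if q = a / 2**k for some integer a and k >= 0."""
    d = q.denominator  # Fraction keeps this positive and in lowest terms
    # A positive integer is a power of two iff it has exactly one bit set.
    return (d & (d - 1)) == 0


def is_dyadic_vector(v) -> bool:
    """Return True if every entry of the finite vector v is dyadic."""
    return all(is_dyadic(Fraction(x)) for x in v)


print(is_dyadic(Fraction(3, 8)))   # 3/8 = 3 / 2**3
print(is_dyadic(Fraction(1, 3)))   # 3 is not a power of two
print(is_dyadic(Fraction(1, 10)))  # the exact rational 1/10
print(is_dyadic(Fraction(0.1)))    # the float nearest to 0.1, converted exactly
```

The last two calls show the difference between the rational number 1/10, which is not dyadic, and the Python float `0.1`, which is stored as a nearby dyadic rational.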

Francisco Criado: The dual 1-fair packing problem and applications to linear programming

Proportional fairness (also known as 1-fairness) is a fairness scheme for the resource allocation problem introduced by Nash in 1950. Under this scheme, an allocation for two players is unfair if a small transfer of resources between two players results in a proportional increase in the ut

From playlist Workshop: Tropical geometry and the geometry of linear programming

Delayed column generation in large scale integer optimization problems - Professor Raphael Hauser

Mixed linear integer programming problems play an important role in many applications of decision mathematics, including data science. Algorithms typically solve such problems via a sequence of linear programming approximations and a divide-and-conquer approach (branch-and-bound, branch-an

From playlist Data science classes

Association schemes and codes I: The Delsarte linear program - Leonardo Coregliano

Computer Science/Discrete Mathematics Seminar II Topic: Association schemes and codes I: The Delsarte linear program Speaker: Leonardo Coregliano Affiliation: Member, School of Mathematics Date: May 10, 2022 One of the central problems of coding theory is to determine the trade-off betw

From playlist Mathematics

Aaron Sidford: Introduction to interior point methods for discrete optimization, lecture II

Over the past decade interior point methods (IPMs) have played a pivotal role in multiple algorithmic advances. IPMs have been leveraged to obtain improved running times for solving a growing list of both continuous and combinatorial optimization problems including maximum flow, bipartit

From playlist Summer School on modern directions in discrete optimization

Lecture 8 | Convex Optimization I (Stanford)

Professor Stephen Boyd, of the Stanford University Electrical Engineering department, lectures on duality in the realm of electrical engineering and how it is utilized in convex optimization for the course, Convex Optimization I (EE 364A). Convex Optimization I concentrates on recognizi

From playlist Lecture Collection | Convex Optimization

Lec 29 | MIT 18.085 Computational Science and Engineering I

Applications in signal and image processing: compression. A more recent version of this course is available at http://ocw.mit.edu/18-085f08. License: Creative Commons BY-NC-SA. More information at http://ocw.mit.edu/terms. More courses at http://ocw.mit.edu

From playlist MIT 18.085 Computational Science & Engineering I, Fall 2007

Support Vector Machines (2): Dual & soft-margin forms

Lagrangian optimization for the SVM objective; dual form of the SVM; soft-margin SVM formulation; hinge loss interpretation

From playlist cs273a

Decision Making and Inference Under Model Misspecification by Jose Blanchet

PROGRAM: ADVANCES IN APPLIED PROBABILITY ORGANIZERS: Vivek Borkar, Sandeep Juneja, Kavita Ramanan, Devavrat Shah, and Piyush Srivastava DATE & TIME: 05 August 2019 to 17 August 2019 VENUE: Ramanujan Lecture Hall, ICTS Bangalore Applied probability has seen a revolutionary growth in resear

From playlist Advances in Applied Probability 2019
