(PP 6.1) Multivariate Gaussian - definition

Introduction to the multivariate Gaussian (or multivariate Normal) distribution.

From playlist Probability Theory
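
The density in this definition, p(x) = (2π)^(-d/2) |Σ|^(-1/2) exp(-½ (x − μ)ᵀ Σ⁻¹ (x − μ)), can be evaluated in a few lines of plain Python. This is a minimal sketch, not code from the video: `cholesky` and `mvn_pdf` are hypothetical helper names, and Σ is assumed to be symmetric positive definite. It uses a Cholesky factor instead of an explicit matrix inverse.

```python
import math

def cholesky(sigma):
    """Lower-triangular L with L * L^T == sigma (sigma assumed symmetric positive definite)."""
    d = len(sigma)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(sigma[i][i] - s)
            else:
                L[i][j] = (sigma[i][j] - s) / L[j][j]
    return L

def mvn_pdf(x, mu, sigma):
    """Density of the d-dimensional Gaussian N(mu, sigma) evaluated at x."""
    d = len(mu)
    L = cholesky(sigma)
    diff = [xi - mi for xi, mi in zip(x, mu)]
    # Forward substitution solves L y = (x - mu); then (x - mu)^T sigma^{-1} (x - mu) = y^T y.
    y = [0.0] * d
    for i in range(d):
        y[i] = (diff[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i]
    quad = sum(v * v for v in y)
    # log|sigma| = 2 * sum of log of the diagonal of L.
    log_det = 2.0 * sum(math.log(L[i][i]) for i in range(d))
    return math.exp(-0.5 * (d * math.log(2 * math.pi) + log_det + quad))
```

For example, `mvn_pdf([0.0], [0.0], [[1.0]])` gives 1/√(2π) ≈ 0.3989, the one-dimensional standard normal density at zero.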

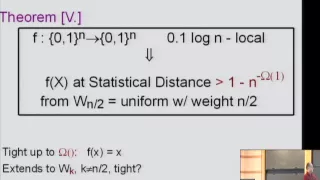

The Complexity of Distributions - Emanuele Viola

Emanuele Viola, Northeastern University, March 5, 2012. Complexity theory, with some notable exceptions, typically studies the complexity of computing a function h(x) of a *given* input x. We advocate the study of the complexity of generating (or sampling) the output distribution h(x) for

From playlist Mathematics

(ML 7.7.A1) Dirichlet distribution

Definition of the Dirichlet distribution, what it looks like, intuition for what the parameters control, and some statistics: mean, mode, and variance.

From playlist Machine Learning
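
The statistics listed here have standard closed forms for a concentration vector α with α₀ = Σᵢ αᵢ and K components: mean αᵢ/α₀, mode (αᵢ − 1)/(α₀ − K) when every αᵢ > 1, and variance αᵢ(α₀ − αᵢ)/(α₀²(α₀ + 1)). A minimal sketch, not taken from the video; `dirichlet_stats` and `sample_dirichlet` are hypothetical names, and the sampler uses the usual construction of normalizing independent Gamma(αᵢ, 1) draws.

```python
import random

def dirichlet_stats(alpha):
    """Mean, mode (or None), and per-component variance of Dirichlet(alpha)."""
    a0 = sum(alpha)
    k = len(alpha)
    mean = [a / a0 for a in alpha]
    # This mode formula is valid only when every alpha_i > 1; otherwise return None.
    mode = [(a - 1) / (a0 - k) for a in alpha] if all(a > 1 for a in alpha) else None
    var = [a * (a0 - a) / (a0 ** 2 * (a0 + 1)) for a in alpha]
    return mean, mode, var

def sample_dirichlet(alpha, rng):
    """One draw from Dirichlet(alpha): normalize independent Gamma(alpha_i, 1) variates."""
    g = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(g)
    return [v / total for v in g]

mean, mode, var = dirichlet_stats([2.0, 3.0, 5.0])
print(mean, mode, var)
```

Larger α₀ concentrates draws around the mean (the variance shrinks like 1/(α₀ + 1)), while the ratios αᵢ/α₀ set where that mean sits on the simplex.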

Distributions - Statistical Inference

In this video I talk about distributions and how to visualize them, and I also give a concrete definition of a distribution.

From playlist Statistical Inference

(ML 3.7) The Big Picture (part 3)

How the core concepts and methods in machine learning arise naturally in the course of solving the decision theory problem. A playlist of these Machine Learning videos is available here: http://www.youtube.com/my_playlists?p=D0F06AA0D2E8FFBA

From playlist Machine Learning

Breaking the Communication-Privacy-Accuracy Trilemma

A Google TechTalk, 2020/7/29, presented by Ayfer Ozgur Aydin, Stanford University. Abstract: Two major challenges in distributed learning and estimation are 1) preserving the privacy of the local samples; and 2) communicating them efficiently to a central server, while achieving high accura

From playlist 2020 Google Workshop on Federated Learning and Analytics

What is a Sampling Distribution?

Intro to sampling distributions. What is a sampling distribution? What is the mean of the sampling distribution of the mean? Check out my e-book, Sampling in Statistics, which covers everything you need to know to find samples with more than 20 different techniques: https://prof-essa.creat

From playlist Probability Distributions
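
A sampling distribution can be made concrete by simulation: draw many samples of size n, record each sample mean, and look at the spread of those means. A minimal sketch, assuming an exponential population with mean 1 and standard deviation 1 (an arbitrary choice, not from the video); `sampling_distribution_of_mean` and its `population_draw` callback are hypothetical names. The mean of the sample means should sit near the population mean, and their standard deviation near σ/√n.

```python
import random
import statistics

def sampling_distribution_of_mean(population_draw, n, num_samples, seed=0):
    """Return the means of num_samples independent samples of size n.

    population_draw(rng) is a hypothetical callback returning one draw from the population.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(num_samples):
        sample = [population_draw(rng) for _ in range(n)]
        means.append(statistics.fmean(sample))
    return means

# Exponential population with mean 1 and standard deviation 1.
means = sampling_distribution_of_mean(lambda rng: rng.expovariate(1.0), n=25, num_samples=5000)
print("mean of sample means:", round(statistics.fmean(means), 3))  # near 1.0
print("std of sample means:", round(statistics.stdev(means), 3))   # near 1/sqrt(25) = 0.2
```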

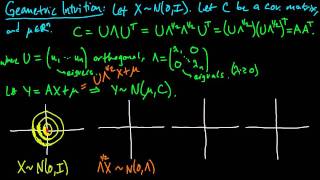

(PP 6.6) Geometric intuition for the multivariate Gaussian (part 1)

How to visualize the effect of the eigenvalues (scaling), eigenvectors (rotation), and mean vector (shift) on the density of a multivariate Gaussian.

From playlist Probability Theory
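
The scale/rotate/shift picture corresponds to writing the covariance as Σ = R Λ Rᵀ and generating samples as x = μ + R Λ^(1/2) z with z standard normal. A minimal two-dimensional sketch, assuming eigenvalues λ₁, λ₂ > 0 and a rotation angle θ in radians; `covariance_from_geometry` and `sample_2d_gaussian` are hypothetical names, not code from the video.

```python
import math
import random

def covariance_from_geometry(theta, lam1, lam2):
    """Sigma = R diag(lam1, lam2) R^T, where R rotates by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    off = (lam1 - lam2) * c * s
    return [[lam1 * c * c + lam2 * s * s, off],
            [off, lam1 * s * s + lam2 * c * c]]

def sample_2d_gaussian(mu, theta, lam1, lam2, rng):
    """One draw x = mu + R diag(sqrt(lam1), sqrt(lam2)) z with z ~ N(0, I)."""
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    # Scale: stretch each axis by the square root of its eigenvalue.
    u1, u2 = math.sqrt(lam1) * z1, math.sqrt(lam2) * z2
    # Rotate: the columns of R are the eigenvectors of Sigma.
    c, s = math.cos(theta), math.sin(theta)
    r1, r2 = c * u1 - s * u2, s * u1 + c * u2
    # Shift: move the center of the density to the mean vector.
    return (mu[0] + r1, mu[1] + r2)

rng = random.Random(0)
pts = [sample_2d_gaussian((1.0, -2.0), math.pi / 6, 4.0, 1.0, rng) for _ in range(20000)]
print(covariance_from_geometry(math.pi / 6, 4.0, 1.0))  # approx [[3.25, 1.299], [1.299, 1.75]]
```

Setting `lam1 == lam2` makes θ irrelevant (Σ becomes a multiple of the identity and the contours are circles), which shows that the eigenvectors only matter when the eigenvalues differ.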

Understanding the Central Limit Theorem

From playlist Unit 7 Probability C: Sampling Distributions & Simulation

Benjamin Guedj: On generalisation and learning

A (condensed) primer on PAC-Bayes, followed by News from the PAC-Bayes frontline. LMS Computer Science Colloquium 2021

From playlist LMS Computer Science Colloquium Nov 2021

Probability & Information Theory — Subject 5 of Machine Learning Foundations

Welcome to my course on Probability and Information Theory, which is part of my broader "Machine Learning Foundations" curriculum. This video is an orientation to the curriculum. There are eight subjects covered comprehensively in the ML Found

From playlist Probability for Machine Learning

Statistical mechanics of deep learning by Surya Ganguli

Statistical Physics Methods in Machine Learning. Date: 26 December 2017 to 30 December 2017. Venue: Ramanujan Lecture Hall, ICTS, Bengaluru. The theme of this Discussion Meeting is the analysis of distributed/networked algorithms in machine learning and theoretical computer science in the

From playlist Statistical Physics Methods in Machine Learning

Statistical Learning Theory for Modern Machine Learning - John Shawe-Taylor

Seminar on Theoretical Machine Learning. Topic: Statistical Learning Theory for Modern Machine Learning. Speaker: John Shawe-Taylor. Affiliation: University College London. Date: August 11, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Learning probability distributions; What can, What can't be done - Shai Ben-David

Seminar on Theoretical Machine Learning. Topic: Learning probability distributions; What can, What can't be done. Speaker: Shai Ben-David. Affiliation: University of Waterloo. Date: May 7, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

A New Physics-Inspired Theory of Deep Learning | Optimal initialization of Neural Nets

A special video about recent exciting developments in mathematical deep learning! Make sure to check out the video if you want a quick visual summary of the contents of the book "The Principles of Deep Learning Theory" https://deeplearningtheory.com/. Sponsor: Aleph Alpha https://app.al

From playlist Explained AI/ML in your Coffee Break

Phiala Shanahan: "Machine learning for lattice field theory"

Machine Learning for Physics and the Physics of Learning 2019, Workshop I: From Passive to Active: Generative and Reinforcement Learning with Physics. "Machine learning for lattice field theory", Phiala Shanahan, Massachusetts Institute of Technology (MIT). Abstract: I will discuss opportuni

From playlist Machine Learning for Physics and the Physics of Learning 2019

Seminar 9: Surya Ganguli - Statistical Physics of Deep Learning

MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015. View the complete course: https://ocw.mit.edu/RES-9-003SU15 Instructor: Surya Ganguli. Describes how the application of methods from statistical physics to the analysis of high-dimensional data can provide theoretical insi

From playlist MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015

Tightening information-theoretic generalization bounds with data-dependent estimate... - Daniel Roy

Workshop on Theory of Deep Learning: Where next? Topic: Tightening information-theoretic generalization bounds with data-dependent estimates with an application to SGLD. Speaker: Daniel Roy. Affiliation: University of Toronto. Date: October 15, 2019. For more videos, please visit http://video

From playlist Mathematics

ICML 2018: Tutorial Session: Toward the Theoretical Understanding of Deep Learning

Watch this video with an AI-generated Table of Contents (ToC), Phrase Cloud, and In-video Search here: https://videos.videoken.com/index.php/videos/icml-2018-tutorial-session-toward-the-theoretical-understanding-of-deep-learning/

From playlist ML @ Scale

(PP 6.7) Geometric intuition for the multivariate Gaussian (part 2)

How to visualize the effect of the eigenvalues (scaling), eigenvectors (rotation), and mean vector (shift) on the density of a multivariate Gaussian.

From playlist Probability Theory