The code is accessible at https://github.com/sepinouda/Machine-Learning

From playlist Machine Learning Course

Bala Krishnamoorthy (10/20/20): Dimension reduction: An overview

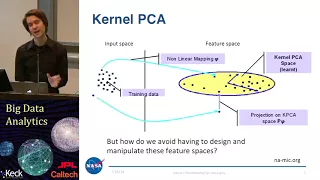

Abstract: We present a broad overview of various dimension reduction techniques, also referred to as manifold learning. We review linear dimension reduction techniques, e.g., principa

From playlist Tutorials

How can we mitigate the curse of dimensionality?

#machinelearning #shorts #datascience

From playlist Quick Machine Learning Concepts

The concept of “dimension” in measured signals

This is part of an online course on covariance-based dimension-reduction and source-separation methods for multivariate data. The course is appropriate as an intermediate applied linear algebra course, or as a practical tutorial on multivariate neuroscience data analysis. More info here:

From playlist Dimension reduction and source separation

Dimensionality Reduction | Introduction to Data Mining part 14

In this Data Mining Fundamentals tutorial, we discuss the curse of dimensionality and the purpose of dimensionality reduction for data preprocessing. When dimensionality increases, data becomes increasingly sparse in the space that it occupies. Dimensionality reduction will help you avoid

From playlist Introduction to Data Mining
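The sparsity claim in the entry above can be illustrated with a small calculation. The sketch below is not taken from the tutorial; the function names `inscribed_ball_fraction` and `edge_for_fraction` are hypothetical, and it assumes data spread uniformly over the unit hypercube. It shows two standard consequences of growing dimension: the ball inscribed in the cube covers a vanishing share of its volume, and a sub-cube must span nearly the full range of every feature to capture even 10% of the volume.

```python
import math


def inscribed_ball_fraction(d: int) -> float:
    """Fraction of the unit hypercube [0, 1]^d covered by the inscribed ball (radius 0.5)."""
    # Volume of a d-ball of radius r is pi^(d/2) / Gamma(d/2 + 1) * r^d; the cube has volume 1.
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1) * 0.5 ** d


def edge_for_fraction(p: float, d: int) -> float:
    """Edge length of a sub-cube that holds a fraction p of the unit hypercube's volume."""
    # A sub-cube with edge e has volume e^d, so e = p^(1/d).
    return p ** (1.0 / d)


if __name__ == "__main__":
    for d in (1, 2, 10, 100):
        ball = inscribed_ball_fraction(d)
        edge = edge_for_fraction(0.1, d)
        print(f"d={d:>3}  ball fraction={ball:.3e}  edge for 10% of volume={edge:.3f}")
```

Under the uniform assumption, a neighborhood that holds a fixed share of the data stops being "local" as the dimension grows, which is one way to read the statement that data becomes sparse in the space it occupies.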

UMAP explained | The best dimensionality reduction?

UMAP explained! The great dimensionality reduction algorithm in one video with a lot of visualizations and a little code. Uniform Manifold Approximation and Projection for all! 📺 PCA video: https://youtu.be/3AUfWllnO7c 📺 Curse of dimensionality video: https://youtu.be/4v7ngaiFdp4 💻 Babyp

From playlist Dimensionality reduction. The basics.

PCA 2: dimensionality reduction

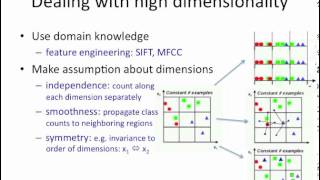

Full lecture: http://bit.ly/PCA-alg We can deal with high dimensionality in three ways: (1) use domain knowledge if available, (2) make an assumption that makes parameter estimation easier, or (3) reduce the dimensionality of the data. Dimensionality reduction can be done via feature sele

From playlist Principal Component Analysis

DDPS | Model reduction with adaptive enrichment for large scale PDE constrained optimization

Talk Abstract Projection based model order reduction has become a mature technique for simulation of large classes of parameterized systems. However, several challenges remain for problems where the solution manifold of the parameterized system cannot be well approximated by linear subspa

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

Assaf Naor: Coarse dimension reduction

Recorded during the thematic meeting "Non Linear Functional Analysis" on March 7, 2018 at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Ma

From playlist Analysis and its Applications

08 Machine Learning: Dimensionality Reduction

A lecture on dimensionality reduction through feature selection and feature projection. Includes curse of dimensionality and feature selection review from lecture 5 and summary of methods for feature projection.

From playlist Machine Learning

DDPS | Neural Galerkin schemes with active learning for high-dimensional evolution equations

Title: Neural Galerkin schemes with active learning for high-dimensional evolution equations Speaker: Benjamin Peherstorfer (New York University) Description: Fitting parameters of machine learning models such as deep networks typically requires accurately estimating the population loss

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

Dimensionality Reduction : Data Science Concepts

Why would we want to reduce the number of features? And how do we do it? PCA Video: https://www.youtube.com/watch?v=dhK8nbtii6I LASSO Video: https://www.youtube.com/watch?v=jbwSCwoT51M My Patreon: https://www.patreon.com/user?u=49277905

From playlist Data Science Concepts

The Drinfeld-Sokolov reduction of admissible representations of affine Lie algebras - Gurbir Dhillon

Workshop on Representation Theory and Geometry. Topic: The Drinfeld-Sokolov reduction of admissible representations of affine Lie algebras. Speaker: Gurbir Dhillon. Affiliation: Yale University. Date: April 03, 2021. For more videos, please visit http://video.ias.edu

From playlist Mathematics