
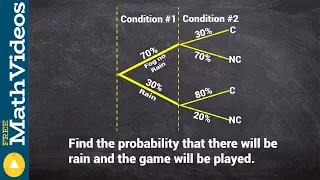

Conditional mutual information

In probability theory, and in information theory in particular, the conditional mutual information is, in its most basic form, the expected value of the mutual information of two random variables given the value of a third (Wikipedia).
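To make the definition concrete, here is a minimal sketch for discrete random variables. It assumes the joint distribution of (X, Y, Z) is supplied as a dictionary mapping `(x, y, z)` tuples to probabilities. The function name `conditional_mutual_information`, the dictionary layout, and the `base` parameter are illustrative choices, not something taken from the source. The code evaluates the standard sum over p(x, y, z) · log[p(z) p(x, y, z) / (p(x, z) p(y, z))], which equals the average over z of the mutual information between X and Y given Z = z.

```python
import math
from collections import defaultdict


def conditional_mutual_information(joint, base=2.0):
    """Return I(X; Y | Z) for a joint pmf given as {(x, y, z): probability}.

    The result is in bits when base=2.0 (an assumed default).
    """
    # Marginals needed by the formula: p(z), p(x, z), p(y, z).
    p_z = defaultdict(float)
    p_xz = defaultdict(float)
    p_yz = defaultdict(float)
    for (x, y, z), p in joint.items():
        p_z[z] += p
        p_xz[(x, z)] += p
        p_yz[(y, z)] += p

    total = 0.0
    for (x, y, z), p in joint.items():
        if p > 0.0:  # zero-probability outcomes contribute nothing
            ratio = p_z[z] * p / (p_xz[(x, z)] * p_yz[(y, z)])
            total += p * math.log(ratio, base)
    return total


# Hypothetical example: X and Y are independent fair bits and Z = X XOR Y.
# X and Y share no information on their own, but once Z is known,
# either one determines the other.
xor_joint = {(x, y, x ^ y): 0.25 for x in (0, 1) for y in (0, 1)}
print(conditional_mutual_information(xor_joint))  # 1.0 (one bit)
```

The XOR case is a common illustration of why conditioning matters: the unconditional mutual information of X and Y is zero, yet the conditional mutual information given Z is one full bit.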