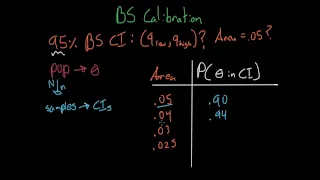

Bootstrap Calibration - Statistical Inference

In this video I introduce you to bootstrap calibration, a technique for improving your confidence intervals, and explain how and why it is so useful.

From playlist Statistical Inference

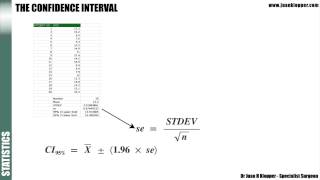

Statistics 5_1 Confidence Intervals

In this lecture I explain the meaning of a confidence interval and look at the equation used to calculate it.

From playlist Medical Statistics

More Help with Expected Value of Discrete Random Variables

Additional insight into calculating the mean [expected value] of joint discrete random variables

From playlist Unit 6 Probability B: Random Variables & Binomial Probability & Counting Techniques

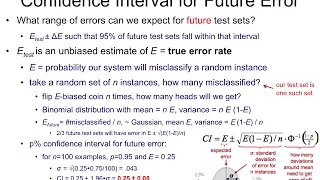

Uncertainty Estimation via (Multi) Calibration

A Google TechTalk, presented by Aaron Roth, 2020/10/02 Paper Title: "Moment Multi-calibration and Uncertainty Estimation" ABSTRACT: We show how to achieve multi-calibrated estimators not just for means, but also for variances and other higher moments. Informally, this means that we can fi

From playlist Differential Privacy for ML

(PP 6.1) Multivariate Gaussian - definition

Introduction to the multivariate Gaussian (or multivariate Normal) distribution.

From playlist Probability Theory

EEVblog #420 - What Is Calibration?

Peter Daly, metrologist at Agilent's world-leading standards & calibration laboratory in Melbourne, explains what calibration is. Forum Topic: http://www.eevblog.com/forum/blog/eevblog-420-what-is-calibration/ EEVblog Main Web Site: http://www.eevblog.com EEVblog Amazon Store: http://astor

From playlist Calibration & Standards

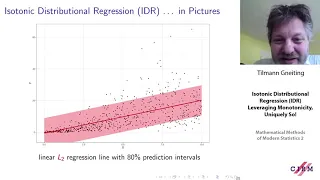

Tilmann Gneiting: Isotonic Distributional Regression (IDR) - Leveraging Monotonicity, Uniquely So!

CIRM VIRTUAL EVENT Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 02, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

How to Keep Your Students Engaged in Online Learning

32% of students have said they are unwilling to enroll in courses this fall because of their dislike of online learning. In this webinar, Kritik, an OpenStax Ally, shared how peer assessment is used by leading educators around the world to increase the engagement rates of their students in

From playlist OpenStax webinars

Inconsistency in Conference Peer Review: Revisiting the 2014 NeurIPS Experiment (Paper Explained)

#neurips #peerreview #nips The peer-review system at Machine Learning conferences has come under much criticism over the last years. One major driver was the infamous 2014 NeurIPS experiment, where a subset of papers were given to two different sets of reviewers. This experiment showed th

From playlist Papers Explained

Testing for Glucose Required Practical | Revision for Biology A-level

I want to help you achieve the grades you (and I) know you are capable of; these grades are the stepping stone to your future. Even if you don't want to study science or maths further, the grades you get now will open doors in the future. Tutoring - We can match you with an experienced t

From playlist AQA A-Level Biology | Ultimate Revision Playlist

Z Test of Proportion Using Calculator

Using a TI calculator to perform a Z Test on an unknown population proportion [a 1 Proportion Z test or 1Prop Z Test]

From playlist Unit 8: Hypothesis Tests & Confidence Intervals for Single Means & for Single Proportions

Patient-Reported Outcomes for Public Health and Clinical Research: The PROMIS Initiative

A public health seminar recorded on February 7, 2011 featuring Dr. David Cella, Ph.D. Cella is a Professor and Chair of the Department of Medical Social Sciences, Northwestern University Feinberg School of Medicine.

From playlist Graduate Seminar in Public Health 2010-2011

Katrien Antonio: Pricing and reserving with an occurrence and development model for non-life...

CONFERENCE Recorded during the thematic meeting "MLISTRAL" on September 29, 2022 at the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathem

From playlist Probability and Statistics

Patricia Schmidt - Introduction to Systematics - IPAM at UCLA

Recorded 19 November 2021. Patricia Schmidt of the University of Birmingham presents "Introduction to Systematics" at IPAM's Workshop III: Source inference and parameter estimation in Gravitational Wave Astronomy. Learn more online at: http://www.ipam.ucla.edu/programs/workshops/workshop-i

From playlist Workshop: Source inference and parameter estimation in Gravitational Wave Astronomy

Ferrier: Modeling Global Change in Compositional Diversity

Simon Ferrier explains three major biodiversity assessment challenges and provides his methods for tackling these challenges.

From playlist Spatial Biodiversity Science and Conservation

Caltech Science Exchange Conversations on COVID-19: Wearable Technology for Temperature Monitoring

Chiara Daraio, Caltech’s G. Bradford Jones Professor of Mechanical Engineering and Applied Physics, talks with Caltech content and media strategist Robert Perkins about how her work contributes to new wearable medical devices, and about a new algorithm that could help people predict their

From playlist Caltech Science Exchange

Deep Uncertainty of Climate Sensitivity Estimates: Sources and Implications

Klaus Keller, Penn State University, delivers a lecture entitled, "Deep Uncertainty of Climate Sensitivity Estimates: Sources and Implications", during the YCEI conference, "Uncertainty in Climate Change: A Conversation with Climate Scientists and Economists".

From playlist Uncertainty in Climate Change: A Conversation with Climate Scientists and Economists

Conditional Probability: Bayes’ Theorem – Disease Testing (Table and Formula)

This video shows how to determine conditional probability using a table and using Bayes' theorem. @mathipower4u

From playlist Probability