Numerical Optimization Algorithms: Constant and Diminishing Step Size

In this video we discuss two simple techniques for choosing the step size in a numerical optimization algorithm.
Topics and timestamps:
0:00 – Introduction
1:15 – Constant step size
14:37 – Diminishing step size
25:05 – Summary
All Optimization videos in a single playlist (https://www.

From playlist Optimization
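The two step-size rules named in this video can be illustrated with a minimal gradient descent sketch. The test function, starting point, and the specific constants (a constant step of 0.05 and a diminishing schedule 0.09 / (1 + 0.01k)) are illustrative assumptions, not values taken from the video.

```python
# Minimal sketch: gradient descent with a constant vs. a diminishing step size.
# Test function and constants are illustrative assumptions.

def grad(x):
    # Gradient of f(x, y) = (x - 3)^2 + 10 * (y + 1)^2, minimized at (3, -1)
    return (2.0 * (x[0] - 3.0), 20.0 * (x[1] + 1.0))

def gradient_descent(step_size, x0=(0.0, 0.0), iters=500):
    x = list(x0)
    for k in range(iters):
        g = grad(x)
        alpha = step_size(k)  # the step size may depend on the iteration index k
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x

# Constant rule: alpha_k = alpha for every k (must be small enough for stability,
# here below 2 / 20, where 20 is the largest curvature of f).
constant = lambda k: 0.05

# Diminishing rule: alpha_k -> 0 while the sum of all alpha_k still diverges.
diminishing = lambda k: 0.09 / (1 + 0.01 * k)

print(gradient_descent(constant))
print(gradient_descent(diminishing))
```

Both runs should approach (3, -1); the diminishing schedule trades some speed for not having to pick a single step size that works everywhere.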

Ex: Convert Height in Feet and Inches to Inches, Centimeters, and Meters

This video explains how to convert a height given in feet and inches to inches, centimeters, and meters. Site: http://mathispower4u.com Blog: http://mathispower4u.com

From playlist Unit Conversions: Converting Between Standard and Metric Units
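A short sketch of the feet-and-inches conversion described above, using the exact definitions 1 ft = 12 in and 1 in = 2.54 cm. The function name `convert_height` is hypothetical.

```python
# Minimal sketch: height in feet and inches -> inches, centimeters, meters.
INCHES_PER_FOOT = 12   # exact
CM_PER_INCH = 2.54     # exact by definition

def convert_height(feet, inches):
    total_inches = feet * INCHES_PER_FOOT + inches  # combine into one unit first
    centimeters = total_inches * CM_PER_INCH
    meters = centimeters / 100                      # 100 cm = 1 m
    return total_inches, centimeters, meters

print(convert_height(5, 10))  # 5 ft 10 in
```

For 5 ft 10 in this gives 70 in, about 177.8 cm, and about 1.778 m (floating-point output may show trailing digits).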

How to calculate Samples Size Proportions

An introduction to calculating sample sizes from proportions, describing the relationship between sample size and proportion. Like us on: http://www.facebook.com/PartyMoreStudyLess

From playlist Sample Size
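A sketch of the standard textbook formula for the sample size needed to estimate a proportion, n = z² p(1 − p) / E², where z is the critical value, p the anticipated proportion, and E the margin of error. Whether the video uses exactly this form is an assumption; the function name is hypothetical.

```python
import math

def sample_size_proportion(z, margin_of_error, p=0.5):
    # p = 0.5 maximizes p * (1 - p), giving the most conservative (largest) n
    n = z ** 2 * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n)  # round up so the margin of error is not exceeded

# 95% confidence (z = 1.96), margin of error 5 percentage points
print(sample_size_proportion(1.96, 0.05))
```

With no prior estimate of p, using 0.5 gives n = 384.16, rounded up to 385.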

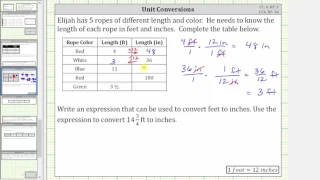

Convert Inches to Feet and Feet to Inches (CC:6.RP.3)

This video explains how to convert inches to feet and feet to inches using a proportion. http://mathispower4u.com

From playlist Unit Conversions: American or Standard Units
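The proportion 12 in : 1 ft from this video, solved in each direction, can be sketched with exact fractions. The function names are hypothetical.

```python
from fractions import Fraction

INCHES_PER_FOOT = 12  # the proportion 12 in : 1 ft

def inches_to_feet(inches):
    # inches / 12 = feet / 1, solved for feet
    return Fraction(inches) / INCHES_PER_FOOT

def feet_to_inches(feet):
    # 12 / 1 = inches / feet, solved for inches
    return Fraction(feet) * INCHES_PER_FOOT

print(inches_to_feet(30))  # 5/2, i.e. 2.5 ft
print(feet_to_inches(4))   # 48
```

Using `Fraction` keeps results such as 5/2 ft exact instead of introducing floating-point rounding.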

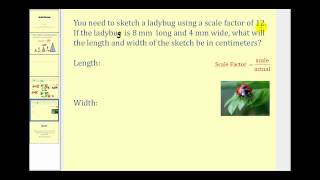

This video shows how to use a unit scale to determine the actual dimensions from a model, and how to determine the dimensions of a model from the actual dimensions. http://mathispower4u.yolasite.com/

From playlist Unit Scale and Scale Factor
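Using a scale such as "1 in : 4 ft" amounts to solving the proportion scale_model : scale_actual = model_length : actual_length. A minimal sketch, assuming the scale is given as two numbers and each result carries the units of the matching side of the scale; the function names are hypothetical.

```python
from fractions import Fraction

def actual_from_model(model_length, scale_model, scale_actual):
    # scale_model / scale_actual = model_length / actual_length, solved for actual
    return Fraction(model_length) * Fraction(scale_actual) / Fraction(scale_model)

def model_from_actual(actual_length, scale_model, scale_actual):
    # same proportion, solved for the model length
    return Fraction(actual_length) * Fraction(scale_model) / Fraction(scale_actual)

# Scale 1 in : 4 ft
print(actual_from_model(6, 1, 4))   # 6 in on the model -> 24 ft actual
print(model_from_actual(18, 1, 4))  # 18 ft actual -> 9/2 in on the model
```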

A video on how to calculate the sample size, including a discussion of how the standard deviation affects the sample size. Like us on: http://www.facebook.com/PartyMoreStudyLess Related video: How to calculate Samples Size Proportions http://youtu.be/LGFqxJdk20o

From playlist Sample Size
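The effect of the standard deviation on sample size can be seen in the common formula for estimating a mean, n = (z σ / E)², where σ is the (assumed known) population standard deviation and E the margin of error. That the video uses this exact form is an assumption; the function name is hypothetical.

```python
import math

def sample_size_mean(z, sigma, margin_of_error):
    # n grows with the square of sigma: doubling sigma roughly quadruples n
    n = (z * sigma / margin_of_error) ** 2
    return math.ceil(n)  # always round up

print(sample_size_mean(1.96, 15, 5))
print(sample_size_mean(1.96, 30, 5))
```

Here σ = 15 gives 34.57…, rounded up to 35, while σ = 30 gives 138.29…, rounded up to 139.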

Numerical Optimization Algorithms: Step Size Via Line Minimization

In this video we discuss how to choose the step size in a numerical optimization algorithm using the Line Minimization technique.
Topics and timestamps:
0:00 – Introduction
2:30 – Single iteration of line minimization
22:58 – Numerical results with line minimization
30:13 – Challenges wit

From playlist Optimization
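Line minimization picks the step α that minimizes f along the search direction. For the special case of a quadratic f(x) = ½ xᵀAx − bᵀx with A symmetric positive definite and direction −g (g = Ax − b), that minimizer has the closed form α = gᵀg / gᵀAg. The sketch below uses this closed form; the matrix A and vector b are illustrative assumptions, and a general f would instead need a one-dimensional numerical search.

```python
# Minimal sketch: steepest descent with exact line minimization on a quadratic.
A = [[3.0, 1.0], [1.0, 2.0]]  # symmetric positive definite (assumed example)
b = [1.0, 1.0]

def matvec(M, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def steepest_descent_exact(x0, iters=50, tol=1e-24):
    x = list(x0)
    for _ in range(iters):
        g = [ai - bi for ai, bi in zip(matvec(A, x), b)]  # gradient A x - b
        gg = dot(g, g)
        if gg < tol:  # gradient is (numerically) zero: stop
            break
        alpha = gg / dot(g, matvec(A, g))  # argmin over alpha of f(x - alpha * g)
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x

print(steepest_descent_exact([0.0, 0.0]))  # approaches the solution of A x = b
```

The minimizer here solves Ax = b, i.e. (0.2, 0.4).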

Numerical Optimization Algorithms: Gradient Descent

In this video we discuss a general framework for numerical optimization algorithms. We will see that this involves choosing a direction and step size at each step of the algorithm. In this video, we investigate how to choose a direction using the gradient descent method. Future videos d

From playlist Optimization

Alina Ene: Adaptive gradient descent methods for constrained optimization

Adaptive gradient descent methods, such as the celebrated Adagrad algorithm (Duchi, Hazan, and Singer; McMahan and Streeter) and ADAM algorithm (Kingma and Ba), are some of the most popular and influential iterative algorithms for optimizing modern machine learning models. Algorithms in th

From playlist Workshop: Continuous approaches to discrete optimization
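For readers unfamiliar with Adagrad, here is a minimal sketch of its diagonal update, x ← x − η g / √(Σ g²), combined with projection onto a box constraint. This is generic projected Adagrad chosen for illustration, not the algorithms presented in the talk; the box constraint, η = 0.5, and the test function are assumptions. For a box, clipping each coordinate is the exact projection even under Adagrad's diagonal scaling, because the constraint separates by coordinate.

```python
# Minimal sketch: diagonal Adagrad with projection onto a box [lower, upper].
def projected_adagrad(grad, x0, lower, upper, eta=0.5, iters=2000, eps=1e-8):
    x = list(x0)
    G = [0.0] * len(x)  # running sum of squared gradients, per coordinate
    for _ in range(iters):
        g = grad(x)
        for i in range(len(x)):
            G[i] += g[i] ** 2
            # per-coordinate step size eta / sqrt(G[i]) shrinks as gradients accumulate
            x[i] -= eta * g[i] / (G[i] ** 0.5 + eps)
            # project back onto the box by clipping
            x[i] = min(max(x[i], lower[i]), upper[i])
    return x

# f(x) = (x0 - 2)^2 + (x1 + 3)^2; unconstrained minimum at (2, -3)
grad_f = lambda x: [2 * (x[0] - 2), 2 * (x[1] + 3)]
print(projected_adagrad(grad_f, [0.0, 0.0], [-1.0, -1.0], [1.0, 1.0]))
```

With the box [-1, 1]², the constrained minimizer is the corner (1, -1).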

Stochastic Gradient Descent: where optimization meets machine learning- Rachel Ward

2022 Program for Women and Mathematics: The Mathematics of Machine Learning
Topic: Stochastic Gradient Descent: where optimization meets machine learning
Speaker: Rachel Ward
Affiliation: University of Texas, Austin
Date: May 26, 2022
Stochastic Gradient Descent (SGD) is the de facto op

From playlist Mathematics
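The core SGD loop is simple to state: at each step, sample one data point and move against the gradient of that point's loss alone. A minimal sketch, not taken from the talk, fitting a line y = w x + c to noiseless synthetic data with squared loss; the data, learning rate 0.1, and seed are assumptions.

```python
import random

def sgd_linear_fit(xs, ys, lr=0.1, steps=10000, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    w, c = 0.0, 0.0
    for _ in range(steps):
        i = rng.randrange(len(xs))   # sample one data point uniformly at random
        r = w * xs[i] + c - ys[i]    # residual of that single sample
        # gradient of 0.5 * r^2 with respect to (w, c) is (r * x_i, r)
        w -= lr * r * xs[i]
        c -= lr * r
    return w, c

xs = [i / 10 for i in range(11)]
ys = [2 * x + 1 for x in xs]  # noiseless data from y = 2x + 1
print(sgd_linear_fit(xs, ys))
```

Because the data are noiseless, every per-sample gradient vanishes at the true parameters, so a constant learning rate is enough here; with noisy data, a decreasing learning rate is typically needed for convergence.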

Signaling Noise in Bacterial Chemotaxis by Shakuntala Chatterjee

PROGRAM: STATISTICAL BIOLOGICAL PHYSICS: FROM SINGLE MOLECULE TO CELL
ORGANIZERS: Debashish Chowdhury (IIT-Kanpur, India), Ambarish Kunwar (IIT-Bombay, India) and Prabal K Maiti (IISc, India)
DATE: 11 October 2022 to 22 October 2022
VENUE: Ramanujan Lecture Hall
'Fluctuation-and-noise' a

From playlist STATISTICAL BIOLOGICAL PHYSICS: FROM SINGLE MOLECULE TO CELL (2022)

ch9 8. Adaptive Runge-Kutta-Fehlberg method. Wen Shen

Wen Shen, Penn State University. Lectures are based on my book: "An Introduction to Numerical Computation", published by World Scientific, 2016. See promo video: https://youtu.be/MgS33HcgA_I

From playlist CMPSC/MATH 451 Videos. Wen Shen, Penn State University
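The adaptive Runge-Kutta-Fehlberg method computes 4th- and 5th-order solutions from the same six stages and uses their difference to estimate the local error and adjust the step size. Below is a minimal sketch for a scalar ODE y' = f(t, y), using the standard Fehlberg coefficients. The step-size update (safety factor 0.9, exponent 1/5, growth clamped to [0.1, 4]) and advancing with the 4th-order value are common choices assumed here, not necessarily those used in the lecture.

```python
def rkf45(f, t0, y0, t_end, h=0.1, tol=1e-8, h_min=1e-12):
    """Integrate the scalar ODE y' = f(t, y) from t0 to t_end."""
    t, y = t0, y0
    while t < t_end:
        last = h >= t_end - t
        if last:
            h = t_end - t  # shorten the final step to land exactly on t_end
        k1 = h * f(t, y)
        k2 = h * f(t + h / 4, y + k1 / 4)
        k3 = h * f(t + 3 * h / 8, y + 3 * k1 / 32 + 9 * k2 / 32)
        k4 = h * f(t + 12 * h / 13,
                   y + 1932 * k1 / 2197 - 7200 * k2 / 2197 + 7296 * k3 / 2197)
        k5 = h * f(t + h,
                   y + 439 * k1 / 216 - 8 * k2 + 3680 * k3 / 513 - 845 * k4 / 4104)
        k6 = h * f(t + h / 2,
                   y - 8 * k1 / 27 + 2 * k2 - 3544 * k3 / 2565
                   + 1859 * k4 / 4104 - 11 * k5 / 40)
        # 4th- and 5th-order estimates built from the same six stages
        y4 = y + 25 * k1 / 216 + 1408 * k3 / 2565 + 2197 * k4 / 4104 - k5 / 5
        y5 = (y + 16 * k1 / 135 + 6656 * k3 / 12825 + 28561 * k4 / 56430
              - 9 * k5 / 50 + 2 * k6 / 55)
        err = abs(y5 - y4)  # local error estimate
        if err <= tol:
            # accept the step and advance with the 4th-order value
            t = t_end if last else t + h
            y = y4
        # propose the next step size from the error estimate (rejected steps retry)
        factor = 4.0 if err == 0 else 0.9 * (tol / err) ** 0.2
        h *= min(4.0, max(0.1, factor))
        if t < t_end and h < h_min:
            raise RuntimeError("step size fell below h_min")
    return y

print(rkf45(lambda t, y: y, 0.0, 1.0, 1.0))  # y' = y, y(0) = 1, so y(1) = e
```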

Lecture 6E : rmsprop: Divide the gradient by a running average of its recent magnitude

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 6E : rmsprop: Divide the gradient by a running average of its recent magnitude

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]
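The idea in the lecture title, dividing the gradient by a running average of its recent magnitude, can be sketched per coordinate as follows. The decay 0.9 matches the value commonly quoted for rmsprop; the learning rate, the small `eps` guard against division by zero, and the test function are assumptions.

```python
def rmsprop(grad, x0, lr=0.01, decay=0.9, eps=1e-8, iters=3000):
    x = list(x0)
    mean_sq = [0.0] * len(x)  # running average of squared gradients, per coordinate
    for _ in range(iters):
        g = grad(x)
        for i in range(len(x)):
            mean_sq[i] = decay * mean_sq[i] + (1 - decay) * g[i] ** 2
            # divide the gradient by the root of its recent mean square
            x[i] -= lr * g[i] / (mean_sq[i] ** 0.5 + eps)
    return x

# Badly scaled quadratic: curvatures differ by a factor of 10
grad_f = lambda x: [2 * (x[0] - 2), 20 * (x[1] + 1)]
print(rmsprop(grad_f, [0.0, 0.0]))
```

Because the gradient is normalized, both coordinates move at a similar rate despite the different curvatures. With a constant learning rate the iterates settle into a small neighborhood of (2, -1), on the order of `lr`, rather than converging exactly.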

Lecture 6.5 — Rmsprop: normalize the gradient [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Learning Rate Grafting: Transferability of Optimizer Tuning (Machine Learning Research Paper Review)

Recent years in deep learning research have given rise to a plethora of optimization algorithms, such as SGD, AdaGrad, Adam, LARS, and LAMB, each of which claims its own peculiarities and advantages. In general, all algorithms modify two major th

From playlist Papers Explained
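Learning-rate grafting, as I understand the paper under review, combines two optimizers by taking the step length from one (M) and the step direction from the other (D): step = ‖Δ_M‖ · Δ_D / ‖Δ_D‖. The sketch below applies this to a single flat parameter vector with SGD as M and Adagrad as D; the paper applies it per layer, and all function names and constants here are hypothetical.

```python
import math

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def sgd_step(g, lr=0.1):
    return [-lr * gi for gi in g]

def adagrad_step(g, state, lr=0.1, eps=1e-8):
    # state holds the running sum of squared gradients (updated in place)
    for i, gi in enumerate(g):
        state[i] += gi * gi
    return [-lr * gi / (math.sqrt(s) + eps) for gi, s in zip(g, state)]

def grafted_step(magnitude_step, direction_step, eps=1e-16):
    # Rescale the direction step so its length matches the magnitude step.
    scale = norm(magnitude_step) / (norm(direction_step) + eps)
    return [scale * d for d in direction_step]

g = [3.0, -4.0]
m = sgd_step(g)                    # length 0.5
d = adagrad_step(g, [0.0, 0.0])    # direction roughly (-1, 1)
print(grafted_step(m, d))          # length 0.5, pointing along d
```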

Lecture 6/16 : Optimization: How to make the learning go faster

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013]
6A Overview of mini-batch gradient descent
6B A bag of tricks for mini-batch gradient descent
6C The momentum method
6D A separate, adaptive learning rate for each connection
6E rmsprop: Divide the gradient by a runni

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]
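The momentum method from part 6C can be sketched in one common formulation: keep a velocity v that decays by a factor μ each step and accumulates gradient steps, v ← μ v − η g, then x ← x + v. The lecture's exact notation may differ; μ = 0.9, η = 0.01, and the test function are assumptions.

```python
def momentum_gd(grad, x0, lr=0.01, mu=0.9, iters=1000):
    x = list(x0)
    v = [0.0] * len(x)  # velocity: exponentially decaying sum of past steps
    for _ in range(iters):
        g = grad(x)
        v = [mu * vi - lr * gi for vi, gi in zip(v, g)]
        x = [xi + vi for xi, vi in zip(x, v)]
    return x

# f(x, y) = (x - 3)^2 + 10 * (y + 1)^2, minimized at (3, -1)
grad_f = lambda x: [2 * (x[0] - 3), 20 * (x[1] + 1)]
print(momentum_gd(grad_f, [0.0, 0.0]))
```

The velocity lets consistent gradient directions build up speed, while oscillating components partly cancel across steps.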

New Simulink Solvers for Hybrid Systems: ode1be and odeN

Learn about the potential benefits of the new Simulink® ODE solvers odeN and ode1be. You’ll see a model containing frequent switching that will simulate faster when using either of these new solvers. - Physical Modeling | Developer Tech Showcase Playlist: https://www.youtube.com/playlist?

From playlist Modeling and Simulation | Developer Tech Showcase

This video shows how to use scale to determine the dimensions of a proportional model. http://mathispower4u.yolasite.com/

From playlist Unit Scale and Scale Factor